Journal Week 2 - HoangMinh45/CS526 GitHub Wiki

CS 526 F2015 Computer Graphics 2: Weekly Research and Project Journal

I. Articles Summary

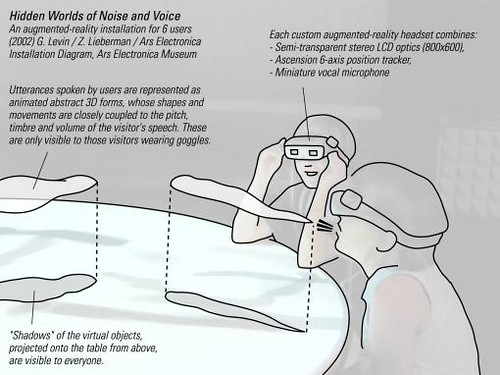

1. Levin, Golan, and Zachary Lieberman. "In-situ speech visualization in real-time interactive installation and performance." NPAR. Vol. 4. 2004.

Article link - International Symposium on Non-Photorealistic Animation and Rendering 2004

If we could visualize our speech, what would it look like? This paper presents three artworks that explore the image of voice and speech, taking inspiration from the animation Reci Reci Reci (Word Word Word) by Czech artist Michaela Pavlatova. Speech is visualized as different shapes whose forms and colors convey its emotional character.

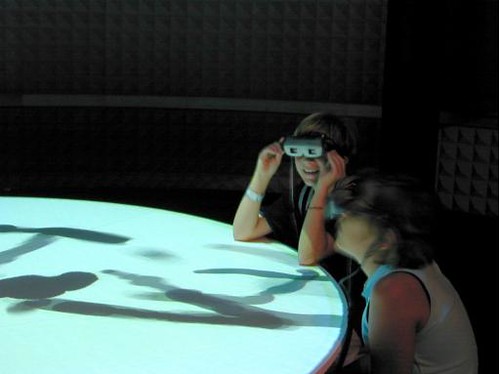

Artwork 1: Hidden World

The voices of participants are visualized as cloud-like shapes that can be seen through VR goggles. Speech is segmented, analyzed, and visualized: duration maps to the length of the shape, volume to its diameter, and pitch to its movement characteristics.
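The mapping described above can be sketched in a few lines of Python. This is only an illustration, not the authors' implementation: the class names, the scaling constants, and the reading of "movement characteristics" as a single wobble rate are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class SpeechSegment:
    duration_s: float  # length of the utterance in seconds
    volume: float      # normalized loudness in [0, 1]
    pitch_hz: float    # estimated fundamental frequency in Hz


@dataclass
class CloudShape:
    length: float       # driven by duration
    diameter: float     # driven by volume
    wobble_rate: float  # driven by pitch (hypothetical movement parameter)


def segment_to_shape(seg, length_per_s=100.0, max_diameter=50.0, wobble_per_hz=0.01):
    """Map one analyzed speech segment to shape parameters.

    All scaling constants are made-up values for illustration.
    """
    volume = min(max(seg.volume, 0.0), 1.0)  # clamp loudness to [0, 1]
    return CloudShape(
        length=seg.duration_s * length_per_s,
        diameter=volume * max_diameter,
        wobble_rate=seg.pitch_hz * wobble_per_hz,
    )
```

For example, `segment_to_shape(SpeechSegment(2.0, 0.5, 200.0))` yields a shape that is twice as long as one for a one-second utterance at the same volume and pitch.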

Artwork 2: RE:MARK

As in Hidden World, the voices of participants are visualized. This time the speech is analyzed for phonemes: if a phoneme is recognized, that phoneme is displayed; if not, a shape is displayed based on the timbral character of the vocalization, where the higher the frequency, the pointier and more irregular the shape. Computer vision is used to make the shapes appear as if they come out of the participants' heads.
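The decision logic in RE:MARK could look roughly like the following Python sketch. It assumes that a phoneme recognizer and a timbre measure (here a spectral centroid in Hz) already exist upstream; the function names, the 4000 Hz ceiling, and the alternating-vertex outline are hypothetical choices, and the "irregular" part of the shape is left out for brevity.

```python
import math
from typing import Optional


def spikiness_from_centroid(centroid_hz, max_hz=4000.0):
    """Map a timbre measure to [0, 1]; higher frequency means a pointier shape."""
    return min(max(centroid_hz / max_hz, 0.0), 1.0)


def blob_outline(spikiness, points=16, radius=1.0):
    """Return polygon vertices; odd vertices are pulled inward as spikiness grows."""
    outline = []
    for i in range(points):
        angle = 2 * math.pi * i / points
        r = radius * (1.0 - 0.6 * spikiness) if i % 2 else radius
        outline.append((r * math.cos(angle), r * math.sin(angle)))
    return outline


def visualize(phoneme: Optional[str], centroid_hz: float):
    """Show the phoneme if one was recognized, otherwise a timbre-driven shape."""
    if phoneme is not None:
        return ("glyph", phoneme)
    return ("shape", blob_outline(spikiness_from_centroid(centroid_hz)))
```

Calling `visualize("oo", 500.0)` returns the recognized phoneme as a glyph, while `visualize(None, 3500.0)` returns a star-like outline with deep notches.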

Performance: Messa di Voce

Similar to RE:MARK, but this time incorporating body movement interaction.

Personal Comment: The three artworks are fascinating (they attract a lot of kids when exhibited). They help to answer the question "what does speech look like?" and explore the aesthetic aspect of speech.

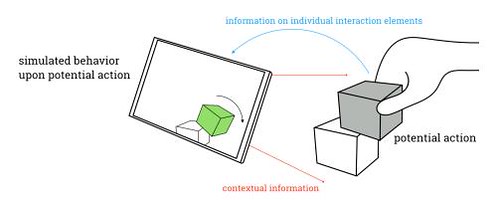

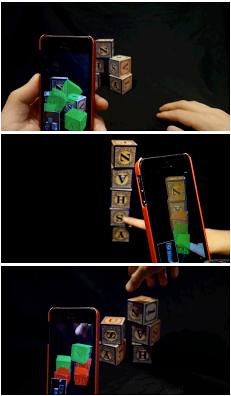

2. Leigh, Sang-won, and Pattie Maes. "AfterMath: Visualizing Consequences of Actions through Augmented Reality." Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems. ACM, 2015.

Article link - CHI EA '15 Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems

This paper presents AfterMath, a user interface concept that predicts and displays the consequences of users' actions. The authors built a prototype that uses physics simulation and AR technology to demonstrate the use of such a system.

This system helps us make better decisions based on what we learn about the potential consequences.

Related work in AR: SixthSense (displays information on existing objects) and FreeD (uses AR as step-by-step guidance for a task). However, there has not been any research on AR-based afforded consequences.

There are two types of afforded consequence prediction:

- Model-driven prediction: based on physics simulation

- Data-driven prediction: observe the object over time, then make predictions based on a database or machine learning
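As a rough illustration of the model-driven case, the sketch below predicts where a thrown object would land by stepping a simple physics model forward in time. It is not the simulation used in the paper: the 2D point-mass model, the flat ground at `y = 0`, the semi-implicit Euler integrator, and all names are assumptions.

```python
def predict_trajectory(position, velocity, dt=0.01, gravity=-9.81, max_steps=10_000):
    """Predict the path of a point mass until it reaches the ground (y = 0).

    position and velocity are (x, y) tuples in metres and metres per second.
    Uses semi-implicit Euler steps; air resistance is ignored.
    """
    x, y = position
    vx, vy = velocity
    path = [(x, y)]
    for _ in range(max_steps):
        vy += gravity * dt  # update velocity first
        x += vx * dt        # then advance position with the new velocity
        y += vy * dt
        if y <= 0.0:
            path.append((x, 0.0))  # clamp the final point to the ground
            break
        path.append((x, y))
    return path
```

An AR overlay could draw the returned path before the user lets go of the object, for example `predict_trajectory((0.0, 1.0), (1.0, 0.0))` for an object pushed sideways off a one-metre-high table.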

Potential Application

Making better decisions about irreversible actions.

Supporting situations in which humans cannot make precise predictions.

Discussion

How can consequences best be visualized for a given context? Some contexts need specific visualizations, while others need abstract ones.

Personal Comment: This research (a work in progress) has a wide range of applications that help people make better decisions, such as manual creation, action prediction in rescue operations, and civilian/military use.

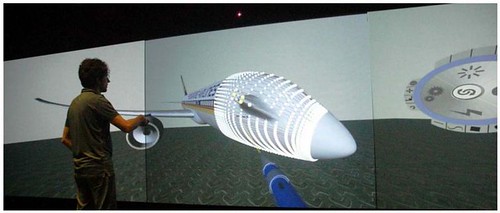

3. Martini, Andrea, et al. "A novel 3D user interface for the immersive design review." 3D User Interfaces (3DUI), 2015 IEEE Symposium on. IEEE, 2015.

Article link - 2015 IEEE Symposium on 3D User Interfaces

This paper presents a hardware/software platform for design review sessions. The system allows users to interact with CAD models and visualize different data types simultaneously. The work includes the development of an immersive interface and a smart 3D disk (projected onto the display) for interacting with virtual objects.

The software part of the system is written in C++ on top of popular VR libraries such as OpenSceneGraph, Delta3D, OpenCascade, and VRPN. It comprises the Dune core, components, engines, IO, and visualization. The user interacts with virtual objects and with the 3D smart disk through a Wii controller and a Kinect.

The system has been used and evaluated by both designers and people from outside the design field, and both groups gave good feedback about the smart disk. However, more work is needed to improve its usability.

II. Symposium

2015 Workshop on Computational Aesthetics (Expressive 2015 - Joint Symposium on Computational Aesthetics and Sketch Based Interfaces and Modeling and Non-Photorealistic Animation and Rendering - Istanbul, Turkey — June 20 - 22, 2015)

Proceedings link

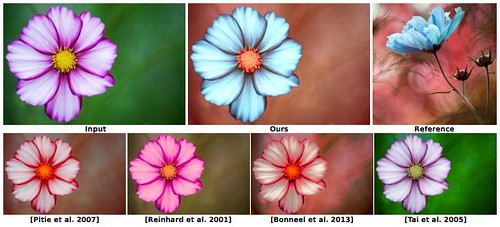

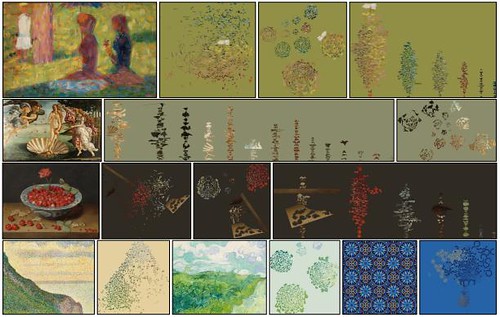

The workshop was divided into three sessions: Rendering and Modeling, Stylization, and Working with Images. I am most interested in the Stylization and Working with Images sessions; the research papers in these two sessions are fascinating and beautiful:

Style-aware robust color transfer

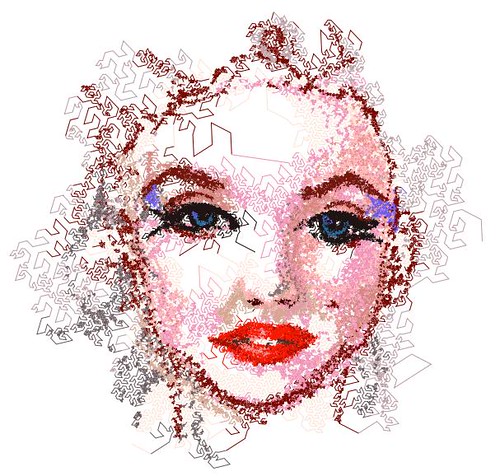

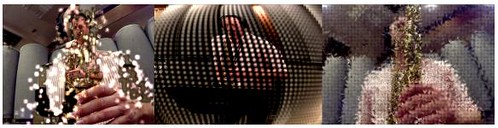

Shake it up - image decomposition and rearrangements of its constituents

Image stylization by oil paint filtering using color palettes

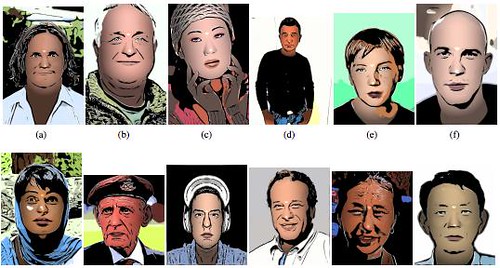

Non-photorealistic rendering of portraits

These works are very interesting because they either explore new ideas, such as combining video and sound in video granular synthesis, or use complex algorithms, as in Painting with flowsnakes and Image stylization by oil paint filtering using color palettes, or pair a funny idea with a complex algorithm, as in Non-photorealistic rendering of portraits.

III. Ideas

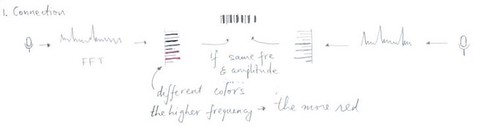

1. SoundConnection

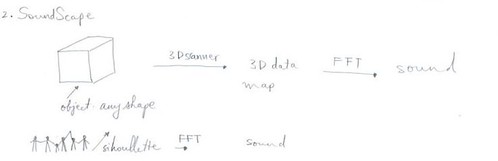

2. SoundScape

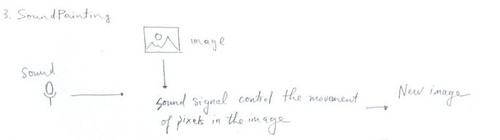

3. SoundPainting