pFlogger_UserGuide - GEOS-ESM/MAPL GitHub Wiki

In High Performance Computing (HPC) applications, the use of text-base

messages (for instance using print statements) can be cumbersome.

The messages may not be organized enough to contain critical information

developers and users need to understand the behavior of their applications.

The typical problems we may encounter are:

- Important messages obscured by fountain of routine messages

- Performance

- User adds a print statement in an inner loop or across all processes

- Anonymity – important message of unknown origin

- Which process

- Which software component

- Loss of productivity

- Recompile to activate low-level debug diagnostics

In HPC software running on multi-core platforms, we need to have a framework that facilitate the creation of text-base messages providing useful information on the behavior of the software so as to help users to debug and track down errors systematically. The framework needs to be able to:

- Route warnings and errors to prominent location

- And profiler data and …

- Suppress low severity (”debugging”) messages

- Suppress duplicate messages on sibling processes

- Annotate messages with:

- Time stamp

- Process rank

- Software component

- Other application-specific metadata

All these features need to be done dynamically at run time (without recompiling the application).

pFLogger (parallel Fortran logger)

mimics the Python logging module

by implementing its features to support Fortran HPC applications.

As logging, pFLogger enables Fortran code developpers and users

to better control where, how, and what they log, with much more granularity.

They can then reduce debugging time, improve code quality, and increase the visibility of their applications.

The Python logging module

provides a flexible framework for

producing log messages from Python applications.

It provides a way for applications to configure different log handlers and a way of routing log messages to these handlers.

It is used to monitor applications by for tracking and recording events that occur,

such as errors, warnings, or other important information.

The produced log messages can be formatted to meet different requirements,

customized with timestamps,

sent to different destinations (terminal, files),

and filtered based on their severity.

This monitoring helps diagnose issues, understand runtime behavior,

and analyze user interactions, offering insights into design decisions.

It can help developers easily identify areas where their code needs to be improved or better optimised.

This leads to faster development cycles, fewer bugs and higher-quality code.

The main components of the logging module are:

- Loggers: expose an interface that an application can use to log messages at run time. They also determine which log messages to act upon based upon severity (the default filtering facility).

-

Handlers: send the logs created by loggers to their destination. Popular handlers include:

-

FileHandler: For sending log messages to a file -

StreamHandler: For sending log messages to an output stream like stdout -

MemoryHandler: For sending messages to a buffer in memory, which is flushed whenever specific criteria are met.` -

HTTPHandler: For sending log messages with HTTP -

SysLogHandler: For sending messages to a Unix syslog daemon, possibly on a remote machine.

-

- Filters: provide a mechanism to determine which logs are recorded. They are used to perform more advanced filtering than level filtering.

- Formatters: determine the output formatting of log messages. They are used by the Python logging handlers to enhance the information available through LogRecord attributes.

To start with Python logging in an existing workflow,

- First, create a logger object using the

basicConfig()`` method from thelogging`` module. - Then, set up handlers for each type of log message to be captured (e.g., debug messages or errors).

- Finally, add filters and formatters as needed to customize how logs are displayed or stored.

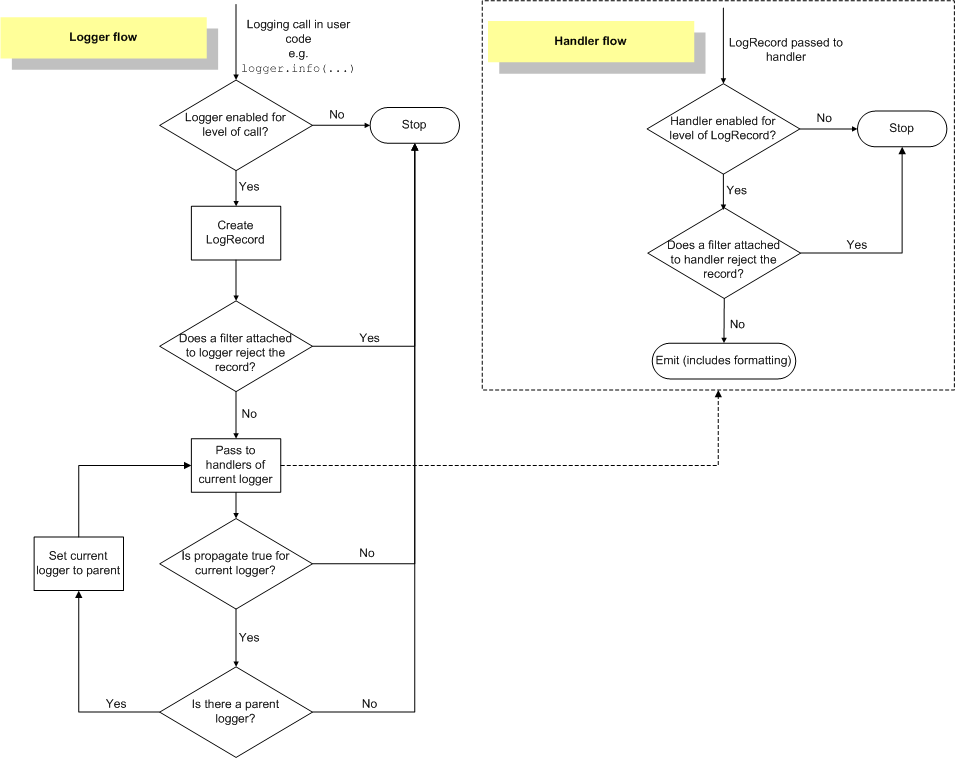

The logging flow can be summarized in the following diagram:

The logging module has six logging levels that are of incremental severity:

| Level | Numeric Value | Description |

|---|---|---|

| NOTSET | 0 | Default level when a new logger is created. Setting the root logger to NOTSET logs all messages. For other loggers, NOTSET messages are delegated to parent loggers until a level other than NOTSET is found. |

| DEBUG | 10 | Messages useful for debugging how an application is running. |

| INFO | 20 | Messages that log standard/expected events. |

| WARNING | 30 | Potentially negative events that may cause abnormal operation or otherwise require attention (such as the use of a deprecated API). |

| ERROR | 40 | High severity messages generally related to an operation failing that does not cause the program to crash or exit. |

| CRITICAL | 50 | Highest severity messages; may cause a program to crash or exit. |

To use the toool, a user needs to include the line:

import logging

to their Python code and then add statements to create log messages. Assume that the user doesn't set a specific logging level, the following code snippet shows how you can use all the five logging levels with the syntax:

logging.debug("A DEBUG Message")

logging.info("An INFO")

logging.warning("A WARNING")

logging.error("An ERROR")

logging.critical("A message of CRITICAL severity")The above statements will produce messages corresponding only to warning, error, and critical:

WARNING:root:A WARNING

ERROR:root:An ERROR

CRITICAL:root:A message of CRITICAL severity

This is because the default logging level is warning and the code will only print

messages that have levels equal to warning or above.

Note also that the default message format is:

<SEVERITY>:<NAME>:<MESSAGE>

where <NAME> is the name of our logger.

We can customized the messages by creating our own logger (instead of using root)

using a function call (logger = logging.getLogger()) at the top of the file we want to monitor.

- LoggerManager – configured with global comm (defaults to MPI_COMM_WORLD)

-

Logger – can be associated with a communicator (defaults to global)

- root_level: independent threshold for root process

-

Handler

- Lock – used to allow multiple processes to share access to a file

- MpiLock – uses one-sided MPI communication

- FileSystemLock – limited portability, but allows multi-executable sharing

- MpiFilter – used to restrict which processes’ messages are reported

- MpiFileHandler subclass

- Messages from each process are routed to separate file

- Lock – used to allow multiple processes to share access to a file

- MpiFormatter subclass: knows about rank and #PE’s for annotations

Subcommunicators: How to specify in run-time configuration file?

- Construct communicators prior to initializing framework

- Build dictionary of named communicators

- Pass as optional argument to framework configuration step

Simulation time: Enable annotation of messages with model’s internal representation of time/phase information

- Create a custom procedure that accesses model internal state and returns a dictionary of timerelated fields. E.g. {‘year’: 2000, ’month’: ’May’, ‘phase’: ‘quality control’}

- Set logger global procedure pointer

get_sim_time()to custom procedure.

subroutine sub_A()

use pflogger

integer :: i

class (Logger), pointer :: log

class (Logger), pointer :: plog

log => logging%get_logger('main.A')

plog => logging%get_logger('parallel.A')

call log%info('at line: %i3.3 in file: %a', 11,"complexMpi.F90")

call log%debug('inside sub_A')

call plog%info('at line: %i3.3 in file: %a', 13,"complexMpi.F90")

call plog%debug('inside sub_A')

call log%warning('empty procedure')

call log%info('at line: %i3.3 in file: %a', 17,"complexMpi.F90")

end subroutine sub_A

subroutine sub_B()

use pflogger

integer :: i

class (Logger), pointer :: log

class (Logger), pointer :: plog

log => logging%get_logger('main.B')

plog => logging%get_logger('parallel.B')

call log%info('at line: %i3.3 in file: %a', 33,"complexMpi.F90")

call log%debug('inside sub_B')

call plog%debug('inside sub_B')

call log%error('this procedure is empty as well')

call log%info('at line: %i3.3 in file: %a', 38,"complexMpi.F90")

end subroutine sub_B

program main

use pflogger

implicit none

integer :: ier

class (Logger), pointer :: log

integer :: rc

integer :: rank

call mpi_init(ier)

block

use mpi

call mpi_comm_rank(MPI_COMM_WORLD, rank, rc)

end block

call initialize() ! init logger

call logging%load_file('all_in_one.cfg')

log => logging%get_logger('main')

call log%info('at line: %i3.3 in file: %a', 63,"complexMpi.F90")

call sub_A()

call log%info('at line: %i3.3 in file: %a', 66,"complexMpi.F90")

call sub_B()

call log%info('at line: %i3.3 in file: %a', 69,"complexMpi.F90")

call mpi_finalize(ier)

end program mainschema_version: 1

locks:

mpi:

class: MpiLock

comm: MPI_COMM_WORLD

formatters:

basic:

class: Formatter

format: '%(name)a~: %(level_name)a~: %(message)a'

mpi:

class: MpiFormatter

format: '%(mpi_rank)i4.4~: %(name)~: %(level_name)a~: %(message)a'

comm: MPI_COMM_WORLD

column:

class: Formatter

format: '(%(i)i3.3,%(j)i3.3): %(level_name)'

handlers:

console:

class: streamhandler

formatter: basic

unit: OUTPUT_UNIT

level: WARNING

warnings:

class: FileHandler

filename: warnings.log

lock: mpi

level: WARNING

formatter: basic

errors:

class: StreamHandler

formatter: basic

unit: ERROR_UNIT

level: ERROR

mpi_shared:

class: FileHandler

filename: allPEs.log

formatter: mpi

comm: MPI_COMM_WORLD

lock: mpi

rank_keyword: rank

level: DEBUG

mpi_debug:

class: MpiFileHandler

formatter: basic

filename: debug_%(rank)i3.3~.log

comm: MPI_COMM_WORLD

rank_prefix: rank

level: DEBUG

root:

parallel: true

handlers: [warnings,errors]

level: WARNING

loggers:

main:

parallel: false

comm: MPI_COMM_WORLD

level: INFO

parallel:

parallel: true

handlers: [mpi_debug,mpi_shared]

lock: mpi

propagate: false

level: DEBUG

main.A:

level: WARNING

main.B:

level: INFO

parallel.A:

level: WARNING

parallel.B:

level: DEBUG

- Python Logging HOWTO

- Python Logging Cookbook

- Arfan Sharif, Python Logging Guide: The Basics, February 3, 2023.

- Thomas Clune and Carlos Cruz,

pFlogger: The Parallel Fortran LOgging UTility, CodeSE17, Denver, CO. - Thomas L. Clune and Carlos A. Cruz,

pFLogger: The parallel Fortran logging framework for HPC applications, Proceedings of the 1st International Workshop on Software Engineering for High Performance Computing in Computational and Data-enabled Science & Engineering, November 2017, Pages 18-21, https://doi.org/10.1145/3144763.3144764