Setting up and using Docker for software development - ECE-180D-WS-2024/Wiki-Knowledge-Base GitHub Wiki

Setting Up and Using Docker for Software Development

Docker is a powerful tool that has transformed software development with its innovative approach to creating, deploying, and running applications. By utilizing containerization technology, Docker enables developers to package an application with all of its dependencies into a standardized unit for software development, known as a container. This tutorial will guide you through the basics of Docker, its core concepts, installation on various operating systems, and practical usage for software development.

Understanding Docker Basics

Docker architecture diagram showing the relationship between containers, images, and the Docker engine.


Core Concepts

Three core elements underpin Docker: containers, Dockerfiles, and Docker images.

Containers stand out as lightweight, executable packages that encapsulate everything necessary to run an application, including the code, runtime, libraries, environment variables, and configuration files. This design principle distinguishes them from traditional virtual machines by not bundling an entire operating system but instead sharing the host system's kernel, significantly enhancing efficiency and reducing startup times.

Dockerfiles are essentially text documents that serve as a blueprint for Docker to build images. They contain a series of commands that a user might execute on the command line to assemble an image, providing a reproducible method for image creation.

Docker images are immutable, read-only templates from which containers are created. They are built from Dockerfiles and can be stored and shared via a Docker registry, such as Docker Hub, which facilitates the distribution and versioning of images.

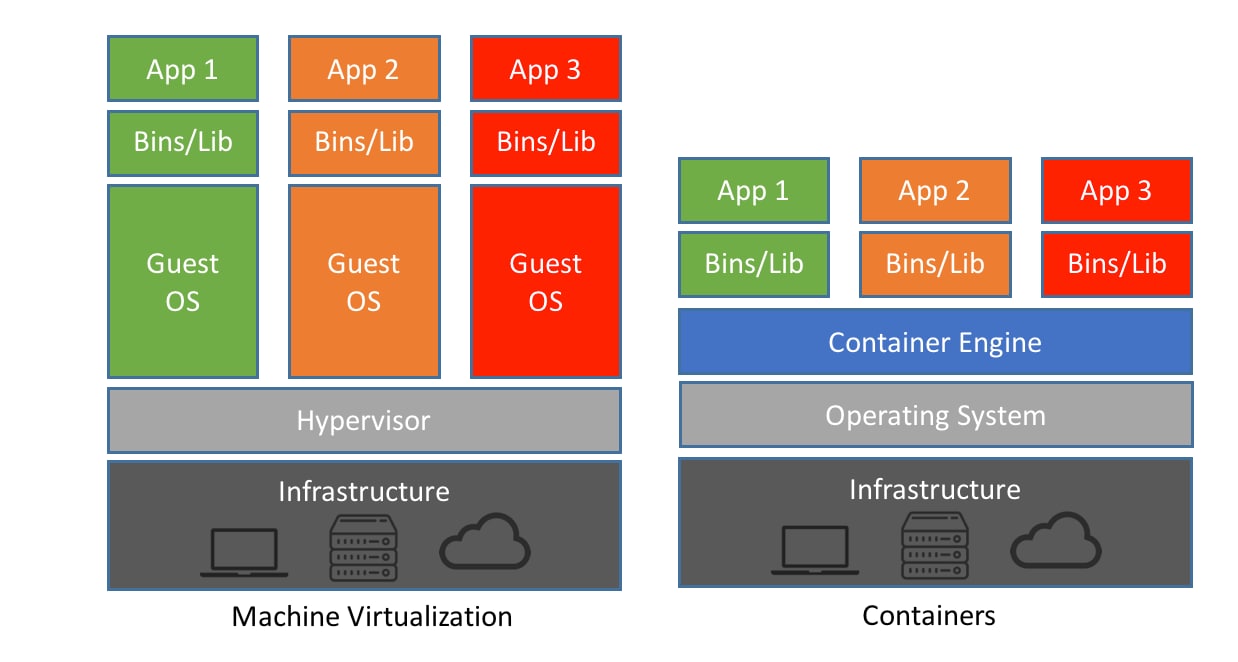

Containers vs. Traditional Virtualization

Comparison of container architecture with VM architecture.


Containers are often compared to virtual machines (VMs), but they operate differently. VMs include a full copy of an operating system, a virtual copy of the hardware that the OS requires to run, and the application and its dependencies. Containers, on the other hand, share the host system's kernel and isolate the application and its dependencies within a container. This makes containers much more lightweight and efficient than VMs.
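To see the kernel sharing described above for yourself, the short script below (a hypothetical `kernel_check.py`) prints the kernel it runs on. Run it once on a Linux host and once inside a container, for example with `docker run --rm -v "$PWD":/src python:3.8-slim python /src/kernel_check.py`. On a Linux host both runs should report the same kernel release, because a container does not boot a kernel of its own. On Docker Desktop for Windows or macOS, the container instead reports the kernel of the lightweight Linux VM that Docker Desktop runs behind the scenes.

```python
"""kernel_check.py: print the kernel this process runs on (hypothetical helper)."""
import platform


def kernel_info() -> str:
    # platform.system() is the OS name (e.g. "Linux"); platform.release() is the
    # kernel release string, which a container shares with its host.
    return f"{platform.system()} {platform.release()}"


if __name__ == "__main__":
    print(kernel_info())
```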

Docker in Cloud Environments

Docker, a leading containerization platform, is reshaping cloud computing by making it straightforward to build applications that run in containerized environments. Because a container encapsulates an application together with everything it needs, the application becomes portable across different cloud platforms (Ohri, 2021). By automating the creation and deployment of applications, Docker simplifies the work of developers and system administrators and lets organizations develop and run applications from any location. This standardized way of packaging and distributing applications makes operations more uniform and simplifies infrastructure management (Ohri, 2021).

Docker cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and A2 Hosting offer businesses platforms on which they can run Docker containers in a scalable and flexible environment. These services let organizations deploy, manage, and monitor Docker applications using cloud capabilities, increasing their efficiency and agility.

Using Docker in cloud environments offers significant advantages in speed, consistency, resource efficiency, density, and portability. AWS is one cloud provider that offers services designed specifically for container deployment, providing flexibility, scalability, and integration of containers into the development lifecycle (AWS, n.d.). In particular, AWS provides several tailored container services:

- Amazon Elastic Container Service (ECS) is a fully managed container orchestration service for launching containers quickly. It offers two deployment options: on Amazon EC2 instances, or serverless on AWS Fargate, which removes the need to manage servers or clusters (AWS, n.d.).

- Amazon Elastic Kubernetes Service (EKS) provides a managed Kubernetes environment that eases the migration of Kubernetes applications and automates tasks such as patch management and node configuration (AWS, n.d.).

- AWS Fargate, a serverless compute engine for containers that works with both ECS and EKS, removes the need to provision and manage servers or clusters and isolates each task or pod for improved security and efficiency (AWS, n.d.).

The top Docker cloud service providers listed by Shenoy (2023) include AWS Elastic Container Service, Google Kubernetes Engine (GKE), Microsoft Azure Container Service (ACS, since retired in favor of Azure Kubernetes Service), IBM Cloud Container Service, and Docker Cloud (since discontinued). Although all of these run Docker workloads in the cloud, they differ in pricing options, ease of use, and support. AWS is valued for its flexibility and cost-effectiveness, and its large community and comprehensive documentation make troubleshooting easier. Google Cloud Platform is known for transparent pricing, and Microsoft Azure for its easy-to-use interface (Shenoy, 2023).

Installing Docker

Windows and MacOS

For Windows and MacOS, Docker provides Docker Desktop, an easy-to-install application that includes Docker Engine, Docker CLI client, Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper.

- Windows: Download the installer from the Docker website (docs.docker.com) and follow the installation wizard. Docker Desktop for Windows requires a 64-bit edition of Windows 10 or 11 and runs on either the WSL 2 or the Hyper-V backend; check the official documentation for the currently supported builds. You may need to enable hardware virtualization in your system's BIOS/UEFI settings.

- MacOS: Download the installer for your chip (Apple silicon or Intel) from the Docker website and follow the installation guide. Docker Desktop for Mac supports the current and the two previous major macOS releases. After installation, Docker will request permission to access system resources such as the file system and network.

Linux

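Installing Docker on Linux varies by distribution. On Debian and Ubuntu, the `docker-ce` packages come from Docker's own apt repository, so first add that repository as described in the Docker Engine installation guide for your distribution, then install Docker Engine:

```shell
sudo apt-get update
sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
```

After installation, verify that Docker is installed correctly by running `docker --version`. On Linux, Docker commands require `sudo` unless your user belongs to the `docker` group.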
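If you script your setup (for example, to check prerequisites before building images), the same check can be done from Python. This is a minimal sketch using only the standard library; it assumes `docker --version` prints a line such as `Docker version 24.0.7, build afdd53b`, and the helper names are hypothetical.

```python
"""Hypothetical helper that checks the Docker CLI from a Python setup script."""
from __future__ import annotations

import re
import shutil
import subprocess


def parse_docker_version(output: str) -> tuple[int, int, int]:
    # Expects output such as "Docker version 24.0.7, build afdd53b".
    match = re.search(r"Docker version (\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError(f"unrecognized docker --version output: {output!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def installed_docker_version() -> tuple[int, int, int] | None:
    # Returns None when the docker CLI is not on PATH.
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(
        ["docker", "--version"], capture_output=True, text=True, check=True
    )
    return parse_docker_version(result.stdout)


if __name__ == "__main__":
    version = installed_docker_version()
    print("docker not found on PATH" if version is None else "Docker %d.%d.%d" % version)
```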

Setting Up Your First Docker Environment

- Run a Test Container: To verify your installation, run a simple hello-world container:

```shell
docker run hello-world
```

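- Create Your First Dockerfile: In the project directory that holds your Flask application's `app.py`, create a file named `Dockerfile` and open it in your text editor. Add the following content to package a simple Python Flask application:

```dockerfile
# Base image: slim variant of the official Python 3.8 image
FROM python:3.8-slim

# Working directory for the following instructions (created if missing)
WORKDIR /app

# Copy the build context (your project directory) into /app
COPY . /app

# Install Flask into the image at build time
RUN pip install flask

# Command executed when a container starts from this image
CMD ["python", "app.py"]
```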

Let's delve deeper into the Dockerfile above:

- `FROM python:3.8-slim`: Specifies the base image. Here, we use the slim variant of the official Python 3.8 image, which is lightweight and suitable for our Flask application.
- `WORKDIR /app`: Sets the working directory inside the image for the instructions that follow and for the running container, creating the directory if it does not exist.
- `COPY . /app`: Copies the contents of the build context (your project directory) into `/app`, the directory set by `WORKDIR`.
- `RUN pip install flask`: Installs Flask into the image at build time. For more complex applications, list dependencies in a `requirements.txt` file and use `RUN pip install -r requirements.txt`.
- `CMD ["python", "app.py"]`: The default command run when the container starts; here, it starts your Flask application. For the port mapping used below to work, `app.py` must make Flask listen on `0.0.0.0` rather than its default `127.0.0.1`.
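The Dockerfile's `CMD` runs `app.py`, a file this tutorial does not otherwise show. Below is a minimal sketch of it using Flask's `Flask` class and `route` decorator; the route and greeting text are illustrative. The `app.run(host="0.0.0.0", port=5000)` call is what lets traffic published with `docker run -p 5000:5000` reach the server. Save the file next to the Dockerfile before building the image.

```python
"""app.py: minimal Flask application started by the Dockerfile's CMD (illustrative)."""
from flask import Flask

app = Flask(__name__)


@app.route("/")
def index() -> str:
    # A string returned from a view becomes the response body with status 200.
    return "Hello from a Docker container!"


if __name__ == "__main__":
    # Listen on all interfaces so the port published by `docker run -p 5000:5000`
    # can reach the server; 127.0.0.1 would only accept in-container connections.
    app.run(host="0.0.0.0", port=5000)
```

This uses Flask's built-in development server; production images typically run the app under a WSGI server such as Gunicorn instead.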

- Build Your Docker Image: In the directory containing your Dockerfile, run:

```shell
docker build -t my-flask-app .
```

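- Run Your Container: Start your application:

```shell
docker run -p 5000:5000 my-flask-app
```

The `-p 5000:5000` flag publishes port 5000 of the container on port 5000 of your host (the format is `host:container`). Visit http://localhost:5000 in your web browser to see your application running.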
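Instead of checking in a browser, a script (for example, in a CI job) can poll the published port until the app answers, since a freshly started container may need a moment before it accepts connections. This is a minimal sketch using only Python's standard library; the function name `wait_for_http` and the timeout values are illustrative assumptions.

```python
"""Hypothetical smoke test: poll a URL until the containerized app answers."""
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once `url` answers with HTTP 200, or False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Refused connections, timeouts, and HTTP errors (urllib's URLError and
            # HTTPError are OSError subclasses) all mean "not ready yet".
            pass
        time.sleep(interval)
    return False


if __name__ == "__main__":
    ok = wait_for_http("http://localhost:5000/")
    print("app is up" if ok else "app did not respond in time")
```

Run it after starting the container with `docker run -d -p 5000:5000 my-flask-app`; the `-d` flag runs the container in the background so the script can use the same terminal.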

Docker Compose and Container Orchestration

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application's services, networks, and volumes, and then create and start all the services from your configuration with a single command.

Example docker-compose.yml:

```yaml
version: "3"  # obsolete in the Compose Specification; current Docker Compose ignores it

services:
  web:
    build: .
    ports:
      - "5000:5000"
  redis:
    image: "redis:alpine"
```

The docker-compose.yml file above defines two services: web and redis.

The web service builds the Dockerfile in the current directory and maps port 5000 on the host to port 5000 in the container, allowing you to access the Flask app via http://localhost:5000. The redis service uses a pre-built Redis image from Docker Hub, illustrating how easy it is to integrate third-party services into your application.

To start your application, run `docker compose up` in the same directory as your docker-compose.yml file (with the older standalone Compose V1 tool, the command is `docker-compose up`).
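Compose places the services of a project on a shared default network on which each service is reachable by its service name. Code running in the `web` container can therefore connect to Redis at host `redis` on port 6379, Redis's default port. The sketch below assumes the redis-py client is installed in the image (for example by changing the Dockerfile step to `RUN pip install flask redis`); the `REDIS_HOST` and `REDIS_PORT` variables and the `hits` key are hypothetical names chosen for illustration.

```python
"""Hypothetical helper for the `web` service: count page hits in the `redis` service."""
from __future__ import annotations

import os
from typing import Protocol


class Counter(Protocol):
    """Anything with a Redis-style incr(); a redis.Redis client satisfies this."""

    def incr(self, name: str) -> int: ...


def redis_address() -> tuple[str, int]:
    # On the Compose network the service name "redis" resolves to the Redis container.
    # REDIS_HOST / REDIS_PORT are illustrative overrides, not variables Compose sets.
    host = os.environ.get("REDIS_HOST", "redis")
    port = int(os.environ.get("REDIS_PORT", "6379"))
    return host, port


def make_redis_client() -> Counter:
    import redis  # redis-py; must be installed in the image (pip install redis)

    host, port = redis_address()
    return redis.Redis(host=host, port=port)


def record_hit(client: Counter) -> int:
    # INCR is atomic in Redis, so concurrent requests do not lose counts.
    return client.incr("hits")
```

A Flask app could create one client at startup with `make_redis_client()` and call `record_hit` from a view. Passing the client in as a parameter keeps `record_hit` testable without a running Redis server.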

Example Scenario: E-commerce Application

Imagine you're developing an e-commerce platform consisting of several services: a web application for the storefront, a product catalog service, an order management system, and a payment processing service. Each of these components can be encapsulated in its own container and managed through Docker Compose.

Using Docker Compose, you can link these services together, manage their configurations, and ensure they're isolated yet able to communicate as required. For example, your docker-compose.yml file would define each of these services, their dependencies (like databases or caching services), and how they communicate over defined networks.

This setup simplifies development, as developers can replicate the production environment on their local machines with a single command: `docker compose up`. It also streamlines CI/CD pipelines, because the same Docker Compose configurations can be reused across development, testing, and production environments, ensuring consistency and reducing "it works on my machine" problems.

Best Practices for Development with Docker

Incorporating Docker into your development workflow can greatly enhance the efficiency, security, and maintainability of your Dockerized applications. Here are some refined best practices to consider:

- Use `.dockerignore` files: Similar to `.gitignore`, a `.dockerignore` file keeps unnecessary files (such as temporary files, sensitive data, and development tools) out of the build context and therefore out of your image, resulting in cleaner builds and smaller images.

- Minimize the number of layers: Only the `RUN`, `COPY`, and `ADD` instructions create layers, so combine related commands where possible, for example by chaining installation and cleanup steps into a single `RUN` instruction with `&&`. Files deleted in a later layer still take up space in the earlier layer that added them, so this can noticeably reduce image size.

- Use multi-stage builds: Multi-stage builds allow you to separate the build-time environment from the runtime environment. Compile or build your application in a temporary stage with all necessary tools and then copy just the output to a slimmer production image. This approach reduces the final image size and decreases the surface area for potential security vulnerabilities.

- Leverage the build cache: Order your Dockerfile so that Docker can reuse cached layers. Put instructions that change often (like copying source code) after instructions that change rarely (like installing packages), because a change invalidates the cache for that instruction and every instruction after it.

- Optimize for security: Prefer official images and pin specific tags. Regularly scan your images for vulnerabilities with tools such as Docker Scout (the successor to the deprecated `docker scan` command), and avoid running containers as root to reduce risk. User namespaces add a further layer of isolation.

- Keep your images up to date: Ensure that you regularly update the base images in your Dockerfiles to include the latest security patches. This practice is essential for maintaining the security and reliability of your applications.

- Utilize environment variables for configuration: Externalize your configurations using environment variables rather than hardcoding them into your images. This approach not only enhances security by keeping sensitive data out of the image but also increases the flexibility of your containers, as it allows you to change behavior without the need for a rebuild.

- Embrace orchestration for complex applications: For applications that depend on multiple containers (like those using microservices architectures), leverage orchestration tools such as Docker Compose for development and Kubernetes for production. These tools facilitate managing container lifecycles, networking, scaling, and service discovery, making it easier to handle complex applications.
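For the Python example used throughout this tutorial, the environment-variable practice could look like the following sketch. The variable names `DATABASE_URL`, `APP_DEBUG`, and `APP_PORT` and the `Settings` fields are hypothetical; the point is that the secret arrives from the environment at run time, and a missing value fails fast at startup instead of surfacing later.

```python
"""Hypothetical settings loader: configuration comes from environment variables."""
from __future__ import annotations

import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str  # secret: supplied at run time, never baked into the image
    debug: bool = False
    port: int = 5000


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from `env`; raise if a required variable is missing."""
    try:
        database_url = env["DATABASE_URL"]
    except KeyError:
        raise RuntimeError(
            "DATABASE_URL is not set; pass it with `docker run -e` or an env file"
        ) from None
    return Settings(
        database_url=database_url,
        debug=env.get("APP_DEBUG", "false").lower() in {"1", "true", "yes"},
        port=int(env.get("APP_PORT", "5000")),
    )
```

The values are then supplied when the container starts, for example with `docker run -e DATABASE_URL=<value> -p 5000:5000 my-flask-app`, with `--env-file`, or with the `environment:` key in a Compose file, so the same image works in every environment without a rebuild.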

Implementing these practices can significantly improve the development, deployment, and operation of your Dockerized applications, making your development workflow more efficient and secure.

Real-World Applications

Docker's utility in real-world applications is vast and varied, touching upon multiple aspects of software development, deployment, and operation. It greatly facilitates the implementation of microservices architectures by encapsulating each service in its own container, thereby ensuring that each microservice has its isolated dependencies and environment. This encapsulation supports scalable and manageable architectures, allowing individual services to be updated, deployed, and scaled independently.

Furthermore, Docker plays a crucial role in Continuous Integration/Continuous Deployment (CI/CD) pipelines. By leveraging Docker, teams can ensure that applications are deployed consistently across all environments—from development to production. This consistency is achieved by using Docker containers to create uniform environments across different stages of the development lifecycle, integrating seamlessly with tools like Jenkins and GitHub Actions.

In development and testing, Docker enables teams to create environments that precisely mirror production settings, thereby facilitating early detection of environment-specific issues. This capability is invaluable in preventing the common problem of discrepancies between development, testing, and production environments, often summarized by the phrase "it works on my machine."

Moreover, Docker aids in configuration management by providing consistent runtime environments, thus addressing challenges related to environment disparity. The use of containers also supports rapid and scalable deployment strategies, which are essential for meeting the demands for high availability and scalability in modern applications. With tools like Kubernetes for orchestration, Docker enables rapid deployment cycles and efficient scaling, catering to the dynamic needs of contemporary software development.

The Future of Cloud Computing with Docker

Docker is likely to remain an important player as cloud computing evolves. Raj (2023) identifies several trends for the years ahead: growing enterprise adoption driven by Docker's efficiency in handling multi-cloud deployments, more advanced security features for data protection and compliance, and deeper integration with cloud technologies such as Kubernetes. Because many companies adopt multi-cloud strategies to avoid vendor lock-in and gain flexibility, portable containerization technology like Docker's is a natural fit for them. Raj also expects further innovation, such as improvements to containers themselves and more cloud integrations, that will help the industry advance and businesses stay competitive (Raj, 2023).

In short, bringing Docker into cloud environments marks a significant shift in how companies build, manage, and scale applications. By combining Docker with the rich set of container services that cloud providers offer, businesses can achieve new levels of efficiency and agility in their operations.

Conclusion

Docker's role in modern software development extends beyond simplifying the creation and deployment of applications. It is a catalyst for innovation, enabling more efficient development practices, supporting the adoption of microservices architectures, and enhancing the reliability of software across different environments. By mastering Docker, developers and operations teams can break down barriers, reduce development and deployment times, and focus on delivering value. Whether you are developing complex multi-container applications, working on a microservices architecture, or simply looking for a consistent development environment, Docker provides the tools and flexibility needed to meet these challenges head-on. Embracing Docker in your development workflow not only streamlines the process but also opens up new possibilities for collaboration, efficiency, and scalability in any project.

Sources

- Docker Official Documentation: docs.docker.com

- Docker Compose Overview: Docker Compose

- Best Practices for Writing Dockerfiles: Dockerfile Best Practices

- Docker and Microservices: A Match Made in Heaven: Microservices with Docker

- Integrating Docker with CI/CD Pipelines: CI/CD with Docker

- Docker Hub: The World’s Largest Library and Community for Container Images: Docker Hub

- Understanding Docker Networking Drivers and their use cases: Docker Networking

- Containers vs VMs: NetApp Blog

- AWS. (n.d.). Containers - overview of Amazon Web Services. https://docs.aws.amazon.com/whitepapers/latest/aws-overview/containers.html

- Ohri, A. (2021, February 23). Docker in cloud computing - A simple overview (2021). UNext. https://u-next.com/blogs/cloud-computing/docker-in-cloud-computing/

- Raj, V. (2023, May 6). The Future of Cloud Computing with docker: Predictions and trends for 2023 and beyond. Medium. https://vishalraj1.medium.com/the-future-of-cloud-computing-with-docker-predictions-and-trends-for-2023-and-beyond-22033d92646e

- Shenoy, P. (2023, June 1). Top 5 Docker Cloud Service Providers you should know about. Cloud Consulting Company: AWS, Azure and GCP Services. https://opsio.in/resource/blog/top-docker-cloud-service-providers-know/