Reading Notes - Cyber-JL/Wiki GitHub Wiki

Def: an object is a collection of data and associated behaviors.

For example, we can assume that apples go in barrels and oranges go in baskets. Now, we have four kinds of objects: apples, oranges, baskets, and barrels. In object-oriented modeling, the term used for kind of object is class. So, in technical terms, we now have four classes of objects.

What's the difference between an object and a class? Classes describe objects. They are like blueprints for creating an object. You might have three oranges sitting on the table in front of you. Each orange is a distinct object, but all three have the attributes and behaviors associated with one class: the general class of oranges.

The relationship between the four classes of objects in our inventory system can be described using a Unified Modeling Language (invariably referred to as UML).

an Orange is somehow associated with a Basket and that an Apple is also somehow associated with a Barrel. Association is the most basic way for two classes to be related.

Data typically represents the individual characteristics of a certain object. A class can define specific sets of characteristics that are shared by all objects of that class. Any specific object can have different data values for the given characteristics.

Attributes are frequently referred to as members or properties.

Attribute types are often primitives that are standard to most programming languages, such as integer, floating-point number, string, byte, or Boolean. However, they can also represent data structures such as lists, trees, or graphs, or most notably, other classes. This is one area where the design stage can overlap with the programming stage.

Behaviors are actions that can occur on an object. The behaviors that can be performed on a specific class of objects are called methods. At the programming level, methods are like functions in structured programming, but they magically have access to all the data associated with this object. Like functions, methods can also accept parameters and return values.

Parameters to a method are a list of objects that need to be passed into the method that is being called (the objects that are passed in from the calling object are usually referred to as arguments). These objects are used by the method to perform whatever behavior or task it is meant to do. Returned values are the results of that task.

Adding models and methods to individual objects allows us to create a system of interacting objects. Each object in the system is a member of a certain class. These classes specify what types of data the object can hold and what methods can be invoked on it. The data in each object can be in a different state from other objects of the same class, and each object may react to method calls differently because of the differences in state.

The key purpose of modeling an object in object-oriented design is to determine what the public interface of that object will be. The interface is the collection of attributes and methods that other objects can use to interact with that object.

This process of hiding the implementation, or functional details, of an object, is suitably called information hiding. It is also sometimes referred to as encapsulation, but encapsulation is actually a more all-encompassing term. Encapsulated data is not necessarily hidden.

Abstraction (the process of encapsulating information with separate public and private interfaces. The private interfaces can be subject to information hiding.) is another object-oriented concept related to encapsulation and information hiding. Simply put, abstraction means dealing with the level of detail that is most appropriate to a given task. It is the process of extracting a public interface from the inner details.

Example: A driver of a car needs to interact with steering, gas pedal, and brakes. The workings of the motor, drive train, and brake subsystem doesn't matter to the driver. A mechanic, on the other hand, works at a different level of abstraction, tuning the engine and bleeding the breaks.

most design patterns rely on two basic object-oriented principles known as composition and inheritance.

Composition is the act of collecting several objects together to create a new one. Composition is usually a good choice when one object is part of another object.

ou could argue that pieces are not part of the chess set because you could replace the pieces in a chess set with a different set of pieces. While this is unlikely or impossible in a computerized version of chess, it introduces us to aggregation.

Aggregation is almost exactly like composition. The difference is that aggregate objects can exist independently. It would be impossible for a position to be associated with a different chess board, so we say the board is composed of positions. But the pieces, which might exist independently of the chess set, are said to be in an aggregate relationship with that set.

Another way to differentiate between aggregation and composition is to think about the lifespan of the object. If the composite (outside) object controls when the related (inside) objects are created and destroyed, composition is most suitable. If the related object is created independently of the composite object, or can outlast that object, an aggregate relationship makes more sense. Also, keep in mind that composition is aggregation; aggregation is simply a more general form of composition. Any composite relationship is also an aggregate relationship, but not vice versa.

The is a relationship is formed by inheritance. Inheritance is the most famous, well-known, and over-used relationship in object-oriented programming. Inheritance is sort of like a family tree.

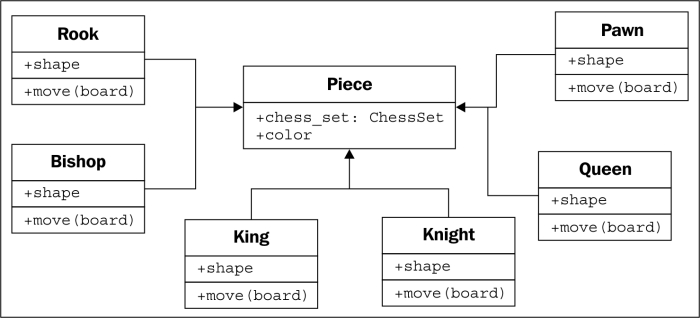

For example, there are 32 chess pieces in our chess set, but there are only six different types of pieces (pawns, rooks, bishops, knights, king, and queen), each of which behaves differently when it is moved. All of these classes of piece have properties, such as color and the chess set they are part of, but they also have unique shapes when drawn on the chess board, and make different moves. Let's see how the six types of pieces can inherit from a Piece class.

We actually know that all subclasses of the Piece class need to have a move method; otherwise, when the board tries to move the piece, it will get confused. It is possible that we would want to create a new version of the game of chess that has one additional piece (the wizard). Our current design allows us to design this piece without giving it a move method. The board would then choke when it asked the piece to move itself.

We can implement this by creating a dummy move method on the Piece class. The subclasses can then override this method with a more specific implementation. The default implementation might, for example, pop up an error message that says: That piece cannot be moved.

Overriding methods in subtypes allows very powerful object-oriented systems to be developed.

All we need to do is specify that the move method is required in any subclasses. This can be done by making Piece an abstract class with the move method declared abstract. Abstract methods basically say, "We demand this method exist in any non-abstract subclass, but we are declining to specify an implementation in this class."

Polymorphism is the ability to treat a class differently depending on which subclass is implemented.

Object-oriented design can also feature such multiple inheritance, which allows a subclass to inherit functionality from multiple parent classes. In practice, multiple inheritance can be a tricky business, and some programming languages (most notably, Java) strictly prohibit it. However, multiple inheritance can have its uses. Most often, it can be used to create objects that have two distinct sets of behaviors.

Classes in python look like:

class MyFirstClass:

pass

- class definition starts with

classfollowed by a name (of our choice) identifying the class and is terminated with a colon

We can set arbitrary attributes on an instantiated object using the dot notation:

class Point:

pass

p1 = Point()

p2 = Point()

p1.x = 5

p1.y = 4

p2.x = 3

p2.y = 6

print(p1.x, p1.y)

print(p2.x, p2.y)

returns:

5 4

3 6

This code creates an empty Point class with no data or behaviors. Then it creates two instances of that class and assigns each of those instances x and y coordinates to identify a point in two dimensions. All we need to do to assign a value to an attribute on an object is use the <object>.<attribute> = <value> syntax. This is sometimes referred to as dot notation.

Let's model a couple of actions on our Point class. We can start with a called reset that moves the point to the origin (the origin is the point where x and y are both zero).

class Point:

def reset(self):

self.x = 0

self.y = 0

p = Point()

p.reset()

print(p.x, p.y)

returns:

0 0

A method in Python is formatted identically to a function. It starts with the keyword def followed by a space and the name of the method. This is followed by a set of parentheses containing the parameter list (we'll discuss that self parameter in just a moment), and terminated with a colon. The next line is indented to contain the statements inside the method. These statements can be arbitrary Python code operating on the object itself and any parameters passed in as the method sees fit.

The one difference between methods and normal functions is that all methods have one required argument. This argument is conventionally named self.

The self argument to a method is simply a reference to the object that the method is being invoked on. We can access attributes and methods of that object as if it were any another object. This is exactly what we do inside the reset method when we set the x and y attributes of the self object.

Notice that when we call the p.reset() method, we do not have to pass the self argument into it. Python automatically takes care of this for us. It knows we're calling a method on the p object, so it automatically passes that object to the method.

We can include one that accepts another Point object as input and returns the distance between them:

import math

class Point:

def move(self, x, y):

self.x = x

self.y = y

def reset(self):

self.move(0, 0)

def calculate_distance(self, other_point):

return math.sqrt(

(self.x - other_point.x)**2 +

(self.y - other_point.y)**2)

# how to use it:

point1 = Point()

point2 = Point()

point1.reset()

point2.move(5,0)

print(point2.calculate_distance(point1))

assert (point2.calculate_distance(point1) ==

point1.calculate_distance(point2))

point1.move(3,4)

print(point1.calculate_distance(point2))

print(point1.calculate_distance(point1))

A lot has happened here. The class now has three methods. The move method accepts two arguments, x and y, and sets the values on the self object, much like the old reset method from the previous example. The old reset method now calls move, since a reset is just a move to a specific known location.

The calculate_distance method uses the not-too-complex Pythagorean theorem to calculate the distance between two points. I hope you understand the math (** means squared, and math.sqrt calculates a square root), but it's not a requirement for our current focus, learning how to write methods.

The sample code at the end of the preceding example shows how to call a method with arguments: simply include the arguments inside the parentheses, and use the same dot notation to access the method. The test code calls each method and prints the results on the console. The assert function is a simple test tool; the program will bail if the statement after assert is False (or zero, empty, or None). In this case, we use it to ensure that the distance is the same regardless of which point called the other point's calculate_distance method.

The Python initialization method is the same as any other method, except it has a special name, __init__. The leading and trailing double underscores mean this is a special method that the Python interpreter will treat as a special case.

Let's start with an initialization function on our Point class that requires the user to supply x and y coordinates when the Point object is instantiated:

class Point:

def __init__(self, x, y):

self.move(x, y)

def move(self, x, y):

self.x = x

self.y = y

def reset(self):

self.move(0, 0)

# Constructing a Point

point = Point(3, 5)

print(point.x, point.y)

Now, the point can never go without a y coordinate. If we try to construct a point without including the proper initialization parameters, it will fail with a not enough arguments error similar to the one we received earlier when we forgot the self argument.

What if we don't want to make those two arguments required?

use the same syntax Python functions use to provide default arguments. The keyword argument syntax appends an equals sign after each variable name. If the calling object does not provide this argument, then the default argument is used instead. The variables will still be available to the function, but they will have the values specified in the argument list. Here's an example:

class Point:

def __init__(self, x=0, y=0):

self.move(x, y)

The constructor function is called __new__ as opposed to __init__, and accepts exactly one argument; the class that is being constructed (it is called before the object is constructed, so there is no self argument). It also has to return the newly created object. This has interesting possibilities when it comes to the complicated art of metaprogramming, but is not very useful in day-to-day programming. In practice, you will rarely, if ever, need to use __new__ and __init__ will be sufficient.

- Modules are simply Python files, nothing more. The single file in our small program is a module. Two Python files are two modules. If we have two files in the same folder, we can load a class from one module for use in the other module.

The import statement is used for importing modules or specific classes or functions from modules.

ex1.

import database

db = database.Database()

# Do queries on db

ex2.

from database import Database

db = Database()

# Do queries on db

ex3.

from database import Database as DB

db = DB()

# Do queries on db

ex4.

from database import Database, Query

A package is a collection of modules in a folder. The name of the package is the name of the folder. All we need to do to tell Python that a folder is a package is place a (normally empty) file in the folder named __init__.py. If we forget this file, we won't be able to import modules from that folder.

Let's put our modules inside an ecommerce package in our working folder, which will also contain a main.py file to start the program. Let's additionally add another package in the ecommerce package for various payment options. The folder hierarchy will look like this:

parent_directory/

main.py

ecommerce/

__init__.py

database.py

products.py

payments/

__init__.py

square.py

stripe.py

Absolute imports specify the complete path to the module, function, or path we want to import. If we need access to the Product class inside the products module, we could use any of these syntaxes to do an absolute import:

import ecommerce.products

product = ecommerce.products.Product()

or

from ecommerce.products import Product

product = Product()

or

from ecommerce import products

product = products.Product()

The import statements use the period operator to separate packages or modules.

Relative imports are basically a way of saying find a class, function, or module as it is positioned relative to the current module. For example, if we are working in the products module and we want to import the Database class from the database module next to it, we could use a relative import:

from .database import Database

The period in front of database says "use the database module inside the current package".

If we were editing the paypal module inside the ecommerce.payments package, we would want to say "use the database package inside the parent package" instead. This is easily done with two periods, as shown here:

from ..database import Database

We can use more periods to go further up the hierarchy. Of course, we can also go down one side and back up the other. We don't have a deep enough example hierarchy to illustrate this properly, but the following would be a valid import if we had an ecommerce.contact package containing an email module and wanted to import the send_mail function into our paypal module:

from ..contact.email import send_mail

Inside any one module, we can specify variables, classes, or functions. They can be a handy way to store the global state without namespace conflicts. For example, we have been importing the Database class into various modules and then instantiating it, but it might make more sense to have only one database object globally available from the database module. The database module might look like this:

class Database:

# the database implementation

pass

database = Database()

Then we can use any of the import methods we've discussed to access the `database object, for example:

from ecommerce.database import database

A problem with the preceding class is that the database object is created immediately when the module is first imported, which is usually when the program starts up. This isn't always ideal since connecting to a database can take a while, slowing down startup, or the database connection information may not yet be available. We could delay creating the database until it is actually needed by calling an initialize_database function to create the module-level variable:

class Database:

# the database implementation

pass

database = None

def initialize_database():

global database

database = Database()

The global keyword tells Python that the database variable inside initialize_database is the module level one we just defined. If we had not specified the variable as global, Python would have created a new local variable that would be discarded when the method exits, leaving the module-level value unchanged.

As these two examples illustrate, all module-level code is executed immediately at the time it is imported. However, if it is inside a method or function, the function will be created, but its internal code will not be executed until the function is called. This can be a tricky thing for scripts (such as the main script in our e-commerce example) that perform execution. Often, we will write a program that does something useful, and then later find that we want to import a function or class from that module in a different program. However, as soon as we import it, any code at the module level is immediately executed. If we are not careful, we can end up running the first program when we really only meant to access a couple functions inside that module.

To solve this, we should always put our startup code in a function (conventionally, called main) and only execute that function when we know we are running the module as a script, but not when our code is being imported from a different script. But how do we know this?

class UsefulClass:

'''This class might be useful to other modules.'''

pass

def main():

'''creates a useful class and does something with it for our module.'''

useful = UsefulClass()

print(useful)

if __name__ == "__main__":

main()

prefix it with a double underscore, __. This will perform name mangling on the attribute in question. This basically means that the method can still be called by outside objects if they really want to do it, but it requires extra work and is a strong indicator that you demand that your attribute remains private. For example:

class SecretString:

'''A not-at-all secure way to store a secret string.'''

def __init__(self, plain_string, pass_phrase):

self.__plain_string = plain_string

self.__pass_phrase = pass_phrase

def decrypt(self, pass_phrase):

'''Only show the string if the pass_phrase is correct.'''

if pass_phrase == self.__pass_phrase:

return self.__plain_string

else:

return ''

All python classes are subclasses of the special class named object. This class allows python to treat all objects the same way.

If we don't explicitly inherit from, a different class, our classes will automatically inherit from object. However, we can openly state that our class derives from object.

class MySubClass(object):

pass

This is an example of inheritance.

If we don't explicitly provide a different superclass, python automatically inherits from object. A superclass/parent class is a class that is being inherited from. A subclass is a class that is inheriting from a superclass. The superclass is object, and MySubClass is the subclass.

The simplest use of inheritance is to add functionality to an existing class.start with a simple contact manager that tracks the name and e-mail address of several people. The contact class is responsible for maintaining a list of all contacts in a class variable, and for initializing the name and address for an individual contact:

class Contact:

all_contacts = []

def __init__(self, name, email):

self.name = name

self.email = email

Contact.all_contacts.append(self)

This example introduces us to class variables. The all_contacts list, because it is part of the class definition, is shared by all instances of this class. This means that there is only one Contact.all_contacts list, which we can access as Contact.all_contacts. Less obviously, we can also access it as self.all_contacts on any object instantiated from Contact. If the field can't be found on the object, then it will be found on the class and thus refer to the same single list.

This is a simple class that allows us to track a couple of pieces of data about each contact. But what if some of our contacts are also suppliers that we need to order supplies from? We could add an order method to the Contact class, but that would allow people to accidentally order things from contacts who are customers or family friends. Instead, let's create a new Supplier class that acts like our Contact class, but has an additional order method:

class Supplier(Contact):

def order(self, order):

print("If this were a real system we would send "

"'{}' order to '{}'".format(order, self.name))

An interesting use of inheritance is adding functionality to built-in classes. In the Contact class seen earlier, we are adding contacts to a list of all contacts. What if we also wanted to search that list by name? Well, we could add a method on the Contact class to search it, but it feels like this method actually belongs to the list itself. We can do this using inheritance:

class ContactList(list):

def search(self, name):

'''Return all contacts that contain the search value

in their name.'''

matching_contacts = []

for contact in self:

if name in contact.name:

matching_contacts.append(contact)

return matching_contacts

class Contact:

all_contacts = ContactList()

def __init__(self, name, email):

self.name = name

self.email = email

self.all_contacts.append(self)

Instead of instantiating a normal list as our class variable, we create a new ContactList class that extends the built-in list. Then, we instantiate this subclass as our all_contacts list. We can test the new search functionality as follows:

>>> c1 = Contact("John A", "[email protected]")

>>> c2 = Contact("John B", "[email protected]")

>>> c3 = Contact("Jenna C", "[email protected]")

>>> [c.name for c in Contact.all_contacts.search('John')]

['John A', 'John B']

To change the built-in syntax [] into something we can inherit from we creating an empty list with [] is actually a shorthand for creating an empty list using list(); the two syntaxes behave identically:

>>> [] == list()

True

In reality, the [] syntax is actually so-called syntax sugar that calls the list() constructor under the hood. The list data type is a class that we can extend. In fact, the list itself extends the object class:

>>> isinstance([], object)

True

We can extend the dict class, which is, similar to the list, the class that is constructed when using the {} syntax shorthand:

class LongNameDict(dict):

def longest_key(self):

longest = None

for key in self:

if not longest or len(key) > len(longest):

longest = key

return longest

Most built-in types can be similarly extended. Commonly extended built-ins are object, list, set, dict, file, and str. Numerical types such as int and float are also occasionally inherited from.

Our contact class allows only a name and an e-mail address. This may be sufficient for most contacts, but what if we want to add a phone number for our close friends? we can do this easily by just setting a phone attribute on the contact after it is constructed. But if we want to make this third variable available on initialization, we have to override init. Overriding means altering or replacing a method of the superclass with a new method (with the same name) in the subclass. No special syntax is needed to do this; the subclass's newly created method is automatically called instead of the superclass's method. For example:

class Friend(Contact):

def __init__(self, name, email, phone):

self.name = name

self.email = email

self.phone = phone

Any method can be overridden, not just init. Before we go on, however, we need to address some problems in this example. Our Contact and Friend classes have duplicate code to set up the name and email properties; this can make code maintenance complicated as we have to update the code in two or more places. More alarmingly, our Friend class is neglecting to add itself to the all_contacts list we have created on the Contact class.

What we really need is a way to execute the original init method on the Contact class. This is what the super function does; it returns the object as an instance of the parent class, allowing us to call the parent method directly:

class Friend(Contact):

def __init__(self, name, email, phone):

super().__init__(name, email)

self.phone = phone

This example first gets the instance of the parent object using super, and calls init on that object, passing in the expected arguments. It then does its own initialization, namely, setting the phone attribute.

A super() call can be made inside any method, not just init. This means all methods can be modified via overriding and calls to super. The call to super can also be made at any point in the method; we don't have to make the call as the first line in the method. For example, we may need to manipulate or validate incoming parameters before forwarding them to the superclass.

Multiple inheritance is a touchy subject. In principle, it's very simple: a subclass that inherits from more than one parent class is able to access functionality from both of them. In practice, this is less useful than it sounds and many expert programmers recommend against using it.

The simplest and most useful form of multiple inheritance is called a mixin. A mixin is generally a superclass that is not meant to exist on its own, but is meant to be inherited by some other class to provide extra functionality. For example, let's say we wanted to add functionality to our Contact class that allows sending an e-mail to self.email. Sending e-mail is a common task that we might want to use on many other classes. So, we can write a simple mixin class to do the e-mailing for us:

class MailSender:

def send_mail(self, message):

print("Sending mail to " + self.email)

# Add e-mail logic here

This class doesn't do anything special (in fact, it can barely function as a standalone class), but it does allow us to define a new class that describes both a Contact and a MailSender, using multiple inheritance:

class EmailableContact(Contact, MailSender):

pass

The Contact initializer is still adding the new contact to the all_contacts list, and the mixin is able to send mail to self.email so we know everything is working.

Multiple inheritance works all right when mixing methods from different classes, but it gets very messy when we have to call methods on the superclass. There are multiple superclasses. How do we know which one to call? How do we know what order to call them in?

Inheritance is also a viable solution, and that's what we want to explore. Let's add a new class that holds an address. We'll call this new class "AddressHolder" instead of "Address" because inheritance defines an is a relationship. It is not correct to say a "Friend" is an "Address" , but since a friend can have an "Address" , we can argue that a "Friend" is an "AddressHolder". Later, we could create other entities (companies, buildings) that also hold addresses. Here's our AddressHolder class:

class AddressHolder:

def __init__(self, street, city, state, code):

self.street = street

self.city = city

self.state = state

self.code = code

We can use multiple inheritance to add this new class as a parent of our existing Friend class. The tricky part is that we now have two parent init methods both of which need to be initialized. And they need to be initialized with different arguments. How do we do this? Well, we could start with a naive approach:

class Friend(Contact, AddressHolder):

def __init__(

self, name, email, phone,street, city, state, code):

Contact.__init__(self, name, email)

AddressHolder.__init__(self, street, city, state, code)

self.phone = phone

we directly call the init function on each of the superclasses and explicitly pass the self argument. This example technically works; we can access the different variables directly on the class. But there are a few problems.

First, it is possible for a superclass to go uninitialized if we neglect to explicitly call the initializer. This could cause hard-to-debug program crashes in common scenarios.

Second, and more sinister, is the possibility of a superclass being called multiple times because of the organization of the class hierarchy. Look at this inheritance diagram:

The init method from the Friend class first calls init on Contact, which implicitly initializes the object superclass (remember, all classes derive from object). Friend then calls init on AddressHolder, which implicitly initializes the object superclass again. This means the parent class has been set up twice. With the object class, that's relatively harmless, but in some situations, it could spell disaster. Imagine trying to connect to a database twice for every request!

Let's look at a second contrived example that illustrates this problem more clearly. Here we have a base class that has a method named call_me. Two subclasses override that method, and then another subclass extends both of these using multiple inheritance. This is called diamond inheritance because of the diamond shape of the class diagram:

Let's convert this diagram to code; this example shows when the methods are called:

class BaseClass:

num_base_calls = 0

def call_me(self):

print("Calling method on Base Class")

self.num_base_calls += 1

class LeftSubclass(BaseClass):

num_left_calls = 0

def call_me(self):

BaseClass.call_me(self)

print("Calling method on Left Subclass")

self.num_left_calls += 1

class RightSubclass(BaseClass):

num_right_calls = 0

def call_me(self):

BaseClass.call_me(self)

print("Calling method on Right Subclass")

self.num_right_calls += 1

class Subclass(LeftSubclass, RightSubclass):

num_sub_calls = 0

def call_me(self):

LeftSubclass.call_me(self)

RightSubclass.call_me(self)

print("Calling method on Subclass")

self.num_sub_calls += 1

This example simply ensures that each overridden call_me method directly calls the parent method with the same name. It lets us know each time a method is called by printing the information to the screen. It also updates a static variable on the class to show how many times it has been called. If we instantiate one Subclass object and call the method on it once, we get this output:

>>> s = Subclass()

>>> s.call_me()

Calling method on Base Class

Calling method on Left Subclass

Calling method on Base Class

Calling method on Right Subclass

Calling method on Subclass

>>> print(

... s.num_sub_calls,

... s.num_left_calls,

... s.num_right_calls,

... s.num_base_calls)

1 1 1 2

Thus we can clearly see the base class's call_me method being called twice. This could lead to some insidious bugs if that method is doing actual work—like depositing into a bank account—twice.

The thing to keep in mind with multiple inheritance is that we only want to call the "next" method in the class hierarchy, not the "parent" method. In fact, that next method may not be on a parent or ancestor of the current class. The super keyword comes to our rescue once again. Indeed, super was originally developed to make complicated forms of multiple inheritance possible. Here is the same code written using super:

class BaseClass:

num_base_calls = 0

def call_me(self):

print("Calling method on Base Class")

self.num_base_calls += 1

class LeftSubclass(BaseClass):

num_left_calls = 0

def call_me(self):

super().call_me()

print("Calling method on Left Subclass")

self.num_left_calls += 1

class RightSubclass(BaseClass):

num_right_calls = 0

def call_me(self):

super().call_me()

print("Calling method on Right Subclass")

self.num_right_calls += 1

class Subclass(LeftSubclass, RightSubclass):

num_sub_calls = 0

def call_me(self):

super().call_me()

print("Calling method on Subclass")

self.num_sub_calls += 1

The change is pretty minor; we simply replaced the naive direct calls with calls to super(), although the bottom subclass only calls super once rather than having to make the calls for both the left and right. The change is simple enough, but look at the difference when we execute it:

>>> s = Subclass()

>>> s.call_me()

Calling method on Base Class

Calling method on Left Subclass

Calling method on Base Class

Calling method on Right Subclass

Calling method on Subclass

>>> print(s.num_sub_calls, s.num_left_calls, s.num_right_calls, s.num_base_calls)

1 1 1 1

Looks good, our base method is only being called once. But what is super() actually doing here? Since the print statements are executed after the super calls, the printed output is in the order each method is actually executed. Let's look at the output from back to front to see who is calling what.

First, call_me of Subclass calls super().call_me(), which happens to refer to LeftSubclass.call_me(). The LeftSubclass.call_me() method then calls super().call_me(), but in this case, super() is referring to RightSubclass.call_me().

Pay particular attention to this: the super call is not calling the method on the superclass of LeftSubclass (which is BaseClass). Rather, it is calling RightSubclass, even though it is not a direct parent of LeftSubclass! This is the next method, not the parent method. RightSubclass then calls BaseClass and the super calls have ensured each method in the class hierarchy is executed once.

In the init method for Friend, we were originally calling init for both parent classes, with different sets of arguments:

Contact.__init__(self, name, email)

AddressHolder.__init__(self, street, city, state, code)

How can we manage different sets of arguments when using super? We don't necessarily know which class super is going to try to initialize first. Even if we did, we need a way to pass the "extra" arguments so that subsequent calls to super, on other subclasses, receive the right arguments. Specifically, if the first call to super passes the name and email arguments to Contact.init, and Contact.init then calls super, it needs to be able to pass the address-related arguments to the "next" method, which is AddressHolder.init.

This is a problem whenever we want to call superclass methods with the same name, but with different sets of arguments. Most often, the only time you would want to call a superclass with a completely different set of arguments is in init, as we're doing here. Even with regular methods, though, we may want to add optional parameters that only make sense to one subclass or set of subclasses.

Sadly, the only way to solve this problem is to plan for it from the beginning. We have to design our base class parameter lists to accept keyword arguments for any parameters that are not required by every subclass implementation. Finally, we must ensure the method freely accepts unexpected arguments and passes them on to its super call, in case they are necessary to later methods in the inheritance order.

Python's function parameter syntax provides all the tools we need to do this, but it makes the overall code look cumbersome. Have a look at the proper version of the Friend multiple inheritance code:

class Contact:

all_contacts = []

def __init__(self, name='', email='', **kwargs):

super().__init__(**kwargs)

self.name = name

self.email = email

self.all_contacts.append(self)

class AddressHolder:

def __init__(self, street='', city='', state='', code='', **kwargs):

super().__init__(**kwargs)

self.street = street

self.city = city

self.state = state

self.code = code

class Friend(Contact, AddressHolder):

def __init__(self, phone='', **kwargs):

super().__init__(**kwargs)

self.phone = phone

We've changed all arguments to keyword arguments by giving them an empty string as a default value. We've also ensured that a **kwargs parameter is included to capture any additional parameters that our particular method doesn't know what to do with. It passes these parameters up to the next class with the super call.

It is a fancy name describing a simple concept: different behaviors happen depending on which subclass is being used, without having to explicitly know what the subclass actually is. As an example, imagine a program that plays audio files. A media player might need to load an AudioFile object and then play it. We'd put a play() method on the object, which is responsible for decompressing or extracting the audio and routing it to the sound card and speakers. The act of playing an AudioFile could feasibly be as simple as:

audio_file.play()

We can use inheritance with polymorphism to simplify the design. Each type of file can be represented by a different subclass of AudioFile, for example, WavFile, MP3File. Each of these would have a play() method, but that method would be implemented differently for each file to ensure the correct extraction procedure is followed. The media player object would never need to know which subclass of AudioFile it is referring to; it just calls play() and polymorphically lets the object take care of the actual details of playing. Let's look at a quick skeleton showing how this might look:

class AudioFile:

def __init__(self, filename):

if not filename.endswith(self.ext):

raise Exception("Invalid file format")

self.filename = filename

class MP3File(AudioFile):

ext = "mp3"

def play(self):

print("playing {} as mp3".format(self.filename))

class WavFile(AudioFile):

ext = "wav"

def play(self):

print("playing {} as wav".format(self.filename))

class OggFile(AudioFile):

ext = "ogg"

def play(self):

print("playing {} as ogg".format(self.filename))

All audio files check to ensure that a valid extension was given upon initialization. But did you notice how the init method in the parent class is able to access the ext class variable from different subclasses? That's polymorphism at work. If the filename doesn't end with the correct name, it raises an exception (exceptions will be covered in detail in the next chapter). The fact that AudioFile doesn't actually store a reference to the ext variable doesn't stop it from being able to access it on the subclass.

In addition, each subclass of AudioFile implements play() in a different way (this example doesn't actually play the music; audio compression algorithms really deserve a separate book!). This is also polymorphism in action. The media player can use the exact same code to play a file, no matter what type it is; it doesn't care what subclass of AudioFile it is looking at. The details of decompressing the audio file are encapsulated.

While duck typing is useful, it is not always easy to tell in advance if a class is going to fulfill the protocol you require. Therefore, Python introduced the idea of abstract base classes. Abstract base classes, or ABCs, define a set of methods and properties that a class must implement in order to be considered a duck-type instance of that class. The class can extend the abstract base class itself in order to be used as an instance of that class, but it must supply all the appropriate methods.

In practice, it's rarely necessary to create new abstract base classes, but we may find occasions to implement instances of existing ABCs. We'll cover implementing ABCs first, and then briefly see how to create your own if you should ever need to.

Most of the abstract base classes that exist in the Python Standard Library live in the collections module. One of the simplest ones is the Container class. Let's inspect it in the Python interpreter to see what methods this class requires:

>>> from collections import Container

>>> Container.__abstractmethods__

frozenset(['__contains__'])

So, the Container class has exactly one abstract method that needs to be implemented, contains. You can issue help(Container.contains) to see what the function signature should look like:

Help on method __contains__ in module _abcoll:__contains__(self, x) unbound _abcoll.Container method

So, we see that contains needs to take a single argument. Unfortunately, the help file doesn't tell us much about what that argument should be, but it's pretty obvious from the name of the ABC and the single method it implements that this argument is the value the user is checking to see if the container holds.

This method is implemented by list, str, and dict to indicate whether or not a given value is in that data structure. However, we can also define a silly container that tells us whether a given value is in the set of odd integers:

class OddContainer:

def __contains__(self, x):

if not isinstance(x, int) or not x % 2:

return False

return True

Now, we can instantiate an OddContainer object and determine that, even though we did not extend Container, the class is a Container object:

>>> from collections import Container

>>> odd_container = OddContainer()

>>> isinstance(odd_container, Container)

True

>>> issubclass(OddContainer, Container)

True

And that is why duck typing is way more awesome than classical polymorphism. We can create is a relationships without the overhead of using inheritance (or worse, multiple inheritance).

It's not necessary to have an abstract base class to enable duck typing. However, imagine we were creating a media player with third-party plugins. It is advisable to create an abstract base class in this case to document what API the third-party plugins should provide. The abc module provides the tools you need to do this, but I'll warn you in advance, this requires some of Python's most arcane concepts:

import abc

class MediaLoader(metaclass=abc.ABCMeta):

@abc.abstractmethod

def play(self):

pass

@abc.abstractproperty

def ext(self):

pass

@classmethod

def __subclasshook__(cls, C):

if cls is MediaLoader:

attrs = set(dir(C))

if set(cls.__abstractmethods__) <= attrs:

return True

return NotImplemented

The first weird thing is the metaclass keyword argument that is passed into the class where you would normally see the list of parent classes. This is a rarely used construct from the mystic art of metaclass programming. We won't be covering metaclasses in this book, so all you need to know is that by assigning the ABCMeta metaclass, you are giving your class superpower (or at least superclass) abilities.

Next, we see the @abc.abstractmethod and @abc.abstractproperty constructs. These are Python decorators. For now, just know that by marking a method or property as being abstract, you are stating that any subclass of this class must implement that method or supply that property in order to be considered a proper member of the class.

See what happens if you implement subclasses that do or don't supply those properties:

>>> class Wav(MediaLoader):

... pass

...

>>> x = Wav()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

TypeError: Can't instantiate abstract class Wav with abstract methods ext, play

>>> class Ogg(MediaLoader):

... ext = '.ogg'

... def play(self):

... pass

...

>>> o = Ogg()

Since the Wav class fails to implement the abstract attributes, it is not possible to instantiate that class. The class is still a legal abstract class, but you'd have to subclass it to actually do anything. The Ogg class supplies both attributes, so it instantiates cleanly.

Going back to the MediaLoader ABC, let's dissect that subclasshook method. It is basically saying that any class that supplies concrete implementations of all the abstract attributes of this ABC should be considered a subclass of MediaLoader, even if it doesn't actually inherit from the MediaLoader class.

@classmethod

def __subclasshook__(cls, C):

if cls is MediaLoader:

attrs = set(dir(C))

if set(cls.__abstractmethods__) <= attrs:

return True

return NotImplemented

@classmethod

This decorator marks the method as a class method. It essentially says that the method can be called on a class instead of an instantiated object:

def __subclasshook__(cls, C):

This defines the subclasshook class method. This special method is called by the Python interpreter to answer the question, Is the class C a subclass of this class?

if cls is MediaLoader:

We check to see if the method was called specifically on this class, rather than, say a subclass of this class. This prevents, for example, the Wav class from being thought of as a parent class of the Ogg class:

attrs = set(dir(C))

All this line does is get the set of methods and properties that the class has, including any parent classes in its class hierarchy:

if set(cls.__abstractmethods__) <= attrs:

This line uses set notation to see whether the set of abstract methods in this class have been supplied in the candidate class. Note that it doesn't check to see whether the methods have been implemented, just if they are there. Thus, it's possible for a class to be a subclass and yet still be an abstract class itself.

return True

If all the abstract methods have been supplied, then the candidate class is a subclass of this class and we return True. The method can legally return one of the three values: True, False, or NotImplemented. True and False indicate that the class is or is not definitively a subclass of this class:

return NotImplemented

If any of the conditionals have not been met (that is, the class is not MediaLoader or not all abstract methods have been supplied), then return NotImplemented. This tells the Python machinery to use the default mechanism (does the candidate class explicitly extend this class?) for subclass detection.

In principle, an exception is just an object. The one thing they all have in common is that they inherit from a built-in class called BaseException. These exception objects become special when they are handled inside the program's flow of control. When an exception occurs, everything that was supposed to happen doesn't happen, unless it was supposed to happen when an exception occurred.

For example, any time Python encounters a line in your program that it can't understand, it bails with SyntaxError, which is a type of exception. Here's a common one:

>>> print "hello world"

File "<stdin>", line 1

print "hello world"

^

SyntaxError: invalid syntax

This print statement was a valid command in Python 2 and previous versions, but in Python 3, because print is now a function, we have to enclose the arguments in parenthesis. So, if we type the preceding command into a Python 3 interpreter, we get the SyntaxError.

In addition to SyntaxError, some other common exceptions,are shown in the following example:

>>> x = 5 / 0

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ZeroDivisionError: int division or modulo by zero

>>> lst = [1,2,3]

>>> print(lst[3])

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

IndexError: list index out of range

>>> lst + 2

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

TypeError: can only concatenate list (not "int") to list

>>> lst.add

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

AttributeError: 'list' object has no attribute 'add'

>>> d = {'a': 'hello'}

>>> d['b']

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

KeyError: 'b'

>>> print(this_is_not_a_var)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

NameError: name 'this_is_not_a_var' is not defined

Sometimes these exceptions are indicators of something wrong in our program (in which case we would go to the indicated line number and fix it), but they also occur in legitimate situations. A ZeroDivisionError doesn't always mean we received an invalid input. It could also mean we have received a different input. The user may have entered a zero by mistake, or on purpose, or it may represent a legitimate value, such as an empty bank account or the age of a newborn child.

You may have noticed all the preceding built-in exceptions end with the name Error. In Python, the words error and exception are used almost interchangeably. Errors are sometimes considered more dire than exceptions, but they are dealt with in exactly the same way. Indeed, all the error classes in the preceding example have Exception (which extends BaseException) as their superclass.

class EvenOnly(list):

def append(self, integer):

if not isinstance(integer, int):

raise TypeError("Only integers can be added")

if integer % 2:

raise ValueError("Only even numbers can be added")

super().append(integer)

This class extends the list built-in. Objects in Python, and overrides the append method to check two conditions that ensure the item is an even integer. We first check if the input is an instance of the int type, and then use the modulus operator to ensure it is divisible by two. If either of the two conditions is not met, the raise keyword causes an exception to occur. The raise keyword is simply followed by the object being raised as an exception. In the preceding example, two objects are newly constructed from the built-in classes TypeError and ValueError.

>>> e = EvenOnly()

>>> e.append("a string")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "even_integers.py", line 7, in add

raise TypeError("Only integers can be added")

TypeError: Only integers can be added

>>> e.append(3)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "even_integers.py", line 9, in add

raise ValueError("Only even numbers can be added")

ValueError: Only even numbers can be added

>>> e.append(2)

Any lines that were supposed to run after the exception is raised are not executed, and unless the exception is dealt with, the program will exit with an error message. Take a look at this simple function:

def no_return():

print("I am about to raise an exception")

raise Exception("This is always raised")

print("This line will never execute")

return "I won't be returned"

If we execute this function, we see that the first print call is executed and then the exception is raised. The second print statement is never executed, and the return statement never executes either:

>>> no_return()

I am about to raise an exception

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "exception_quits.py", line 3, in no_return

raise Exception("This is always raised")

Exception: This is always raised

Furthermore, if we have a function that calls another function that raises an exception, nothing will be executed in the first function after the point where the second function was called. Raising an exception stops all execution right up through the function call stack until it is either handled or forces the interpreter to exit.

def call_exceptor():

print("call_exceptor starts here...")

no_return()

print("an exception was raised...")

print("...so these lines don't run")

When we call this function, we see that the first print statement executes, as well as the first line in the no_return function. But once the exception is raised, nothing else executes:

>>> call_exceptor()

call_exceptor starts here...

I am about to raise an exception

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "method_calls_excepting.py", line 9, in call_exceptor

no_return()

File "method_calls_excepting.py", line 3, in no_return

raise Exception("This is always raised")

Exception: This is always raised

We'll soon see that when the interpreter is not actually taking a shortcut and exiting immediately, we can react to and deal with the exception inside either method. Indeed, exceptions can be handled at any level after they are initially raised.

Look at the exception's output (called a traceback) from bottom to top, and notice how both methods are listed. Inside no_return, the exception is initially raised. Then, just above that, we see that inside call_exceptor, that pesky no_return function was called and the exception bubbled up to the calling method. From there, it went up one more level to the main interpreter, which, not knowing what else to do with it, gave up and printed a traceback.

Now let's look at the tail side of the exception coin. If we encounter an exception situation, how should our code react to or recover from it? We handle exceptions by wrapping any code that might throw one (whether it is exception code itself, or a call to any function or method that may have an exception raised inside it) inside a try...except clause. The most basic syntax looks like this:

try: no_return() except: print("I caught an exception") print("executed after the exception") If we run this simple script using our existing no_return function, which as we know very well, always throws an exception, we get this output:

I am about to raise an exception I caught an exception executed after the exception The no_return function happily informs us that it is about to raise an exception, but we fooled it and caught the exception. Once caught, we were able to clean up after ourselves (in this case, by outputting that we were handling the situation), and continue on our way, with no interference from that offensive function. The remainder of the code in the no_return function still went unexecuted, but the code that called the function was able to recover and continue.

Note the indentation around try and except. The try clause wraps any code that might throw an exception. The except clause is then back on the same indentation level as the try line. Any code to handle the exception is indented after the except clause. Then normal code resumes at the original indentation level.

The problem with the preceding code is that it will catch any type of exception. What if we were writing some code that could raise both a TypeError and a ZeroDivisionError? We might want to catch the ZeroDivisionError, but let the TypeError propagate to the console. Can you guess the syntax?

Here's a rather silly function that does just that:

def funny_division(divider): try: return 100 / divider except ZeroDivisionError: return "Zero is not a good idea!"

print(funny_division(0)) print(funny_division(50.0)) print(funny_division("hello")) The function is tested with print statements that show it behaving as expected:

Zero is not a good idea! 2.0 Traceback (most recent call last): File "catch_specific_exception.py", line 9, in print(funny_division("hello")) File "catch_specific_exception.py", line 3, in funny_division return 100 / anumber TypeError: unsupported operand type(s) for /: 'int' and 'str'. The first line of output shows that if we enter 0, we get properly mocked. If we call with a valid number (note that it's not an integer, but it's still a valid divisor), it operates correctly. Yet if we enter a string (you were wondering how to get a TypeError, weren't you?), it fails with an exception. If we had used an empty except clause that didn't specify a ZeroDivisionError, it would have accused us of dividing by zero when we sent it a string, which is not a proper behavior at all.

We can even catch two or more different exceptions and handle them with the same code. Here's an example that raises three different types of exception. It handles TypeError and ZeroDivisionError with the same exception handler, but it may also raise a ValueError if you supply the number 13:

def funny_division2(anumber): try: if anumber == 13: raise ValueError("13 is an unlucky number") return 100 / anumber except (ZeroDivisionError, TypeError): return "Enter a number other than zero"

for val in (0, "hello", 50.0, 13):

print("Testing {}:".format(val), end=" ")

print(funny_division2(val))

The for loop at the bottom loops over several test inputs and prints the results. If you're wondering about that end argument in the print statement, it just turns the default trailing newline into a space so that it's joined with the output from the next line. Here's a run of the program:

Testing 0: Enter a number other than zero Testing hello: Enter a number other than zero Testing 50.0: 2.0 Testing 13: Traceback (most recent call last): File "catch_multiple_exceptions.py", line 11, in print(funny_division2(val)) File "catch_multiple_exceptions.py", line 4, in funny_division2 raise ValueError("13 is an unlucky number") ValueError: 13 is an unlucky number The number 0 and the string are both caught by the except clause, and a suitable error message is printed. The exception from the number 13 is not caught because it is a ValueError, which was not included in the types of exceptions being handled. This is all well and good, but what if we want to catch different exceptions and do different things with them? Or maybe we want to do something with an exception and then allow it to continue to bubble up to the parent function, as if it had never been caught? We don't need any new syntax to deal with these cases. It's possible to stack except clauses, and only the first match will be executed. For the second question, the raise keyword, with no arguments, will reraise the last exception if we're already inside an exception handler. Observe in the following code:

def funny_division3(anumber): try: if anumber == 13: raise ValueError("13 is an unlucky number") return 100 / anumber except ZeroDivisionError: return "Enter a number other than zero" except TypeError: return "Enter a numerical value" except ValueError: print("No, No, not 13!") raise The last line reraises the ValueError, so after outputting No, No, not 13!, it will raise the exception again; we'll still get the original stack trace on the console.

If we stack exception clauses like we did in the preceding example, only the first matching clause will be run, even if more than one of them fits. How can more than one clause match? Remember that exceptions are objects, and can therefore be subclassed. As we'll see in the next section, most exceptions extend the Exception class (which is itself derived from BaseException). If we catch Exception before we catch TypeError, then only the Exception handler will be executed, because TypeError is an Exception by inheritance.

This can come in handy in cases where we want to handle some exceptions specifically, and then handle all remaining exceptions as a more general case. We can simply catch Exception after catching all the specific exceptions and handle the general case there.

Sometimes, when we catch an exception, we need a reference to the Exception object itself. This most often happens when we define our own exceptions with custom arguments, but can also be relevant with standard exceptions. Most exception classes accept a set of arguments in their constructor, and we might want to access those attributes in the exception handler. If we define our own exception class, we can even call custom methods on it when we catch it. The syntax for capturing an exception as a variable uses the as keyword:

try: raise ValueError("This is an argument") except ValueError as e: print("The exception arguments were", e.args) If we run this simple snippet, it prints out the string argument that we passed into ValueError upon initialization.

We've seen several variations on the syntax for handling exceptions, but we still don't know how to execute code regardless of whether or not an exception has occurred. We also can't specify code that should be executed only if an exception does not occur. Two more keywords, finally and else, can provide the missing pieces. Neither one takes any extra arguments. The following example randomly picks an exception to throw and raises it. Then some not-so-complicated exception handling code is run that illustrates the newly introduced syntax:

import random some_exceptions = [ValueError, TypeError, IndexError, None]

try: choice = random.choice(some_exceptions) print("raising {}".format(choice)) if choice: raise choice("An error") except ValueError: print("Caught a ValueError") except TypeError: print("Caught a TypeError") except Exception as e: print("Caught some other error: %s" % ( e.class.name)) else: print("This code called if there is no exception") finally: print("This cleanup code is always called") If we run this example—which illustrates almost every conceivable exception handling scenario—a few times, we'll get different output each time, depending on which exception random chooses. Here are some example runs:

$ python finally_and_else.py raising None This code called if there is no exception This cleanup code is always called

$ python finally_and_else.py raising <class 'TypeError'> Caught a TypeError This cleanup code is always called

$ python finally_and_else.py raising <class 'IndexError'> Caught some other error: IndexError This cleanup code is always called

$ python finally_and_else.py raising <class 'ValueError'> Caught a ValueError This cleanup code is always called Note how the print statement in the finally clause is executed no matter what happens. This is extremely useful when we need to perform certain tasks after our code has finished running (even if an exception has occurred). Some common examples include:

Cleaning up an open database connection Closing an open file Sending a closing handshake over the network The finally clause is also very important when we execute a return statement from inside a try clause. The finally handle will still be executed before the value is returned.

Also, pay attention to the output when no exception is raised: both the else and the finally clauses are executed. The else clause may seem redundant, as the code that should be executed only when no exception is raised could just be placed after the entire try...except block. The difference is that the else block will still be executed if an exception is caught and handled. We'll see more on this when we discuss using exceptions as flow control later.

Any of the except, else, and finally clauses can be omitted after a try block (although else by itself is invalid). If you include more than one, the except clauses must come first, then the else clause, with the finally clause at the end. The order of the except clauses normally goes from most specific to most generic.

We've already seen several of the most common built-in exceptions, and you'll probably encounter the rest over the course of your regular Python development. As we noticed earlier, most exceptions are subclasses of the Exception class. But this is not true of all exceptions. Exception itself actually inherits from a class called BaseException. In fact, all exceptions must extend the BaseException class or one of its subclasses.

There are two key exceptions, SystemExit and KeyboardInterrupt, that derive directly from BaseException instead of Exception. The SystemExit exception is raised whenever the program exits naturally, typically because we called the sys.exit function somewhere in our code (for example, when the user selected an exit menu item, clicked the "close" button on a window, or entered a command to shut down a server). The exception is designed to allow us to clean up code before the program ultimately exits, so we generally don't need to handle it explicitly (because cleanup code happens inside a finally clause).

If we do handle it, we would normally reraise the exception, since catching it would stop the program from exiting. There are, of course, situations where we might want to stop the program exiting, for example, if there are unsaved changes and we want to prompt the user if they really want to exit. Usually, if we handle SystemExit at all, it's because we want to do something special with it, or are anticipating it directly. We especially don't want it to be accidentally caught in generic clauses that catch all normal exceptions. This is why it derives directly from BaseException.

The KeyboardInterrupt exception is common in command-line programs. It is thrown when the user explicitly interrupts program execution with an OS-dependent key combination (normally, Ctrl + C). This is a standard way for the user to deliberately interrupt a running program, and like SystemExit, it should almost always respond by terminating the program. Also, like SystemExit, it should handle any cleanup tasks inside finally blocks.

Here is a class diagram that fully illustrates the exception hierarchy:

The exception hierarchy When we use the except: clause without specifying any type of exception, it will catch all subclasses of BaseException; which is to say, it will catch all exceptions, including the two special ones. Since we almost always want these to get special treatment, it is unwise to use the except: statement without arguments. If you want to catch all exceptions other than SystemExit and KeyboardInterrupt, explicitly catch Exception.

Furthermore, if you do want to catch all exceptions, I suggest using the syntax except BaseException: instead of a raw except:. This helps explicitly tell future readers of your code that you are intentionally handling the special case exceptions.

Often, when we want to raise an exception, we find that none of the built-in exceptions are suitable. Luckily, it's trivial to define new exceptions of our own. The name of the class is usually designed to communicate what went wrong, and we can provide arbitrary arguments in the initializer to include additional information.

All we have to do is inherit from the Exception class. We don't even have to add any content to the class! We can, of course, extend BaseException directly, but then it will not be caught by generic except Exception clauses.

Here's a simple exception we might use in a banking application:

class InvalidWithdrawal(Exception): pass

raise InvalidWithdrawal("You don't have $50 in your account") The last line illustrates how to raise the newly defined exception. We are able to pass an arbitrary number of arguments into the exception. Often a string message is used, but any object that might be useful in a later exception handler can be stored. The Exception.init method is designed to accept any arguments and store them as a tuple in an attribute named args. This makes exceptions easier to define without needing to override init.

Of course, if we do want to customize the initializer, we are free to do so. Here's an exception whose initializer accepts the current balance and the amount the user wanted to withdraw. In addition, it adds a method to calculate how overdrawn the request was:

class InvalidWithdrawal(Exception): def init(self, balance, amount): super().init("account doesn't have ${}".format( amount)) self.amount = amount self.balance = balance

def overage(self):

return self.amount - self.balance

raise InvalidWithdrawal(25, 50) The raise statement at the end illustrates how to construct this exception. As you can see, we can do anything with an exception that we would do with other objects. We could catch an exception and pass it around as a working object, although it is more common to include a reference to the working object as an attribute on an exception and pass that around instead.

Here's how we would handle an InvalidWithdrawal exception if one was raised:

try: raise InvalidWithdrawal(25, 50) except InvalidWithdrawal as e: print("I'm sorry, but your withdrawal is " "more than your balance by " "${}".format(e.overage())) Here we see a valid use of the as keyword. By convention, most Python coders name the exception variable e, although, as usual, you are free to call it ex, exception, or aunt_sally if you prefer.

There are many reasons for defining our own exceptions. It is often useful to add information to the exception or log it in some way. But the utility of custom exceptions truly comes to light when creating a framework, library, or API that is intended for access by other programmers. In that case, be careful to ensure your code is raising exceptions that make sense to the client programmer. They should be easy to handle and clearly describe what went on. The client programmer should easily see how to fix the error (if it reflects a bug in their code) or handle the exception (if it's a situation they need to be made aware of).

Exceptions aren't exceptional. Novice programmers tend to think of exceptions as only useful for exceptional circumstances. However, the definition of exceptional circumstances can be vague and subject to interpretation. Consider the following two functions:

def divide_with_exception(number, divisor): try: print("{} / {} = {}".format( number, divisor, number / divisor * 1.0)) except ZeroDivisionError: print("You can't divide by zero")

def divide_with_if(number, divisor): if divisor == 0: print("You can't divide by zero") else: print("{} / {} = {}".format( number, divisor, number / divisor * 1.0))

These two functions behave identically. If divisor is zero, an error message is printed; otherwise, a message printing the result of division is displayed. We could avoid a ZeroDivisionError ever being thrown by testing for it with an if statement. Similarly, we can avoid an IndexError by explicitly checking whether or not the parameter is within the confines of the list, and a KeyError by checking if the key is in a dictionary.

But we shouldn't do this. For one thing, we might write an if statement that checks whether or not the index is lower than the parameters of the list, but forget to check negative values.

Eventually, we would discover this and have to find all the places where we were checking code. But if we had simply caught the IndexError and handled it, our code would just work.

Python programmers tend to follow a model of Ask forgiveness rather than permission, which is to say, they execute code and then deal with anything that goes wrong. The alternative, to look before you leap, is generally frowned upon. There are a few reasons for this, but the main one is that it shouldn't be necessary to burn CPU cycles looking for an unusual situation that is not going to arise in the normal path through the code. Therefore, it is wise to use exceptions for exceptional circumstances, even if those circumstances are only a little bit exceptional. Taking this argument further, we can actually see that the exception syntax is also effective for flow control. Like an if statement, exceptions can be used for decision making, branching, and message passing.

Imagine an inventory application for a company that sells widgets and gadgets. When a customer makes a purchase, the item can either be available, in which case the item is removed from inventory and the number of items left is returned, or it might be out of stock. Now, being out of stock is a perfectly normal thing to happen in an inventory application. It is certainly not an exceptional circumstance. But what do we return if it's out of stock? A string saying out of stock? A negative number? In both cases, the calling method would have to check whether the return value is a positive integer or something else, to determine if it is out of stock. That seems a bit messy. Instead, we can raise OutOfStockException and use the try statement to direct program flow control. Make sense? In addition, we want to make sure we don't sell the same item to two different customers, or sell an item that isn't in stock yet. One way to facilitate this is to lock each type of item to ensure only one person can update it at a time. The user must lock the item, manipulate the item (purchase, add stock, count items left…), and then unlock the item. Here's an incomplete Inventory example with docstrings that describes what some of the methods should do:

class Inventory: def lock(self, item_type): '''Select the type of item that is going to be manipulated. This method will lock the item so nobody else can manipulate the inventory until it's returned. This prevents selling the same item to two different customers.''' pass

def unlock(self, item_type):

'''Release the given type so that other

customers can access it.'''

pass

def purchase(self, item_type):

'''If the item is not locked, raise an

exception. If the item_type does not exist,

raise an exception. If the item is currently

out of stock, raise an exception. If the item

is available, subtract one item and return

the number of items left.'''

pass

We could hand this object prototype to a developer and have them implement the methods to do exactly as they say while we work on the code that needs to make a purchase. We'll use Python's robust exception handling to consider different branches, depending on how the purchase was made:

item_type = 'widget' inv = Inventory() inv.lock(item_type) try: num_left = inv.purchase(item_type) except InvalidItemType: print("Sorry, we don't sell {}".format(item_type)) except OutOfStock: print("Sorry, that item is out of stock.") else: print("Purchase complete. There are " "{} {}s left".format(num_left, item_type)) finally: inv.unlock(item_type) Pay attention to how all the possible exception handling clauses are used to ensure the correct actions happen at the correct time. Even though OutOfStock is not a terribly exceptional circumstance, we are able to use an exception to handle it suitably. This same code could be written with an if...elif...else structure, but it wouldn't be as easy to read or maintain.

We can also use exceptions to pass messages between different methods. For example, if we wanted to inform the customer as to what date the item is expected to be in stock again, we could ensure our OutOfStock object requires a back_in_stock parameter when it is constructed. Then, when we handle the exception, we can check that value and provide additional information to the customer. The information attached to the object can be easily passed between two different parts of the program. The exception could even provide a method that instructs the inventory object to reorder or backorder an item.

Using exceptions for flow control can make for some handy program designs. The important thing to take from this discussion is that exceptions are not a bad thing that we should try to avoid. Having an exception occur does not mean that you should have prevented this exceptional circumstance from happening. Rather, it is just a powerful way to communicate information between two sections of code that may not be directly calling each other.

An object, however, has both data and behavior. There is no reason to add an extra level of abstraction if it doesn't help organize our code. On the other hand, the "obvious" need is not always self-evident.