AWS Graviton - AshokBhat/notes GitHub Wiki

Graviton series

- First-generation - a1

- Second-generation - m6g, c6g, r6g

- Third-generation - c7g

Graviton series

| Area | Graviton A1 | Graviton 2 | Graviton 3 |
|---|---|---|---|
| Instances | a1 | m6g, c6g, r6g | c7g |
| vCPUs | 16 | 64 | 64 |
| Process | 16nm | 7nm | 5nm |
| Transistors | ~5 billion | ~30 billion | ~55 billion |
| Core | [[Cortex A72]] | [[Neoverse N1]] | Neoverse V1 |
| Memory channels | | 8 DDR4-3200 channels | 8 DDR5-4800 channels |
| L2 cache per core | 0.5MB? | 1MB | ? |
| L3 cache | | 32MB | ? |
| PCIe lanes | | 64 PCIe4 | PCIe5 |
| EBS bandwidth | 3.5Gbps | 18Gbps | |
| Networking bandwidth | 10Gbps | 25Gbps | |
| Memory | up to 32GB | up to 512GB | |
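
The memory-channel entries in the table can be turned into a rough peak-bandwidth figure. The sketch below is only illustrative: it assumes each channel is 64 bits wide and that every transfer moves a full 8 bytes, so the results are theoretical upper bounds, not measured throughput. The function name `peak_bandwidth_gbs` is hypothetical.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Assumption: each channel is `bus_width_bits` wide (64 by default).
    """
    bytes_per_transfer = bus_width_bits / 8
    # MT/s * bytes per transfer = MB/s per channel; divide by 1000 for GB/s
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


# The two memory configurations listed in the table
configs = {
    "8 x DDR4-3200": (8, 3200),
    "8 x DDR5-4800": (8, 4800),
}

for name, (channels, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(channels, rate):.1f} GB/s")
# 8 x DDR4-3200: 204.8 GB/s
# 8 x DDR5-4800: 307.2 GB/s
```

Under these assumptions, moving from DDR4-3200 to DDR5-4800 at the same channel count gives a 1.5x increase in peak bandwidth.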

Performance comparison

| Area | Graviton A1 | Graviton 2 |
|---|---|---|
| Overall | 1x | 7x |
| Per core | 1x | 2x |
| Memory | 1x | 5x |

Graviton2 - EC2 instances

- m6g - For general purpose

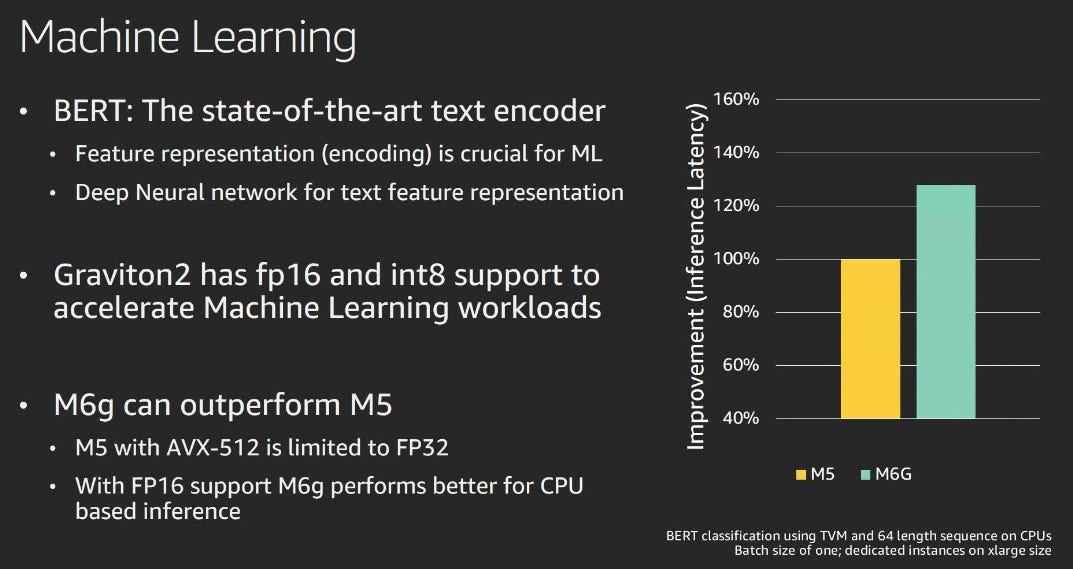

- c6g - For compute-optimized workloads - HPC, Machine learning inference

- r6g - For memory-optimized workloads

- t4g - For burstable general-purpose workloads

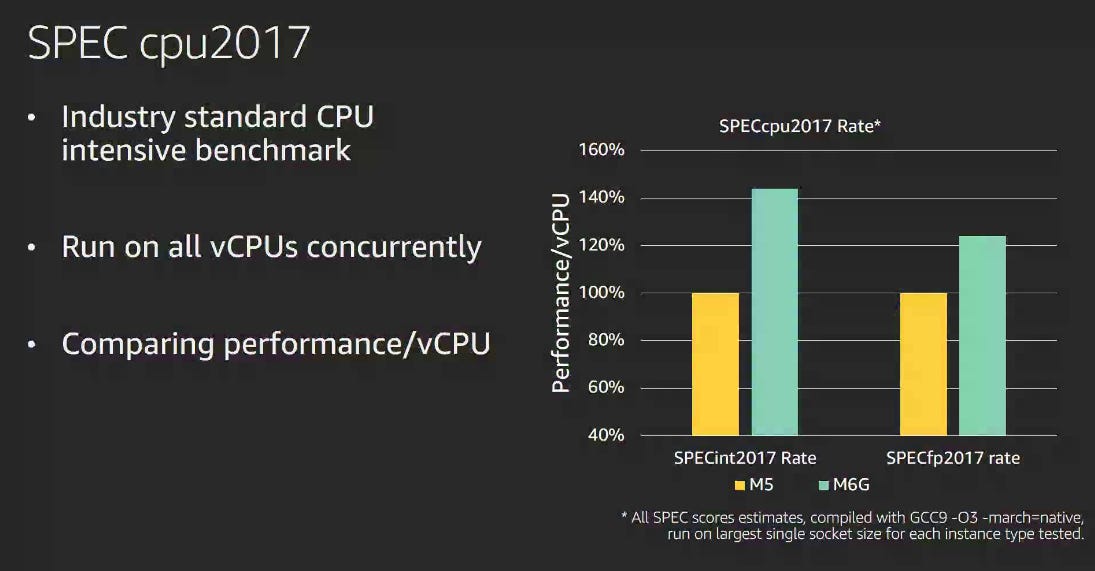

Graviton2 - Launch slides

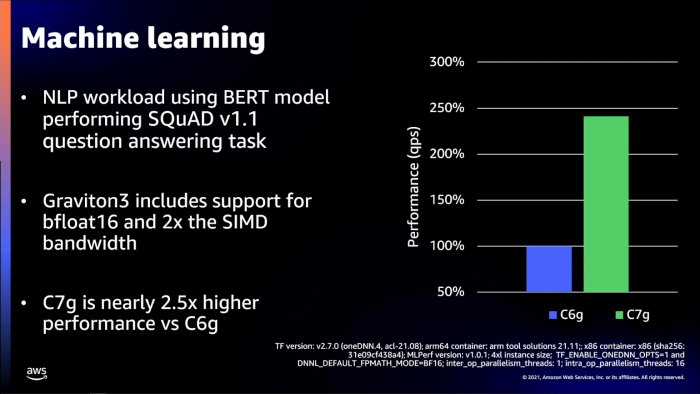

Graviton3 - Launch slides

See also

- [[AWS SageMaker]] | [[AWS Lambda]]

- [[Amazon EC2]] | [[AWS Elastic Inference]]

- [[AWS Graviton]] | [[AWS Inferentia]]

Other CPU vendors

- x86 CPU: [[Intel]] | [[AMD]]

- [[Arm]] CPU: [[AWS]] | [[Marvell]] | [[Fujitsu]] | Ampere