activation function - AshokBhat/ml GitHub Wiki

- Non-linear function applied to a neuron's weighted input to produce its output

output = f(input × weight + bias)

- Significance: Introduces non-linearity into the network, which allows it to learn more complex patterns in the data.
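A minimal sketch of the formula above for a single neuron, in plain Python. The names `neuron_output`, `relu`, and `sigmoid`, as well as the sample inputs, weights, and bias, are illustrative assumptions and do not come from any particular library.

```python
import math


def relu(z):
    # ReLU: passes positive values through, clamps negatives to 0.
    return max(0.0, z)


def sigmoid(z):
    # Sigmoid: squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation):
    # output = f(input x weight + bias), with f = activation.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical example values: z = 1.0*0.5 + 2.0*(-1.0) + 0.5 = -1.0
inputs = [1.0, 2.0]
weights = [0.5, -1.0]
bias = 0.5

relu_out = neuron_output(inputs, weights, bias, relu)
sigmoid_out = neuron_output(inputs, weights, bias, sigmoid)
print(relu_out, sigmoid_out)
```

The same weighted sum gives different outputs depending on which activation function `f` is plugged in.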

Is an activation function non-linear?

- Yes, the commonly used activation functions are non-linear

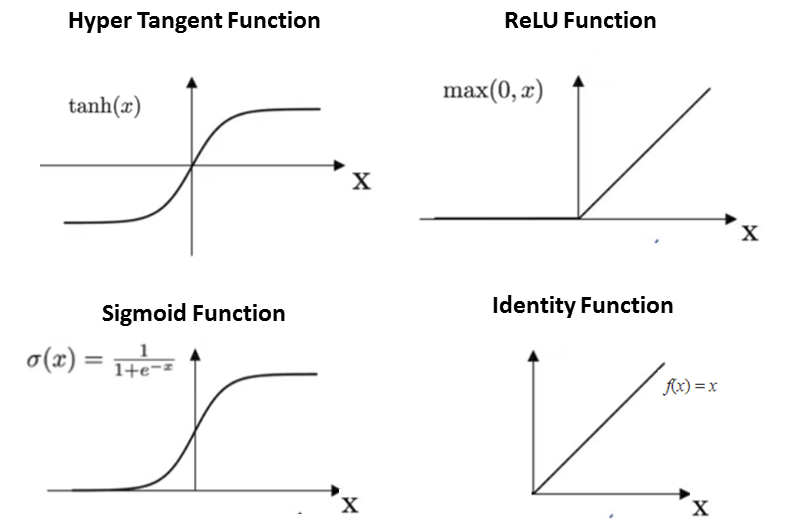
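One way to see why the non-linearity matters: without it, stacked layers reduce to a single linear function. The sketch below is only an illustration with hypothetical scalar weights (2, 1, 3, -4). It compares a two-layer computation against the single line `6x - 1` that the two layers collapse to when the activation is the identity.

```python
def relu(z):
    # ReLU: a non-linear activation.
    return max(0.0, z)


def identity(z):
    # A linear "activation" that changes nothing.
    return z


def two_layers(x, activation):
    # Layer 1: 2x + 1, then the activation; layer 2: 3h - 4.
    h = activation(2.0 * x + 1.0)
    return 3.0 * h - 4.0


def single_linear(x):
    # 3 * (2x + 1) - 4 simplifies to 6x - 1.
    return 6.0 * x - 1.0


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]

# With the identity activation the two layers equal one linear layer.
linear_matches = all(two_layers(x, identity) == single_linear(x) for x in xs)

# With ReLU the two layers no longer equal that line.
relu_matches = all(two_layers(x, relu) == single_linear(x) for x in xs)

print(linear_matches, relu_matches)
```

With the identity activation, the extra layer adds no expressive power; with ReLU, the output bends at the point where the first layer's result crosses zero.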

- What are common activation functions?

- Neuron | Neural network

- Activation function : ReLU | Softmax | Sigmoid | Tanh
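The four activation functions listed above can be sketched in a few lines of plain Python. These are the standard textbook definitions, written for readability rather than numerical robustness; the function names are illustrative and not taken from a specific framework.

```python
import math


def relu(z):
    # ReLU(z) = max(0, z)
    return max(0.0, z)


def sigmoid(z):
    # Sigmoid(z) = 1 / (1 + e^(-z)), output in (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    # Tanh(z), output in (-1, 1)
    return math.tanh(z)


def softmax(zs):
    # Softmax turns a list of scores into probabilities that sum to 1.
    # Subtracting the max first avoids overflow in exp().
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


print(relu(-3.0), sigmoid(0.0), tanh(0.0), softmax([1.0, 2.0, 3.0]))
```

ReLU, Sigmoid, and Tanh act on one value at a time, while Softmax acts on a whole vector of scores, which is why it is typically used on the output layer of a classifier.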