ReLU - AshokBhat/ml GitHub Wiki

- Activation function

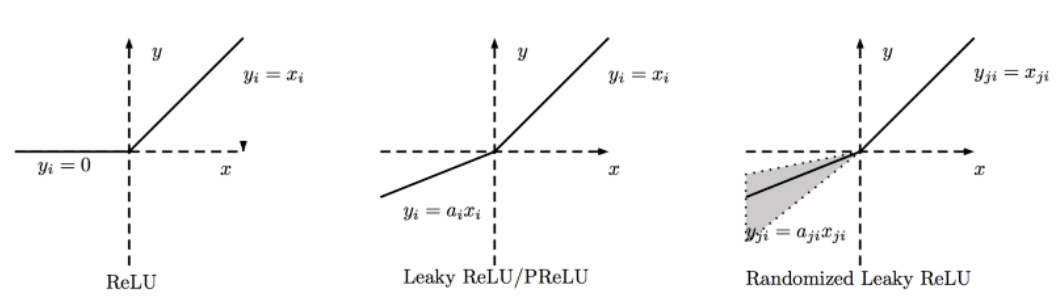

ReLU(x) = max(0, x)
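A minimal sketch of the formula above in plain Python. The names `relu` and `relu_all` are illustrative choices, not part of any library:

```python
def relu(x: float) -> float:
    """Return x for positive inputs and 0 otherwise."""
    return max(0.0, x)


def relu_all(values: list[float]) -> list[float]:
    """Apply ReLU element-wise to a list of numbers."""
    return [relu(v) for v in values]


print(relu_all([-2.0, -0.5, 0.0, 1.5, 3.0]))  # [0.0, 0.0, 0.0, 1.5, 3.0]
```

Negative inputs are clamped to 0, while positive inputs pass through unchanged.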

Is ReLU linear?

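- No, it is non-linear. It is linear on each side of 0 (piecewise linear), but the kink at 0 means ReLU(a + b) does not always equal ReLU(a) + ReLU(b).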
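One way to check this is to test additivity, which any linear function must satisfy. The sketch below uses a hypothetical helper `relu` and two hand-picked inputs:

```python
def relu(x: float) -> float:
    """ReLU(x) = max(0, x)."""
    return max(0.0, x)


a, b = -1.0, 1.0

# A linear function f would satisfy f(a + b) == f(a) + f(b).
sum_then_relu = relu(a + b)        # relu(0.0) = 0.0
relu_then_sum = relu(a) + relu(b)  # 0.0 + 1.0 = 1.0

print(sum_then_relu, relu_then_sum)  # 0.0 1.0
```

Because the two results differ, ReLU is not additive and therefore not linear.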

What are the advantages of ReLU?

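- Mitigates the vanishing gradient problem: the gradient is exactly 1 for every positive input, so it does not shrink as it is propagated back through many layers, unlike the saturating sigmoid and tanh.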

- Less computationally expensive than sigmoid or tanh: it needs only a comparison with 0 and no exponentials.
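The gradient behaviour can be illustrated with a rough sketch. It makes a strong simplifying assumption: weights are ignored and every layer sees the same positive pre-activation, so the backpropagated gradient is just the activation's derivative multiplied `depth` times. The helper names (`relu_grad`, `sigmoid_grad`, `chained_gradient`) are hypothetical.

```python
import math


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid, s(x) * (1 - s(x)); never larger than 0.25."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def chained_gradient(grad_fn, x: float, depth: int) -> float:
    """Multiply the activation derivative `depth` times (assumption: weights ignored)."""
    product = 1.0
    for _ in range(depth):
        product *= grad_fn(x)
    return product


relu_chain = chained_gradient(relu_grad, 2.0, 10)        # stays at 1.0
sigmoid_chain = chained_gradient(sigmoid_grad, 2.0, 10)  # shrinks toward 0

print(relu_chain, sigmoid_chain)
```

Under these assumptions the ReLU product stays at 1.0 for a positive input, while the sigmoid product shrinks by a factor of at most 0.25 per layer. The ReLU derivative is also a single comparison, whereas the sigmoid derivative needs an exponential.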

- Activation function : ReLU | Softmax | Sigmoid | Tanh