PE1100N - ASUS-IPC/ASUS-IPC GitHub Wiki

The PE1100N supports image flashing only from an Ubuntu host; flashing from Windows is not supported.

- System Requirement

• Linux Host Computer (x86 Ubuntu 18.04 and above)

• Micro USB cable

- Enter Force Recovery Mode

For PE1100N Box, the Flash Port is number ❷, and the Force Recovery Button is number ❹.

Please perform the following steps to force the PE1100N to enter force recovery mode:

[PE1100N]

- Power off the PE1100N and remove the power cable.

- Connect Host Computer and PE1100N Flash Port (number ❷) with Micro USB cable.

- Press and hold the Force Recovery Button (number ❹).

- Connect the power cable and Power ON the PE1100N.

- After 3s release the Force Recovery Button.

[Host Computer]

On the Linux host PC, open a terminal window and run the command lsusb. If the output contains the entry below that matches the Jetson SoM you use, the board is in force recovery mode.

• For Orin NX 16GB: 0955:7323 NVidia Corp

• For Orin NX 8GB: 0955:7423 NVidia Corp

• For Orin Nano 8GB: 0955:7523 NVidia Corp

• For Orin Nano 4GB: 0955:7623 NVidia Corp
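The check above can be scripted. The following is a minimal sketch, assuming the USB IDs listed above; the function name `recovery_module` is hypothetical and not part of any ASUS or NVIDIA tool.

```shell
# Map lsusb output to the Jetson module that is in force recovery mode.
# recovery_module is a hypothetical helper name; the USB IDs come from the list above.
recovery_module() {
    case "$1" in
        *0955:7323*) echo "Orin NX 16GB" ;;
        *0955:7423*) echo "Orin NX 8GB" ;;
        *0955:7523*) echo "Orin Nano 8GB" ;;
        *0955:7623*) echo "Orin Nano 4GB" ;;
        *) echo "no Jetson module in force recovery mode found" >&2; return 1 ;;
    esac
}
```

On the host, call it as `recovery_module "$(lsusb)"`. A non-zero exit status means none of the four IDs was found, so the force recovery steps should be repeated.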

[Host Computer]

- Extract BSP file on Host Computer.

BSP file example :

| System | File Name |

|---|---|

| PE1100N Orin Nano 4GB | PE1100N Orin Nano 4GB JetPack x.x.x Image Vx.x.x |

| PE1100N Orin Nano 8GB | PE1100N Orin Nano 8GB JetPack x.x.x Image Vx.x.x |

| PE1100N Orin NX 16GB | PE1100N Orin NX 16GB JetPack x.x.x Image Vx.x.x |

sudo tar xvpf PE1100N_JXANS_Orin-NX-16GB_JetPack-ssd-5.1.1_L4T-35.3.1_v0.1.3-debug-20230503.tar.gz

- Change folder

cd mfi_PE110xxxxxxxx

sudo ./tools/kernel_flash/l4t_initrd_flash.sh --erase-all --flash-only --showlogs --network usb0

- Flashing the image takes around 15 minutes.

[PE1100N]

After 15 minutes, PE1100N will auto reboot.

NOTE :

- Do not use a USB Hub between Host Computer and PE1100N.

- You can follow the progress of the flashing process in “mfi_PE1100N-orin/initrdlog”.

- Installation Prerequisites

$ sudo apt update

$ sudo apt install -y apt-utils bc build-essential cpio curl \

device-tree-compiler expect gawk gdisk git kmod liblz4-tool libssl-dev \

locales parted python python3 qemu-user-static rsync \

software-properties-common sudo time tzdata udev unzip wget zip \

nfs-kernel-server uuid-runtime

- Download and prepare the Linux_for_Tegra source code

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v3.0/release/jetson_linux_r36.3.0_aarch64.tbz2

$ tar xf jetson_linux_r36.3.0_aarch64.tbz2

- Download and prepare sample root file system

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v3.0/release/tegra_linux_sample-root-filesystem_r36.3.0_aarch64.tbz2

$ sudo tar xpf tegra_linux_sample-root-filesystem_r36.3.0_aarch64.tbz2 -C Linux_for_Tegra/rootfs/

- Sync the source code for compiling

$ cd Linux_for_Tegra/source/

$ ./source_sync.sh -t jetson_36.3

If source_sync.sh cannot connect to nv-tegra.nvidia.com (see the reference below), please wait for NVIDIA to provide a fix.

reference: https://forums.developer.nvidia.com/t/unable-to-connect-to-nv-tegra-nvidia-com/334353

- Download the patch file for PE1100N

https://drive.google.com/file/d/12wBWT0YSfkDncw0JbIJu2hYsKAeRnzTu/view?usp=sharing

- Overwrite the original source code with the patch files

$ cd ../..

# Copy the PE1100N_r3630_patch.tar.gz to this folder.

$ tar zxf PE1100N_r3630_patch.tar.gz

$ sudo cp -r PE1100N_r3630_patch/Linux_for_Tegra/* Linux_for_Tegra/

For Nano 4G only

$ sudo cp PE1100N_r3630_patch/nano_4g_patch/chip_info.bin_bak Linux_for_Tegra/bootloader

- Apply necessary changes to rootfs

$ cd Linux_for_Tegra

$ sudo ./apply_binaries.sh

- Download and install the toolchain

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v3.0/toolchain/aarch64--glibc--stable-2022.08-1.tar.bz2

$ sudo tar xf aarch64--glibc--stable-2022.08-1.tar.bz2 -C /opt

$ rm aarch64--glibc--stable-2022.08-1.tar.bz2

- Build the kernel

$ cd source

$ export ARCH=arm64

$ export CROSS_COMPILE=/opt/aarch64--glibc--stable-2022.08-1/bin/aarch64-linux-

$ ./nvbuild_asus.sh

- Install new kernel dtbs and kernel modules

$ ./do_copy.sh

$ export INSTALL_MOD_PATH=`realpath ../rootfs/`

$ ./nvbuild_asus.sh -i

$ cd ..

- Pre-install JetPack SDK (optional)

$ sudo sed -i "s/<SOC>/t234/g" rootfs/etc/apt/sources.list.d/nvidia-l4t-apt-source.list

$ sudo cp /usr/bin/qemu-aarch64-static rootfs/usr/bin/

$ sudo mount --bind /sys ./rootfs/sys

$ sudo mount --bind /dev ./rootfs/dev

$ sudo mount --bind /dev/pts ./rootfs/dev/pts

$ sudo mount --bind /proc ./rootfs/proc

$ sudo chroot rootfs

# apt update

# apt install -y nvidia-jetpack

# apt clean

# exit

$ sudo umount ./rootfs/sys

$ sudo umount ./rootfs/dev/pts

$ sudo umount ./rootfs/dev

$ sudo umount ./rootfs/proc

$ sudo rm rootfs/usr/bin/qemu-aarch64-static
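The bind mounts around the chroot step are easy to leave behind if a command fails. The following is a minimal sketch of a helper pair, assuming the same four mount points as above; `run`, `mount_binds`, `umount_binds`, and the `DRY_RUN` switch are hypothetical names introduced for illustration.

```shell
# Bind-mount helpers for the chroot step (hypothetical names, not part of L4T).
# With DRY_RUN=1 the commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

mount_binds() {
    # $1: path to the rootfs directory
    for d in sys dev dev/pts proc; do
        run sudo mount --bind "/$d" "$1/$d" || return 1
    done
}

umount_binds() {
    # Reverse of the mount order, so dev/pts is released before dev.
    for d in proc dev/pts dev sys; do
        run sudo umount "$1/$d"
    done
}
```

A typical sequence would be `mount_binds rootfs && sudo chroot rootfs; umount_binds rootfs`. Running `DRY_RUN=1 mount_binds rootfs` first prints the commands without touching the system.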

- Flash the device

- Orin NX 16G

$ sudo BOARDID=3767 BOARDSKU=0000 ./tools/kernel_flash/l4t_initrd_flash.sh --external-device nvme0n1p1 -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" --showlogs --network usb0 PE1100N-orin internal

- Orin NX 8G

$ sudo BOARDID=3767 BOARDSKU=0001 ./tools/kernel_flash/l4t_initrd_flash.sh --external-device nvme0n1p1 -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" --showlogs --network usb0 PE1100N-orin internal

- Orin Nano 8G

$ sudo BOARDID=3767 BOARDSKU=0003 ./tools/kernel_flash/l4t_initrd_flash.sh --external-device nvme0n1p1 -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" --showlogs --network usb0 PE1100N-orin internal

- Orin Nano 4G

$ sudo BOARDID=3767 BOARDSKU=0004 ./tools/kernel_flash/l4t_initrd_flash.sh --external-device nvme0n1p1 -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" --showlogs --network usb0 PE1100N-orin internal

$ sudo rm bootloader/chip_info.bin_bak
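The four flash commands above differ only in BOARDSKU. The following sketch prints the command for a given module, assuming the SKU values listed above; the short module keys (`nx16`, `nx8`, `nano8`, `nano4`) and both function names are hypothetical.

```shell
# Look up the BOARDSKU for a module key (keys are hypothetical shorthand).
board_sku_for() {
    case "$1" in
        nx16)  echo 0000 ;;
        nx8)   echo 0001 ;;
        nano8) echo 0003 ;;
        nano4) echo 0004 ;;
        *) echo "unknown module: $1" >&2; return 1 ;;
    esac
}

# Print (do not run) the flash command for the given module key.
flash_command_for() {
    sku="$(board_sku_for "$1")" || return 1
    printf 'sudo BOARDID=3767 BOARDSKU=%s ./tools/kernel_flash/l4t_initrd_flash.sh --external-device nvme0n1p1 -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" --showlogs --network usb0 PE1100N-orin internal\n' "$sku"
}
```

For example, `flash_command_for nx8` prints the Orin NX 8G command. The Orin Nano 4G still needs the chip_info.bin_bak steps shown above; this sketch does not handle them.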

- Installing Prerequisites

$ sudo apt update

$ sudo apt install -y apt-utils bc build-essential cpio curl \

device-tree-compiler expect gawk gdisk git kmod liblz4-tool libssl-dev \

locales parted python3 qemu-user-static rsync \

software-properties-common sudo time tzdata udev unzip wget zip \

nfs-kernel-server uuid-runtime

- Download and prepare the Linux_for_Tegra source code

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v4.3/release/Jetson_Linux_r36.4.3_aarch64.tbz2

$ tar xf Jetson_Linux_r36.4.3_aarch64.tbz2

- Download and prepare sample root file system

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v4.3/release/Tegra_Linux_Sample-Root-Filesystem_r36.4.3_aarch64.tbz2

$ sudo tar xpf Tegra_Linux_Sample-Root-Filesystem_r36.4.3_aarch64.tbz2 -C Linux_for_Tegra/rootfs/

- Sync the source code for compiling

$ cd Linux_for_Tegra/source/

$ ./source_sync.sh -t jetson_36.4.3

- If source_sync.sh cannot connect to nv-tegra.nvidia.com (see the reference below), please wait for NVIDIA to fix it.

Reference: https://forums.developer.nvidia.com/t/unable-to-connect-to-nv-tegra-nvidia-com/334353

- Download the patch file for PE1100N

https://drive.google.com/file/d/14sQxWWBMq40wqrE8U0H9MEENZv54nCK4/view?usp=sharing

Copy it to the same location as the Linux_for_Tegra folder.

- Overwrite the original source code with the patch files

$ cd ../..

$ tar zxf PE1100N_v2.0.11_patch.tar.gz

$ sudo cp -r PE1100N_v2.0.11_patch/Linux_for_Tegra/* Linux_for_Tegra/

- Apply necessary changes to rootfs

$ cd Linux_for_Tegra

$ sudo ./apply_binaries.sh

- Download and install the toolchain

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v3.0/toolchain/aarch64--glibc--stable-2022.08-1.tar.bz2

$ sudo tar xf aarch64--glibc--stable-2022.08-1.tar.bz2 -C /opt

$ rm aarch64--glibc--stable-2022.08-1.tar.bz2

- Build the kernel

$ cd source

$ export ARCH=arm64

$ export CROSS_COMPILE=/opt/aarch64--glibc--stable-2022.08-1/bin/aarch64-linux-

$ ./nvbuild_asus.sh

- Install new kernel dtbs and kernel modules

$ ./do_copy.sh

$ export INSTALL_MOD_PATH=`realpath ../rootfs/`

$ ./nvbuild_asus.sh -i

$ cd ..

- Pre-install JetPack SDK (optional)

$ sudo sed -i "s/<SOC>/t234/g" rootfs/etc/apt/sources.list.d/nvidia-l4t-apt-source.list

$ sudo cp /usr/bin/qemu-aarch64-static rootfs/usr/bin/

$ sudo mount --bind /sys ./rootfs/sys

$ sudo mount --bind /dev ./rootfs/dev

$ sudo mount --bind /dev/pts ./rootfs/dev/pts

$ sudo mount --bind /proc ./rootfs/proc

$ sudo chroot rootfs

# apt update

# apt install -y nvidia-jetpack

# apt clean

# exit

$ sudo umount ./rootfs/sys

$ sudo umount ./rootfs/dev/pts

$ sudo umount ./rootfs/dev

$ sudo umount ./rootfs/proc

$ sudo rm rootfs/usr/bin/qemu-aarch64-static

- Build the SSD image

$ sudo ./build.sh

- Select the SOM you are using.

- Build success will be shown as in the image below.

- The image will be generated in the mfi_PE1100N-orin folder and will be packaged as mfi_PE1100N-orin.tar.gz.

- Flash image to device

Installing the flash requirements

$ sudo ./tools/l4t_flash_prerequisites.sh

Set up the device in recovery mode, then flash the image.

$ cd mfi_PE1100N-orin

$ sudo ./tools/kernel_flash/l4t_initrd_flash.sh --erase-all --flash-only --showlogs --network usb0

- Please make sure your OS is JetPack 6.0

$ cat /etc/nv_tegra_release
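The release file can also be checked in a script. The following is a minimal sketch, assuming the first line of /etc/nv_tegra_release has the usual form `# R36 (release), REVISION: 3.0, ...`; the function name `l4t_version` is hypothetical. According to the version table on this page, JetPack 6.0 corresponds to L4T 36.3.0.

```shell
# Read nv_tegra_release content on stdin and print the L4T version, e.g. 36.3.0.
# Assumes the header line looks like "# R36 (release), REVISION: 3.0, ...".
l4t_version() {
    sed -n 's/^# R\([0-9][0-9]*\) (release), REVISION: \([0-9.][0-9.]*\),.*/\1.\2/p' | head -n 1
}
```

On the device, `l4t_version < /etc/nv_tegra_release` should print `36.3.0` on a JetPack 6.0 image. An empty result means the file did not match the assumed format.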

- Add the R36.4/JP 6.1 repo

$ echo "deb https://repo.download.nvidia.com/jetson/common r36.4 main" | sudo tee -a /etc/apt/sources.list.d/nvidia-l4t-apt-source.list

$ echo "deb https://repo.download.nvidia.com/jetson/t234 r36.4 main" | sudo tee -a /etc/apt/sources.list.d/nvidia-l4t-apt-source.list

- Update the apt package index

$ sudo apt-get update

- Install JetPack compute components

$ sudo apt-get install nvidia-jetpack

- Remove the R36.4/JP 6.1 repo to avoid installing nvidia-l4t bsp packages accidentally later
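No command is given for this step. One possible approach, shown here as a sketch, is to filter out exactly the two lines that were appended earlier; the function name `drop_r364_repo` is hypothetical, and the pattern assumes the lines were added verbatim as shown above.

```shell
# Filter stdin and drop the two R36.4 repo lines added in the earlier step.
# drop_r364_repo is a hypothetical helper name.
drop_r364_repo() {
    grep -v -E '^deb https://repo\.download\.nvidia\.com/jetson/(common|t234) r36\.4 main$'
}

# Possible usage on the device (review the result before replacing the file):
#   list=/etc/apt/sources.list.d/nvidia-l4t-apt-source.list
#   drop_r364_repo < "$list" | sudo tee "$list.new" > /dev/null
#   sudo mv "$list.new" "$list"
```

Writing to a separate file first keeps the original list intact until the filtered result has been checked.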

Important: Do not use apt-get upgrade, because that will upgrade the L4T packages too.

For more details, please follow the link below from NVIDIA official website.

https://docs.nvidia.com/jetson/archives/jetpack-archived/jetpack-61/install-setup/index.html#upgradable-compute-stack

For Jetpack 5 & Jetpack 6

Prerequisites

a. Ubuntu Host PC 20.04 or 22.04

b. Micro USB cable

- Installing Prerequisites

$ sudo apt update

$ sudo apt install -y abootimg binfmt-support binutils cpio cpp device-tree-compiler dosfstools \

iproute2 iputils-ping lbzip2 libxml2-utils netcat nfs-kernel-server openssl python3-yaml qemu-user-static \

rsync sshpass udev uuid-runtime whois xmlstarlet zstd lz4 chrpath diffstat xxd wget bc

- Download and prepare the Nvidia Jetpack SDK

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v4.3/release/Jetson_Linux_r36.4.3_aarch64.tbz2

$ tar xf Jetson_Linux_r36.4.3_aarch64.tbz2

After extracting the Jetson_Linux_r36.4.3_aarch64.tbz2 file, you will see a Linux_for_Tegra folder.

- Download and prepare sample root file system

$ wget https://developer.nvidia.com/downloads/embedded/l4t/r36_release_v4.3/release/Tegra_Linux_Sample-Root-Filesystem_r36.4.3_aarch64.tbz2

$ sudo tar xpf Tegra_Linux_Sample-Root-Filesystem_r36.4.3_aarch64.tbz2 -C Linux_for_Tegra/rootfs/

- Download the backup and restore patch file for PE1100N

https://drive.google.com/file/d/13ncd179x_MB6-fYrm05OT7KIpm20B4v_/view?usp=sharing

- Place the file PE1100N_backup_restore_patch.tar in the same directory as the Linux_for_Tegra folder

- Overwrite the original SDK with the patch files

$ tar xf PE1100N_backup_restore_patch.tar

$ sudo cp -r PE1100N_backup_restore_patch/Linux_for_Tegra/* Linux_for_Tegra

- Apply the binaries to the rootfs.

$ cd Linux_for_Tegra

$ sudo ./apply_binaries.sh

- Create the backup image

a. Enter Force Recovery Mode on the PE1100N

- Power off the PE1100N and remove the power cable.

- Connect Host Computer and PE1100N Flash Port with Micro USB cable.

- Press and hold the Force Recovery Button.

- Connect the power cable and Power ON the PE1100N.

- After 3s release the Force Recovery Button.

b. Run this command from the Linux_for_Tegra folder on host PC:

$ sudo ./PE1100N_backup.sh

If this command completes successfully, a backup image is stored in Linux_for_Tegra/tools/backup_restore/images.

c. After successful backup, you can manually reset the device.

- Restore a PE1100N using a backup image

a. Enter Force Recovery Mode on the PE1100N

- Power off the PE1100N and remove the power cable.

- Connect Host Computer and PE1100N Flash Port with Micro USB cable.

- Press and hold the Force Recovery Button.

- Connect the power cable and Power ON the PE1100N.

- After 3s release the Force Recovery Button.

b. Run this command from the Linux_for_Tegra folder on host PC:

$ sudo ./PE1100N_restore.sh

c. After successful restore, you can manually reset the device.

Please refer to the table below for recommended OS versions and NVIDIA SDK versions for each image version

| PE1100N official release version | L4T | Ubuntu | JetPack | CUDA | DeepStream SDK | cuDNN | TensorRT |

|---|---|---|---|---|---|---|---|

| V1.0.0 | 35.3.1 | 20.04 | 5.1.1 | 11.4.19 | 6.2 | 8.6.0 | 8.5.2 |

| V1.1.1 | 35.4.1 | 20.04 | 5.1.2 | 11.4.19 | 6.3 | 8.6.0 | 8.5.2 |

| V2.0.5 | 36.3.0 | 22.04 | 6.0 | 12.2.2 | 6.4/7.0 | 8.9.4 | 8.6.2 |

| V2.0.6 | 36.3.0 | 22.04 | 6.0 | 12.2.2 | 6.4/7.0 | 8.9.4 | 8.6.2 |

| V2.0.7 | 36.4.0 | 22.04 | 6.1 | 12.6.10 | 7.1 | 9.3.0 | 10.3.0 |

| V2.0.11 | 36.4.3 | 22.04 | 6.2 | 12.6.10 | 7.1 | 9.3.0 | 10.3.0 |

NOTE : Please insert the SIM card and power on the device.

-

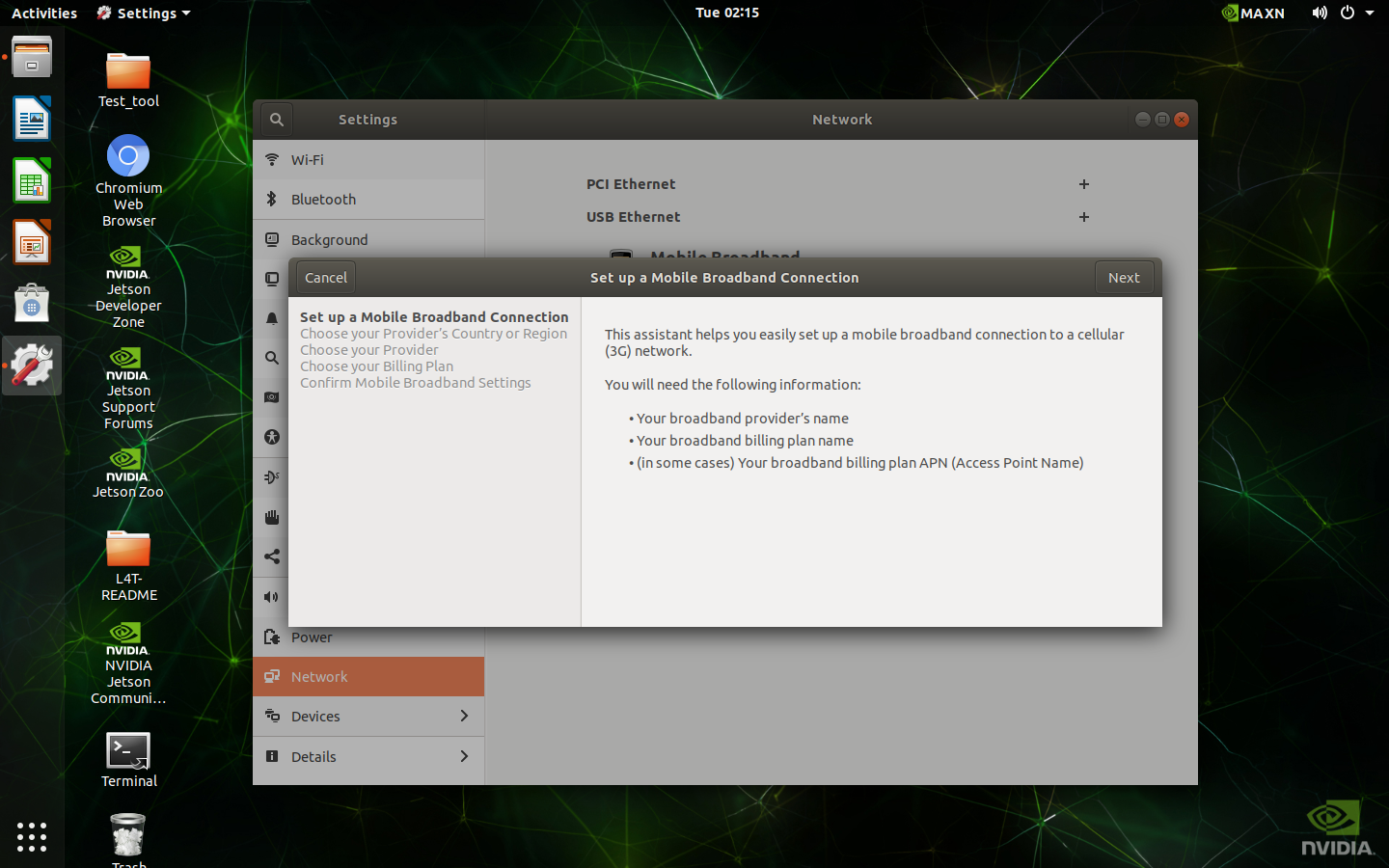

Click to open the settings bar at the top right side of the desktop.

-

Click Mobile Broadband Settings

-

Switch on the Mobile Broadband to enable LTE function

-

Open the Network bar below the IMEI

-

Select Add new connection

-

Click Next on the top right of the window

-

Select your country

-

Select your provider

-

Select your plan

-

Click Apply on the top right of the window

-

It will use this configure to connect to the internet automatically

-

The current signal strength also appear on the settings bar

- Open Terminal on the desktop

- Type the command sudo PE1100N-config and press Enter. If it asks for a password, enter the password you set.

- Select Ok

- Select 5. SIM Select SIM slot and press Enter

- Select 1 SIM1 or 2 SIM2 and press Enter

- Select Finish and press Enter

- Select Yes and press Enter to reboot

- Open Terminal on the desktop

- Type the command sudo PE1100N-config and press Enter. If it asks for a password, enter the password you set.

- Select Ok

- Select 7. Configuration and press Enter

- Check SIM slot and SIM state on the screen

- Open Terminal on the desktop

- Type the command sudo PE1100N-config and press Enter. If it asks for a password, enter the password you set.

- Select Ok

- Select the M.2, COM Mode, and SIM you want to switch

- Open Terminal on the desktop

- Type the commands below to control DIO

Set one DO Value: sudo dio_out #DO_Num #Value

Get one DI Value: sudo dio_in #DI_Num

Get all DO Value: sudo dio_out

Get all DI Value: sudo dio_in

- Open Terminal on the desktop

- The commands below are examples that turn on an LED (write 1 to turn the LED on, or 0 to turn it off)

ETH0: echo 1 | sudo tee /sys/class/leds/eth0-led/brightness

ETH1: echo 1 | sudo tee /sys/class/leds/eth1-led/brightness

UART0: echo 1 | sudo tee /sys/class/leds/uart0-led/brightness

UART1: echo 1 | sudo tee /sys/class/leds/uart1-led/brightness

CAN: echo 1 | sudo tee /sys/class/leds/can-led/brightness

WIFI: echo 1 | sudo tee /sys/class/leds/wifi-led/brightness

LTE: echo 1 | sudo tee /sys/class/leds/lte-led/brightness

- Open Terminal on the desktop

- Type the commands below to disable the kernel LED function

ETH0: echo none | sudo tee /sys/class/leds/eth0-led/trigger

ETH1: echo none | sudo tee /sys/class/leds/eth1-led/trigger

UART0: echo none | sudo tee /sys/class/leds/uart0-led/trigger

UART1: echo none | sudo tee /sys/class/leds/uart1-led/trigger

CAN: echo none | sudo tee /sys/class/leds/can-led/trigger

WIFI: echo none | sudo tee /sys/class/leds/wifi-led/trigger

LTE: echo none | sudo tee /sys/class/leds/lte-led/trigger

- Open Terminal on the desktop

- Type the commands below to restore the kernel LED function

ETH0: echo eth0 | sudo tee /sys/class/leds/eth0-led/trigger

ETH1: echo eth1 | sudo tee /sys/class/leds/eth1-led/trigger

UART0: echo uart0 | sudo tee /sys/class/leds/uart0-led/trigger

UART1: echo uart1 | sudo tee /sys/class/leds/uart1-led/trigger

CAN: echo can | sudo tee /sys/class/leds/can-led/trigger

WIFI: echo wifi | sudo tee /sys/class/leds/wifi-led/trigger

LTE: echo lte | sudo tee /sys/class/leds/lte-led/trigger
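The LED commands above all write one value to one sysfs node, so they can be wrapped in a small helper. The following is a minimal sketch, assuming the `<name>-led` directory layout shown above. `LED_ROOT`, `write_led_attr`, `led_on`, `led_off`, and `led_trigger` are hypothetical names. The helper writes directly when the node is writable and otherwise pipes through `sudo tee`.

```shell
# Base directory of the LED nodes; override LED_ROOT for testing.
LED_ROOT="${LED_ROOT:-/sys/class/leds}"

# write_led_attr NAME ATTR VALUE, e.g. write_led_attr eth0 brightness 1
write_led_attr() {
    node="$LED_ROOT/$1-led/$2"
    [ -e "$node" ] || { echo "no such LED attribute: $node" >&2; return 1; }
    if [ -w "$node" ]; then
        printf '%s\n' "$3" > "$node"
    else
        # Not writable as the current user: let sudo perform the write.
        printf '%s\n' "$3" | sudo tee "$node" > /dev/null
    fi
}

led_on()      { write_led_attr "$1" brightness 1; }
led_off()     { write_led_attr "$1" brightness 0; }
led_trigger() { write_led_attr "$1" trigger "$2"; }
```

For example, `led_trigger wifi none` followed by `led_on wifi` takes the WIFI LED away from the kernel and switches it on, and `led_trigger wifi wifi` hands it back.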

ASUS API is a layer between hardware drivers and user applications. When a user application wants to access hardware resources (fan, watchdog, GPIO), it calls the ASUS API function and this function will use driver or system calls to perform the task.

Supported functions:

- Obtaining general information about the system

- System monitoring: thermal, voltage, fan, etc.

- Watchdog, GPIO control

- Power scheduling

- Connectivity management

ASUS API is compatible with EAPI specification and goes a step further to offer additional features.

ASUS API is released as a dynamic-link library, so it can be used by any application developed in C++, C#, or other high-level programming languages. To use ASUS API, application developers only have to add the ASUS API library to their project.

Asus_API_Programming_Guide_v1.05_20240223.pdf

ASUS API (Library, Header files, Sample code)

For PE1100N Box, the Console Port is the red box.

- Connect the PE1100N console port to a PC with a Micro USB cable.

Open Device Manager on the PC; USB TO UART BRIDGE may appear there.

- In this case, you need to download the driver (F81232_231115_whql.zip) and update it.

- Install and open PuTTY, select Serial, enter the COM port (COM*), and set Speed to 115200.

- Click the Open button in PuTTY and power on the PE1100N; boot logs will be printed in PuTTY on the PC.

- Install minicom on Linux PC

sudo apt update

sudo apt install minicom

- Connect the Micro USB cable to the console port on the PE1100N

- Check if /dev/ttyUSB0 exists

- Execute the script from log_uart.zip to capture logs

sudo ./log_uart.sh

If you want to exit minicom, press Ctrl+A, then press X.

- The log will be generated in the uart_logs folder.
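The console can also be opened by hand, without the log script. The following is a minimal sketch, assuming the console enumerates as /dev/ttyUSB0 and uses the same 115200 speed as the PuTTY setup; `CONSOLE_DEV`, `console_present`, and `open_console` are hypothetical names, and the contents of log_uart.sh are not reproduced here.

```shell
# Serial device of the console port; override CONSOLE_DEV if it enumerates differently.
CONSOLE_DEV="${CONSOLE_DEV:-/dev/ttyUSB0}"

# Succeeds when the given path is a character device.
console_present() {
    [ -c "$1" ]
}

# Open the console with minicom at 115200 baud if the device exists.
open_console() {
    if console_present "$CONSOLE_DEV"; then
        sudo minicom -D "$CONSOLE_DEV" -b 115200
    else
        echo "$CONSOLE_DEV not found; check the Micro USB cable" >&2
        return 1
    fi
}
```

Calling `open_console` fails early with a message when the cable is not connected, instead of starting minicom on a missing device.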

NVIDIA Metropolis Microservices is a collection of software components designed for building intelligent video analytics applications. It's part of NVIDIA Metropolis, which is a platform aimed at transforming cities, enterprises, and industries into smart environments using computer vision, deep learning, and AI.

Prerequisites

- Jetson Orin devices with JetPack 6.0 OS

- Android phone or tablet

- Install Docker

sudo apt install -y docker.io

sudo usermod -aG docker $USER

newgrp docker

- Install nvidia-jetson-services

sudo apt update

sudo apt install -y nvidia-jetson-services

- Launch the Redis, Ingress and VST services

sudo systemctl start jetson-redis

sudo systemctl start jetson-ingress

sudo systemctl start jetson-vst

- Download Jetson Platform Services Reference Workflow & Resources

Follow the link below and select the Version History tab

Choose 1.1.0 -> Download

https://catalog.ngc.nvidia.com/orgs/nvidia/teams/jps/resources/reference-workflow-and-resources

Note: 2.0.0 is for JetPack 6.1


- Launch NVStreamer

unzip files.zip

rm files.zip

cd files

tar -xvf nvstreamer-1.1.0.tar.gz

cd nvstreamer

sudo docker compose -f compose_nvstreamer.yaml up -d --force-recreate

- Launch AI_NVR

cd <path of files>

tar -xvf ai_nvr-1.1.0.tar.gz

sudo cp ai_nvr/config/ai-nvr-nginx.conf /opt/nvidia/jetson/services/ingress/config/

cd ai_nvr

For Orin AGX:

sudo docker compose -f compose_agx.yaml up -d --force-recreate

For Orin NX 16G:

sudo docker compose -f compose_nx16.yaml up -d --force-recreate

For Orin NX 8G:

sudo docker compose -f compose_nx8.yaml up -d --force-recreate

For Orin Nano 8G/4G:

sudo docker compose -f compose_nano.yaml up -d --force-recreate

Docker containers will be created after executing the above instructions.
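The compose file depends only on the module, so the choice can be made by a small lookup. The following is a sketch assuming the file names listed above; the module keys and the function name `compose_file_for` are hypothetical.

```shell
# Print the AI_NVR compose file for a module key (keys are hypothetical shorthand).
compose_file_for() {
    case "$1" in
        agx)         echo compose_agx.yaml ;;
        nx16)        echo compose_nx16.yaml ;;
        nx8)         echo compose_nx8.yaml ;;
        nano8|nano4) echo compose_nano.yaml ;;
        *) echo "unknown module: $1" >&2; return 1 ;;
    esac
}
```

From inside the ai_nvr folder, this could be used as `sudo docker compose -f "$(compose_file_for nx16)" up -d --force-recreate`.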

- Visit the NVStreamer Dashboard

http://localhost:31000/

Click "File Upload" and select sample_1080p_h264.mp4 from the files folder.

The file should appear on the dashboard once it has been uploaded successfully

Ex: The RTSP URL is rtsp://192.168.1.46:31555/nvstream/root/store/nvstreamer_videos/sample_1080p_h264.mp4

- Visit the VST Dashboard

http://localhost:30080/vst

Click “Sensor Management”, then add the device

- Enter the sample RTSP URL

- Enter the sample name

- Click submit

The sample video should be listed on the VST Dashboard.

- Example for Metropolis analytics

A. Go to http://localhost:30080/vst

B. Click Live Streams.

C. Select the sample VST.

D. Expand Analytics.

E. Click “ROI”.

F. Add areas of interest, then click “Done”

G. Enter “People” on the dialog, then click Submit

H. Select People on “Select ROIs”

I. Click “Show”

J. Detected people will be displayed on the video screen.

- Download and install NVIDIA Jetson Services on an Android phone or tablet

This Android app lets you track events and create areas of interest to monitor. You can find it on Google Play as AI NVR at the link below.

https://play.google.com/store/apps/details?id=com.nvidia.ainvr

For JetPack 6.0, please download and install this version manually instead of the Play Store.

https://apkpure.com/cn/jetson-platform-services/com.nvidia.ainvr/downloading/1.0.2024060601

- Execute NVIDIA Jetson Services on Android device

A. Enter the JETSON_IP address, then press “Submit”

B. Press “Analytics”

C. This App allows you to track events and create areas of interest to monitor

Reference:

- Tutorial mmj

https://www.jetson-ai-lab.com/tutorial_mmj.html

- NVIDIA Metropolis Microservices

https://developer.nvidia.com/metropolis-microservices

- Overview of AI-NVR

https://www.nvidia.com/zh-tw/on-demand/session/other2024-mmj2/

- Flash images for Jetpack 6.0

Please follow the link below to flash the devices based on your models

For Orin Nano 8G:

https://dlcdnets.asus.com/pub/ASUS/mb/Embedded_IPC/PE1100N/PE1100N_JONAS_Orin-Nano-8GB_JetPack-ssd-6.0.0_L4T-36.3.0_v2.0.6-official-20240904.tar.gz?model=PE1100N

For Orin Nano 4G:

https://dlcdnets.asus.com/pub/ASUS/mb/Embedded_IPC/PE1100N/PE1100N_JONAS_Orin-Nano-4GB_JetPack-ssd-6.0.0_L4T-36.3.0_v2.0.6-official-20240905.tar.gz?model=PE1100N

- Install Jetson Stats and use jtop to monitor the system

sudo apt-get update

sudo apt-get install python3-pip

sudo pip3 install -U jetson-stats

sudo reboot

jtop

For more details, please follow the link below from NVIDIA developer website.

https://developer.nvidia.com/embedded/community/jetson-projects/jetson_stats

- Upgrade the compute stack to JetPack 6.1

Please follow the link below to upgrade your device to JetPack 6.1. If you are using a release based on JetPack 6.1, you can skip this step.

https://github.com/ASUS-IPC/ASUS-IPC/wiki/PE1100N#66-upgrade-to-jetpack-61

- Meet the prerequisites for the DeepStream SDK

sudo pip3 install meson

sudo pip3 install ninja

cd ~/Documents/

git clone https://github.com/GNOME/glib.git

cd glib

git checkout 2.76.6

meson build --prefix=/usr

ninja -C build/

cd build/

sudo ninja install

pkg-config --modversion glib-2.0

sudo apt install \

libssl3 \

libssl-dev \

libgstreamer1.0-0 \

gstreamer1.0-tools \

gstreamer1.0-plugins-good \

gstreamer1.0-plugins-bad \

gstreamer1.0-plugins-ugly \

gstreamer1.0-libav \

libgstreamer-plugins-base1.0-dev \

libgstrtspserver-1.0-0 \

libjansson4 \

libyaml-cpp-dev

For more details, please follow the link below from NVIDIA official website.

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_Installation.html#prerequisites
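After the glib build, the version reported by pkg-config can be compared in a script. The following is a minimal sketch, assuming the goal is a glib at least as new as the 2.76.6 tag checked out above and that GNU `sort -V` is available; the function name `version_ge` is hypothetical.

```shell
# version_ge A B succeeds when version A is greater than or equal to version B.
# Relies on GNU sort -V (version sort): the smaller of the two sorts first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example, `version_ge "$(pkg-config --modversion glib-2.0)" 2.76.6 && echo "glib is new enough"` confirms that the freshly installed glib is the one being picked up.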

- Install the DeepStream SDK

cd ~/Documents/

wget --content-disposition 'https://api.ngc.nvidia.com/v2/resources/nvidia/deepstream/versions/7.1/files/deepstream-7.1_7.1.0-1_arm64.deb' -O deepstream-7.1_7.1.0-1_arm64.deb

sudo apt-get install ./deepstream-7.1_7.1.0-1_arm64.deb

wget --content-disposition 'https://github.com/NVIDIA-AI-IOT/deepstream_python_apps/releases/download/v1.2.0/pyds-1.2.0-cp310-cp310-linux_aarch64.whl' -O pyds-1.2.0-cp310-cp310-linux_aarch64.whl

sudo pip3 install pyds-1.2.0-cp310-cp310-linux_aarch64.whl

sudo pip3 install cuda-python

Please follow the links below for more information

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_Installation.html#install-the-deepstream-sdk

https://github.com/NVIDIA-AI-IOT/deepstream_python_apps/releases

https://github.com/NVIDIA-AI-IOT/deepstream_python_apps/tree/master/bindings

- Download the DeepStream Python samples

cd /opt/nvidia/deepstream/deepstream/sources/

sudo git clone https://github.com/NVIDIA-AI-IOT/deepstream_python_apps.git

Please follow the links below for more information

https://github.com/NVIDIA-AI-IOT/deepstream_python_apps

- Run the DeepStream samples

Before running the samples, please run the following commands to boost the clocks

sudo nvpmodel -m 0

sudo jetson_clocks

For more information, please refer to the following URL.

https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_Quickstart.html#boost-the-clocks

- Run the sample deepstream-test2

This sample runs a pipeline with 4-class object detection, tracking, and attribute classification.

cd /opt/nvidia/deepstream/deepstream/sources/deepstream_python_apps/apps/deepstream-test2/

python3 deepstream_test_2.py /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264

For more information, please refer to the following URL.

https://github.com/NVIDIA-AI-IOT/deepstream_python_apps/tree/master/apps/deepstream-test2

- Run the sample deepstream-test3 with single file input

This sample runs a multi-stream pipeline that performs 4-class object detection. It also supports Triton Inference Server, no-display mode, file-loop, and silent mode.

cd /opt/nvidia/deepstream/deepstream/sources/deepstream_python_apps/apps/deepstream-test3/

sudo python3 deepstream_test_3.py -i file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 -s --file-loop

- Run the sample deepstream-test3 with 4 file inputs

cd /opt/nvidia/deepstream/deepstream/sources/deepstream_python_apps/apps/deepstream-test3/

sudo python3 deepstream_test_3.py -i file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4 -s --file-loop

For more information, please refer to the following URL.

https://github.com/NVIDIA-AI-IOT/deepstream_python_apps/tree/master/apps/deepstream-test3
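The multi-input command repeats the same URI several times. The following sketch builds that argument list for any stream count, assuming the URI contains no spaces; the function name `repeat_uri` is hypothetical.

```shell
# repeat_uri URI COUNT prints URI repeated COUNT times, separated by spaces.
repeat_uri() {
    uri="$1"
    count="$2"
    out=""
    i=0
    while [ "$i" -lt "$count" ]; do
        out="${out:+$out }$uri"
        i=$((i + 1))
    done
    echo "$out"
}

# Possible usage (the unquoted $(...) is intentional so each URI becomes its own argument):
#   SAMPLE=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.mp4
#   sudo python3 deepstream_test_3.py -i $(repeat_uri "$SAMPLE" 4) -s --file-loop
```

Changing the count makes it easy to see how many parallel streams a given module can sustain.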

VLMs are multimodal models that support images, video, and text by combining large language models with vision transformers. This lets them answer text prompts about videos and images, enabling capabilities such as chatting with a video and defining natural-language alerts.

Prerequisites

- Jetson Orin devices with JetPack 6.0 OS

- Android phone or tablet

- Install Docker

sudo apt install -y docker.io

sudo usermod -aG docker $USER

newgrp docker

- Install nvidia-jetson-services

sudo apt update

sudo apt install -y nvidia-jetson-services

- Launch the VST services

sudo systemctl start jetson-vst

- Download Jetson Platform Services Reference Workflow & Resources

Follow the link below and select the Version History tab

Choose 1.1.0 -> Download

https://catalog.ngc.nvidia.com/orgs/nvidia/teams/jps/resources/reference-workflow-and-resources

Note: 2.0.0 is for JetPack 6.1


- Launch NVStreamer

unzip files.zip

rm files.zip

cd files

tar -xvf nvstreamer-1.1.0.tar.gz

cd nvstreamer

sudo docker compose -f compose_nvstreamer.yaml up -d --force-recreate

- Visit the NVStreamer Dashboard

http://localhost:31000/

Click "File Upload" and select sample_1080p_h264.mp4 from the files folder.

The file should appear on the dashboard once it has been uploaded successfully

Ex: The RTSP URL is rtsp://192.168.1.46:31555/nvstream/root/store/nvstreamer_videos/sample_1080p_h264.mp4

- Visit the VST Dashboard

http://localhost:30080/vst

Click “Sensor Management”, then add the device

- Enter the sample RTSP URL

- Enter the sample name

- Click submit

The sample video should be listed on the VST Dashboard.

- Launch VLM

cd <path of files>

tar -xvf vlm-1.1.0.tar.gz

cd vlm/example_1

sudo cp config/vlm-nginx.conf /opt/nvidia/jetson/services/ingress/config

sudo cp config/prometheus.yml /opt/nvidia/jetson/services/monitoring/config/prometheus.yml

sudo cp config/rules.yml /opt/nvidia/jetson/services/monitoring/config/rules.yml

Start the foundation services and launch it.

sudo systemctl start jetson-ingress

sudo systemctl start jetson-monitoring

sudo systemctl start jetson-sys-monitoring

sudo systemctl start jetson-gpu-monitoring

sudo docker compose up -d

The first time the VLM service is launched, it will automatically download and quantize the VLM. This will take some time.

You can visit the page http://JETSON_IP:5015/v1/health. If the VLM is ready, it will return {"detail":"ready"}. If you are launching the VLM for the first time, it will take some time to fully load.

Important: If it shows {"detail":"model loading"}, the VLM is not ready yet.
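The health check can be polled from a script instead of a browser. The following is a minimal sketch, assuming the endpoint and the two response bodies quoted above; `vlm_ready` and `wait_for_vlm` are hypothetical names, and the 10-second interval is an arbitrary choice.

```shell
# Succeeds when the given health response body reports "ready".
vlm_ready() {
    body="$(printf '%s' "$1" | tr -d '[:space:]')"
    case "$body" in
        *'"detail":"ready"'*) return 0 ;;
        *) return 1 ;;
    esac
}

# wait_for_vlm JETSON_IP blocks until the health endpoint reports ready.
wait_for_vlm() {
    until vlm_ready "$(curl -s "http://$1:5015/v1/health")"; do
        sleep 10
    done
}
```

For example, `wait_for_vlm 192.168.100.45 && echo "VLM is ready"` returns once the model has finished loading.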

- Interact with VLM Service

A. Control Stream Input via REST APIs

You can start by adding an RTSP stream for the VLM to use with the following curl command. This will use the POST method on the live-stream endpoint. Currently the VLM will only support 1 stream but in the future this API will allow for multi-stream support.

Replace 192.168.100.45 with your Jetson IP and replace the RTSP link with your RTSP link.

curl --location 'http://192.168.100.45:5010/api/v1/live-stream' \

--header 'Content-Type: application/json' \

--data '{

"liveStreamUrl":"rtsp://192.168.100.45:31554/nvstream/root/store/nvstreamer_videos/sample_1080p_h264.mp4"

}'

This request will return a unique stream ID that is used later to set alerts, ask follow-up questions, and remove the stream.

Ex: "id": "f16ffb43-95a4-44c5-bc5f-00cad33ddaf2"
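To reuse the stream ID in later requests, it can be pulled out of the response in a script. The following is a minimal sketch, assuming the response contains an `"id": "..."` pair as in the example above; the function name `stream_id_from` is hypothetical, and a JSON tool such as jq would be a more robust choice if it is installed.

```shell
# Print the value of the "id" field found in the given response text.
stream_id_from() {
    printf '%s\n' "$1" | sed -n 's/.*"id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Possible usage, capturing the response of the POST request shown above:
#   response="$(curl -s --location "http://$JETSON_IP:5010/api/v1/live-stream" \
#       --header 'Content-Type: application/json' \
#       --data "{\"liveStreamUrl\":\"$RTSP_URL\"}")"
#   STREAM_ID="$(stream_id_from "$response")"
```

`JETSON_IP`, `RTSP_URL`, and `STREAM_ID` are placeholder variable names chosen for this example.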

B. Set Alerts

Alerts are questions that the VLM will continuously evaluate on the live stream input. For each alert rule set, the VLM will try to decide if it is True or False based on the most recent frame of the live stream. These True and False states, as determined by the VLM, are sent to a websocket and the jetson monitoring service.

When setting alerts, phrase the alert rule as a yes/no question, such as “Is there people?”. The body of the request must also have the “id” field that corresponds to the stream ID that was returned when the RTSP stream was added.

curl --location 'http://192.168.100.45:5010/api/v1/alerts' \

--header 'Content-Type: application/json' \

--data '{

"alerts": ["is there people?"],

"id": "f16ffb43-95a4-44c5-bc5f-00cad33ddaf2"

}'
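The request body can be generated from the stream ID and the questions. The following is a sketch, assuming the body format shown above; `json_escape` and `alerts_payload` are hypothetical names. Sending more than one question in the array is an assumption based on the array syntax, not something this page confirms.

```shell
# Escape backslashes and double quotes so the text is safe inside a JSON string.
json_escape() {
    printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# alerts_payload STREAM_ID QUESTION... prints the JSON body for the alerts endpoint.
alerts_payload() {
    id="$1"
    shift
    list=""
    for q in "$@"; do
        list="${list:+$list, }\"$(json_escape "$q")\""
    done
    printf '{"alerts": [%s], "id": "%s"}\n' "$list" "$id"
}
```

The output can be passed to curl with `--data "$(alerts_payload "$STREAM_ID" "is there people?")"`, where `STREAM_ID` holds the ID returned when the stream was added.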

C. View RTSP Stream Output

Once a stream is added, it will be passed through to the output RTSP stream. You can view this stream at "rtsp://JETSON_IP:5011/out". Once a query or alert is added, we can view the VLM responses on this output stream.

Ex: You can view this RTSP stream with VLC

D. Delete the stream

To shut down the example you can first remove the stream using a DELETE method on the live-stream endpoint. Note the stream ID is added to the URL path for this.

curl --location --request DELETE 'http://192.168.100.45:5010/api/v1/live-stream/f16ffb43-95a4-44c5-bc5f-00cad33ddaf2'

This request will return “Stream removed successfully”

- Using VLM Service from Android phone

A. Download and install NVIDIA Jetson Services on Android phone or tablet

This Android app lets you track events and create areas of interest to monitor. You can find it on Google Play as AI NVR at the link below.

https://play.google.com/store/apps/details?id=com.nvidia.ainvr

For JetPack 6.0, please download and install this version manually instead of the Play Store.

https://apkpure.com/cn/jetson-platform-services/com.nvidia.ainvr/downloading/1.0.2024060601

B. Execute NVIDIA Jetson Services on Android device

Enter the JETSON_IP address, then press “Submit”

Press message button.

Now you can talk to the VLM service. For example, ask the VLM what it sees on the screen.

Reference

Visual Language Models (VLM) with Jetson Platform Services

https://docs.nvidia.com/jetson/jps/inference-services/vlm.html

With ASUS IoT Cloud Console (AICC), you can manage the PE1100N in a few simple steps: view device information and online status, modify profile settings, and deploy applications remotely. Any modifications are synced in real time.

Feature

- Device Management

View online/offline information. Monitor CPU, memory, storage and usage information. Power off or restart devices.

- APP Management

Install or uninstall apps. Monitor app information.

- Sensor-data monitor

Data acquisition, visualization and monitoring.

- Notifications

The real-time detection and alarm system lets managers know the status of devices anytime, anywhere.

- Remote Command

The Command feature enables administrators to manage devices remotely, including troubleshooting and information gathering.

More information https://iot.asus.com/solutions/asus-iot-cloud-console-aicc/