Head_Report - DarshanNalwal/hello_world GitHub Wiki

Index

Phase 1 Literature survey

-

Introduction

Vision is perhaps the most important sense in human beings, and it is also a perceptual modality of crucial importance for building artificial creatures. In the human brain, visual processing starts early in the eye, and is then roughly organized according to functional processing areas in the occipital lobe. Fundamental problems remain to be solved in several fields, such as:

-

Computer Vision: object segmentation and recognition; event/goal/action identification; scene recognition

-

Robotics: robot tasks involving differentiated actions; learning and executing tasks without initial manual setup

-

Cognitive Science and AI: understanding and creation of grounded knowledge structures, situated in the world

-

Neurosciences: a more profound understanding of biological brains and their macro and micro structures

-

-

Research

-

DESIGN AND SIMULATION OF NEW LOW COST HUMANOID ROBOT

-

INTRODUCTION

- CALUMA stands for CAssino Low Cost HUMAnoid Robot

- The maximum dimensions of the CALUMA design are 962 mm height, 839 mm width and 413 mm depth

- A PLC is used to control the actuators

- 14 actuators are used to achieve desired Degrees Of Freedom

-

HEAD MODULE

- Consists of Telescopic Manipulator with 2-DOF

- DC motor actuates pitch DOF of neck

- Linear actuators actuate the up and down DOF of the head

- Head sub-system is a combination of serial telescopic manipulator + parallel manipulator

- The parallel manipulator helps in attaching head to the trunk module

- A commercial web-cam provides vision capability; it is installed as the end-effector on the telescopic manipulator

- Range of actuators = -90mm to 90mm

- Dynamic characteristics of the head sub-system: weight = 7.24 N, Ixx = 2.82E-3 kg-m^2, Iyy = 2.42E-3 kg-m^2, Izz = 3.78E-4 kg-m^2

-

VISION SYSTEM

- Obstacles detected in the camera input are categorized as:

  1. Mountable
  2. Unsurmountable
  3. Step

- The obstacle detection system is evaluated in the ViGWaM (Vision Guided Walking Machine) emulation environment

- Vision system acquires information for collision-free and goal-oriented locomotion

- Cameras with different zooms are installed on one camera head for precise classification

- Height = 1.8 m, weight = 40 kg, DOF = 17, maximum walking speed = 2 km/h, step length = 45 cm

-

PERFORMANCE OF MOTION CONTROL BOARD

-

Maximum sampling rate that can be achieved

- For sufficient minimal angle resolution, a 16-bit representation is used to index the 360° workspace

- With a PD position control loop for 4 motors:
  - Time required to read the actual motor positions from the external pulse decoders, compute, and apply the new control signal = 1300 µs
  - USB communication = 240 µs
  - In the USB protocol, packets can be sent only every 4 ms
  - Therefore, the overall sampling rate of the position control loop is 250 Hz
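The sampling-rate figures above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming one control update is sent per USB packet slot; the function and constant names are illustrative and do not come from the motion control board's firmware.

```python
# Timing figures quoted in the notes above, in microseconds.
CONTROL_US = 1300            # read decoders, compute PD law, apply control signal
USB_TRANSFER_US = 240        # USB communication
USB_PACKET_PERIOD_US = 4000  # a packet can be sent only every 4 ms


def loop_rate_hz(control_us, usb_us, packet_period_us):
    """Loop rate, assuming one control update per USB packet slot."""
    busy_us = control_us + usb_us
    # The loop period is bounded by the slower of the two constraints.
    period_us = max(busy_us, packet_period_us)
    return 1e6 / period_us


def angle_resolution_deg(bits, workspace_deg=360.0):
    """Smallest angle step when the workspace is indexed with `bits` bits."""
    return workspace_deg / (2 ** bits)


rate = loop_rate_hz(CONTROL_US, USB_TRANSFER_US, USB_PACKET_PERIOD_US)
resolution = angle_resolution_deg(16)
print(f"sampling rate: {rate:.0f} Hz, resolution: {resolution:.5f} deg")
```

Computation plus transfer takes 1540 µs, well inside the 4 ms packet slot, so under this assumption the USB packet interval rather than the computation is what limits the loop to 250 Hz.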

-

-

-

-

Low level vision processing

-

Cognitive Issues

Transformation of visual representations starts at the very basic input sensor, the eye, where light is separated into gray-scale tones by rod receptors and into the visible color spectrum by cones (Goldstein, 1996). The brain's primary visual cortex then receives the visual input from the eye. These low-level visual brain structures change the visual input representation, yielding more organized percepts. Such changes can be modelled through mathematical tools such as Daubechies wavelets or Fourier transforms for spectral analysis. Multi-scale representations fine-tune such organization.

Edge detection and spectral analysis are known to occur in the hypercolumns of area V1 in the occipital lobe. Cells in V1 have the smallest receptive fields. Vision and action are also highly interconnected at low visual processing levels in the human brain.

-

Spectral Analysis

-

Spectral coefficients of an image are often used in computer vision for segregation or for coding contextual information of a scene.

-

The selection of both the spatial and frequency resolutions poses interesting problems, as stated by Heisenberg's Uncertainty Principle.

-

Short-Time Fourier Transform

The Fourier transform is one of the techniques often applied to decompose a signal into its frequency components. A variant of it is the Short-Time Fourier Transform (STFT). This transform divides the signal into segments. Each segment is multiplied by a window, such as a rectangular or Hamming window, and the Fourier transform is then applied to it. When applied over different window sizes, this transform obtains spatial information concerning the distribution of the spectral components.
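The segment, window and transform steps can be sketched on a 1-D signal with NumPy. This is an illustrative sketch rather than the implementation used in the project: the test signal, the sampling rate `fs`, the window size and the hop length are all assumed values.

```python
import numpy as np


def stft_magnitude(signal, window_size, hop):
    """Magnitude spectra of Hamming-windowed segments, one row per segment."""
    window = np.hamming(window_size)
    rows = []
    for start in range(0, len(signal) - window_size + 1, hop):
        segment = signal[start:start + window_size] * window
        rows.append(np.abs(np.fft.rfft(segment)))
    return np.array(rows)


# Hypothetical signal: 16 Hz during the first second, 48 Hz during the second.
fs = 256
t = np.arange(2 * fs) / fs
signal = np.where(t < 1.0, np.sin(2 * np.pi * 16 * t), np.sin(2 * np.pi * 48 * t))

spec = stft_magnitude(signal, window_size=64, hop=32)
freqs = np.fft.rfftfreq(64, d=1 / fs)

# The dominant frequency changes between the first and the last segment,
# which a single Fourier transform of the whole signal could not localize.
first_peak = freqs[np.argmax(spec[0])]
last_peak = freqs[np.argmax(spec[-1])]
```

A shorter window localizes the change more precisely but widens each frequency bin, which is the resolution trade-off discussed in this section.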

-

Wavelets

- Another method widely used to extract the spatial distribution of spectral components is the Wavelet transform.

- Features: texture segmentation, image/video encoding and image noise reduction.

- A distinct feature of wavelet encoding is that reconstruction of the original image is possible without losing information.

- The Continuous Wavelet Transform (CWT) consists of convolving a signal with a mathematical function at several scales.

- CWT drawback: Not suited for discrete computation.

- Discrete Wavelet Transform (DWT) overcomes the quantization problems and allows fast computation of the transform for the digitized signals.

- Features: provides perfect signal reconstruction and produces sparse results, which is desirable for image compression.
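Perfect reconstruction and sparsity can be illustrated with a one-level Haar transform, the simplest discrete wavelet. This is a minimal sketch in plain Python; the Haar wavelet and the sample row are assumptions chosen for brevity, not the wavelet family used in the surveyed work.

```python
import math

SCALE = 1 / math.sqrt(2)  # orthonormal Haar scaling factor


def haar_dwt(signal):
    """One level of the Haar DWT on an even-length list: (approximation, detail)."""
    pairs = [(signal[i], signal[i + 1]) for i in range(0, len(signal), 2)]
    approx = [(a + b) * SCALE for a, b in pairs]
    detail = [(a - b) * SCALE for a, b in pairs]
    return approx, detail


def haar_idwt(approx, detail):
    """Invert haar_dwt, interleaving the reconstructed sample pairs."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * SCALE, (a - d) * SCALE])
    return out


# Hypothetical image row with mostly flat regions.
row = [4.0, 4.0, 6.0, 6.0, 9.0, 1.0, 3.0, 3.0]
approx, detail = haar_dwt(row)
restored = haar_idwt(approx, detail)
zero_details = sum(1 for d in detail if abs(d) < 1e-12)
```

Three of the four detail coefficients are zero because the row is locally constant, and the inverse transform returns the original samples up to floating-point rounding.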

-

Gabor Filters

Gabor filters are yet another technique for spectral analysis. They provide the best possible trade-off between spatial and frequency resolution, since they exactly meet the Heisenberg uncertainty limit. Their use for image analysis is also biologically motivated, since they model the response of the receptive fields of the orientation-selective cells in the human visual cortex.
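Orientation selectivity can be shown with a small NumPy sketch that builds the real part of a 2-D Gabor kernel (a Gaussian envelope multiplied by a cosine carrier) and applies it to a striped test pattern. The kernel size, wavelength and `sigma` are assumed values picked for illustration.

```python
import numpy as np


def gabor_kernel(size, wavelength, theta, sigma):
    """Real part of a 2-D Gabor filter with an isotropic Gaussian envelope."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    # Rotate coordinates so the carrier oscillates along direction theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + y_rot ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength)
    return envelope * carrier


size = 21
half = size // 2
y, x = np.mgrid[-half:half + 1, -half:half + 1]
stripes = np.cos(2 * np.pi * x / 8.0)  # test pattern whose intensity varies along x

aligned = gabor_kernel(size, wavelength=8.0, theta=0.0, sigma=4.0)
rotated = gabor_kernel(size, wavelength=8.0, theta=np.pi / 2, sigma=4.0)

# Filter response at the patch centre (patch and kernel have the same size).
response_aligned = float(np.sum(stripes * aligned))
response_rotated = float(np.sum(stripes * rotated))
```

The kernel tuned to the stripe orientation responds strongly, while the kernel rotated by 90 degrees gives a response close to zero.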

-

Comparative analysis and Implementation

-

STFT

Windowing dilemma in choosing the processing windows; the signal cannot be reconstructed.

-

Wavelets

Wavelet outputs at higher frequencies are generally not so smooth, and orientation selectivity is poor. Wavelets are narrow in their capabilities.

However, wavelets are much faster to compute than Gabor filters and provide a more compact representation for the same number of orientations, which motivated their selection over Gabor filters.

-

-

Chrominance/Luminance Attributes

Color is one of the most obvious and pervasive qualities in our surrounding world, with important functions in accurately perceiving forms, identifying objects and carrying out tasks important for our survival.

-

Two of the main functions of color vision are:

-

Creating perceptual segregation

This is the process of estimating the boundaries of objects, which is a crucial ability for survival in many species.

-

Signaling

Color also has a signaling function. Signals can result from the detection of bright colors or from the health index given by a person's skin color or a peacock's plumage.

-

-

-

-

-

Visual Pathway

-

Developmental Issues

Object detection and segmentation are key abilities on top of which other capabilities, such as object recognition, might be developed. Object segmentation and recognition are intertwined problems which can be cast under a developmental framework: models of objects are first learned from experimental human/robot manipulation, enabling their a-posteriori identification with or without the agent's actuation.

-

Object Recognition from Visual Local Features

Recognition of objects has to occur over a variety of scene contexts. This led to the development of an object recognition scheme that recognizes objects from color, luminance and shape cues, or from combinations of them. The object recognition algorithm therefore consists of three independent algorithms. Each recognizer operates along (nearly) orthogonal directions to the others over the input space. This approach offers the possibility of priming specific information, such as searching for a specific object feature (color, shape or luminance) independently of the others. For instance, the recognizer may focus the search on a specific color or set of colors, or look into both desired shapes and luminance.
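Priming a search on a single color cue can be sketched as follows. This is not the recognizer described above, only a hypothetical illustration that buckets pixels by hue using the standard-library `colorsys` module; the hue boundaries, thresholds and color names are assumptions.

```python
import colorsys

# Hypothetical upper hue bounds (degrees) for each named color.
HUE_BINS = [(30, "red"), (90, "yellow"), (150, "green"),
            (210, "cyan"), (270, "blue"), (330, "magenta"), (360, "red")]


def color_name(r, g, b):
    """Name the color of an 8-bit RGB pixel from its hue, saturation and value."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if v < 0.2:
        return "black"
    if s < 0.2:
        return "white" if v > 0.8 else "gray"
    hue_deg = h * 360
    for upper, name in HUE_BINS:
        if hue_deg < upper:
            return name
    return "red"


def find_color(pixels, target):
    """Indices of pixels matching the primed color, ignoring shape and luminance."""
    return [i for i, p in enumerate(pixels) if color_name(*p) == target]


pixels = [(255, 0, 0), (0, 255, 0), (250, 10, 10), (0, 0, 255), (200, 200, 200)]
red_hits = find_color(pixels, "red")
```

Because the color cue is evaluated on its own, the same search could be combined with separate shape or luminance recognizers without changing this code.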

-

-

Important features of different vision sensors

-

Time Of Flight Imagers

- Measures time of flight required for a single laser pulse to leave a camera and reflect back

- Records full 3D scenes using single laser pulse

- Rapid acquisition and rapid real-time processing of scene information

- Measurement working range: 10-30 m for phase-shift time of flight imagers; 1500 m for direct time of flight imagers

- Costs lower than that of LIDAR

- Lower power consumption than laser scanners

- Unlike stereo imaging, depth accuracy is practically independent of textural appearance
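The two measurement principles listed above reduce to short formulas: a direct imager converts the round-trip time of the pulse into distance, and a phase-shift imager converts the phase delay of a modulated signal into distance. The sketch below uses the standard relations; the 5 MHz modulation frequency is an assumed example value, not a figure from the surveyed sensors.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def direct_tof_distance(round_trip_s):
    """Distance from the round-trip time of a single pulse."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def phase_shift_distance(phase_rad, modulation_hz):
    """Distance from the phase delay of a continuously modulated signal."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Largest distance before the measured phase wraps around."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


# Round-trip time for a target at the 1500 m direct-ToF working range.
round_trip = 2 * 1500.0 / SPEED_OF_LIGHT
far_target = direct_tof_distance(round_trip)

# With an assumed 5 MHz modulation the phase wraps at roughly 30 m.
max_phase_range = unambiguous_range(5e6)
half_way = phase_shift_distance(math.pi, 5e6)
```

The phase wrap-around is one reason phase-shift imagers have a much shorter working range than direct time of flight imagers.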

-

Stereo Cameras

- A type of camera with 2 or more lenses and a separate image sensor for each lens

- Uses a method called stereo photography to capture images. Stereo photography: a method of distilling a noisy video signal into a coherent data set that a computer can process into actionable symbolic objects

- They perform well in both indoor and outdoor applications

- The sensor system generates a depth map in which each pixel represents a distance to the sensor. As a result, it can detect not only the presence of objects in the danger zone, but also their relative size and position

- Because of its simple design, a stereo camera has considerable cost advantages over currently approved technologies. Two standard industrial cameras are fixed at a defined angle to one another. The adjustable lenses can be quickly adapted to fit the required scenario

- A stereo camera can provide a higher reliability than is currently required. The sensor system has no moving parts, thus reducing wear and tear and making it less prone to errors.
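The depth map mentioned above is typically derived from the disparity between the two images. This is a minimal sketch, assuming a rectified pair with parallel optical axes (a simplification of the angled mounting described above); the focal length, baseline and disparity values are hypothetical.

```python
import math


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair; zero disparity maps to infinity."""
    if disparity_px <= 0:
        return math.inf
    return focal_px * baseline_m / disparity_px


def depth_map(disparities, focal_px, baseline_m):
    """Convert a 2-D grid of disparities (pixels) into depths (metres)."""
    return [[depth_from_disparity(d, focal_px, baseline_m) for d in row]
            for row in disparities]


FOCAL_PX = 700.0    # assumed focal length in pixels
BASELINE_M = 0.12   # assumed distance between the two cameras

disparity = [[42.0, 28.0],
             [0.0, 84.0]]
depths = depth_map(disparity, FOCAL_PX, BASELINE_M)
```

Depth is inversely proportional to disparity, so nearby objects are resolved much more finely than distant ones.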

-

3D LIDARS

- 3D laser imaging systems operate at night in all ambient illuminations and weather conditions. These techniques can perform the strategic surveillance of the environment for various worldwide operations (up to long ranges)

- Vertical accuracy 5-15 cm (1σ), horizontal accuracy 30-50 cm

- Data collection is independent of sun inclination and is possible at night and in slightly bad weather

- LiDAR pulses can reach beneath the canopy, thus generating measurements of points there, unlike photogrammetry

- Up to 167,000 pulses per second. More than 24 points per m² can be measured. Multiple returns to collect data in 3D.

- LiDAR also observes the amplitude of back scatter energy thus recording a reflectance value for each data point. This data, though poor spectrally, can be used for classification, as at the wavelength used some features may be discriminated accurately.

-

-

Comparison of vision sensors

-

Vision Sensors

-

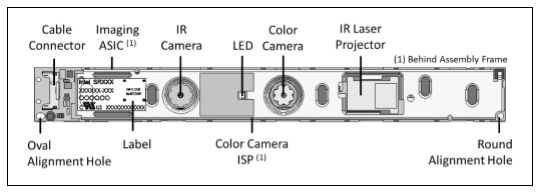

Intel RealSense 3D camera SR300

-

Components placement:

-

Components used:

- Cable connector

- Imaging ASIC

- IR camera

- LED

- Colour camera

- IR laser projector

- Colour camera ISP

-

3D Imaging system

- The IR projector and IR camera operate in tandem using coded light patterns to produce a 2D array of monochromatic pixel values

- The values are processed by the imaging ASIC to generate depth

- The colour camera consists of a chromatic sensor and an image signal processor, which capture and process chromatic pixel values

- These values generate colour video frames, which are transmitted to the imaging ASIC and then to the client system

- The colour camera can function independently from the infrared camera or synchronously with it to create colour + IR + depth video frames

-

-

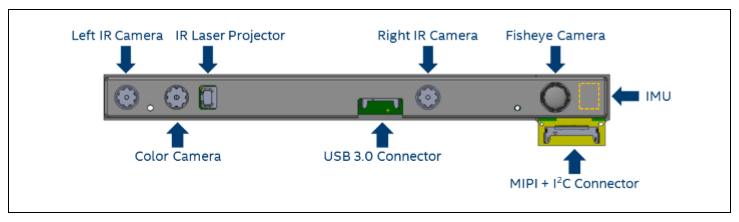

Intel RealSense 3D camera ZR300

-

Components used:

-

Left IR camera

-

Colour camera

-

IR laser projector

-

USB 3.0 connector

-

Right IR camera

-

Fisheye camera

-

IMU

-

MIPI + I^2C connector

Components placement:

-

-

3D imaging system:

- The left IR camera, right IR camera and IR laser projector are used to calculate depth; the left and right camera data is sent to the ASIC

- The ASIC calculates the depth value for each pixel in the image

- IR projector is used to enhance the ability of the system to calculate depth in scenes with low amount of texture

- Camera module output type is a depth measurement from the parallel plane of the module and not the absolute range from the module cameras
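The difference between plane depth and absolute range matters when distances to objects are computed from the depth image. The sketch below converts one into the other under a pinhole camera model; the intrinsics `fx`, `fy`, `cx`, `cy` are assumed example values, not ZR300 calibration data.

```python
import math


def range_from_depth(depth_m, u, v, fx, fy, cx, cy):
    """Euclidean range to the point seen at pixel (u, v), given its plane depth."""
    # Back-project the pixel to camera coordinates with the pinhole model.
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return math.sqrt(x * x + y * y + depth_m * depth_m)


# Hypothetical intrinsics for a 640x480 depth image.
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

centre_range = range_from_depth(2.0, 320, 240, FX, FY, CX, CY)
edge_range = range_from_depth(2.0, 620, 240, FX, FY, CX, CY)
```

At the image centre the two quantities coincide; towards the image edge the absolute range exceeds the reported depth for the same depth value.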

-

Advantages of using fisheye camera

- It creates an image that replicates a circle or barrel with 180 degree or 360 degree wide FOV.

- The curvature of the fisheye lens lets it view in all directions at the same time

-

-

-

Phase 2 Requirement analysis

Requirements

- Recognize objects and operators(users)

- Calculate relative distance of objects from itself

- Identify orientation of floor to aid in COG balancing and path planning

- Recognize colors of objects and provide audio confirmation of color detected

- Track object movement, and change its orientation to fit object into its FOV

- Produce 3D-model of the environment

- Work in real-time

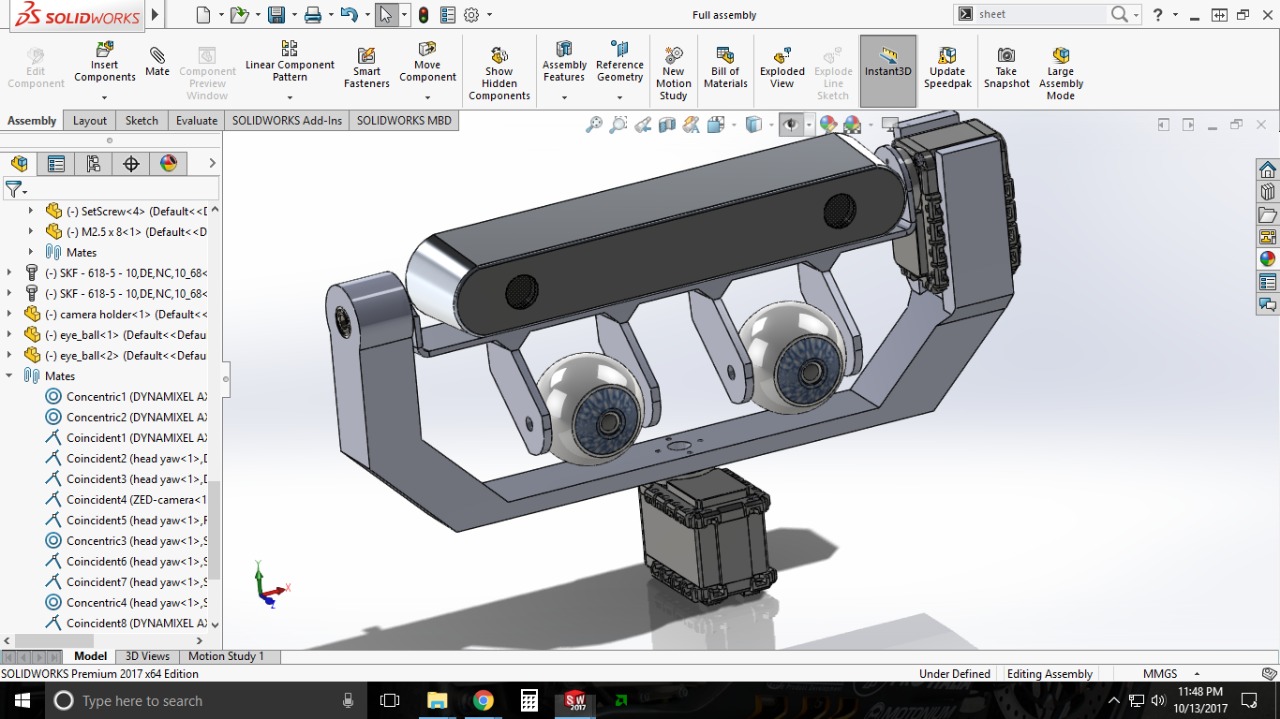

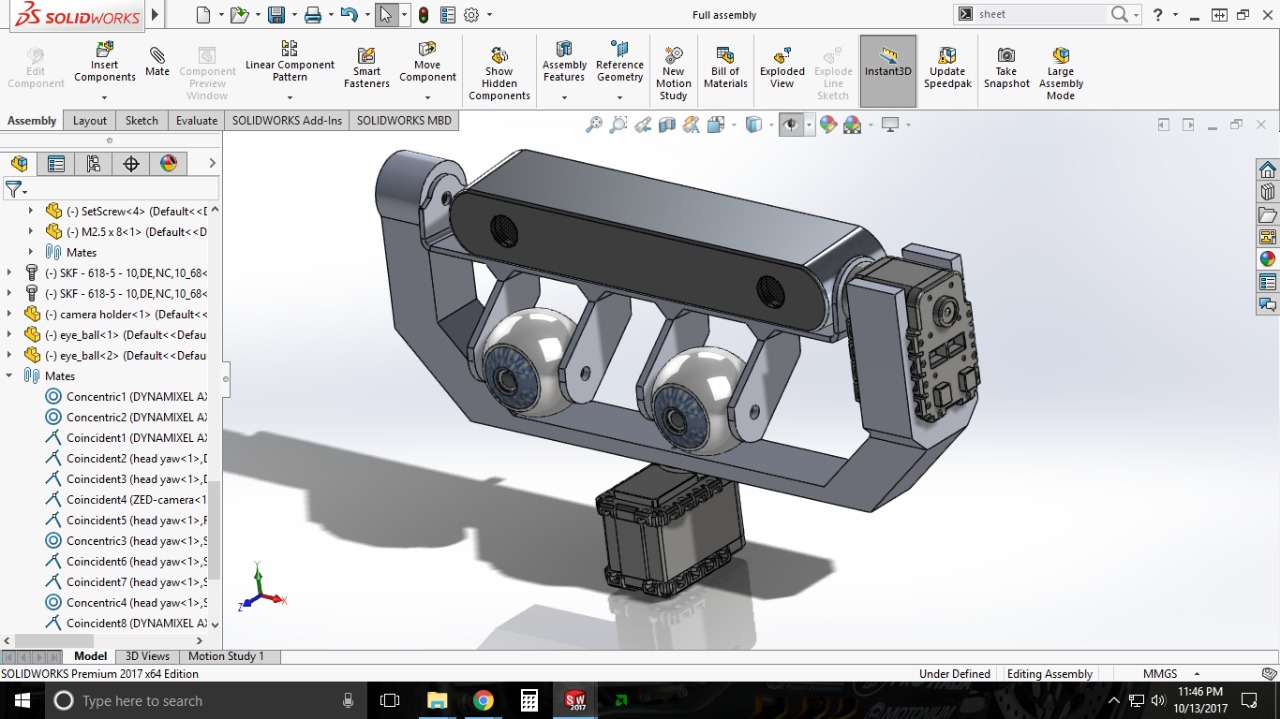

- Fit into the head model which resembles head of a six year old child

- Have wide FOV

- Compact size

- Have noise cancellation feature so that no wrong commands are interpreted by the sensor

- Detect command to be executed

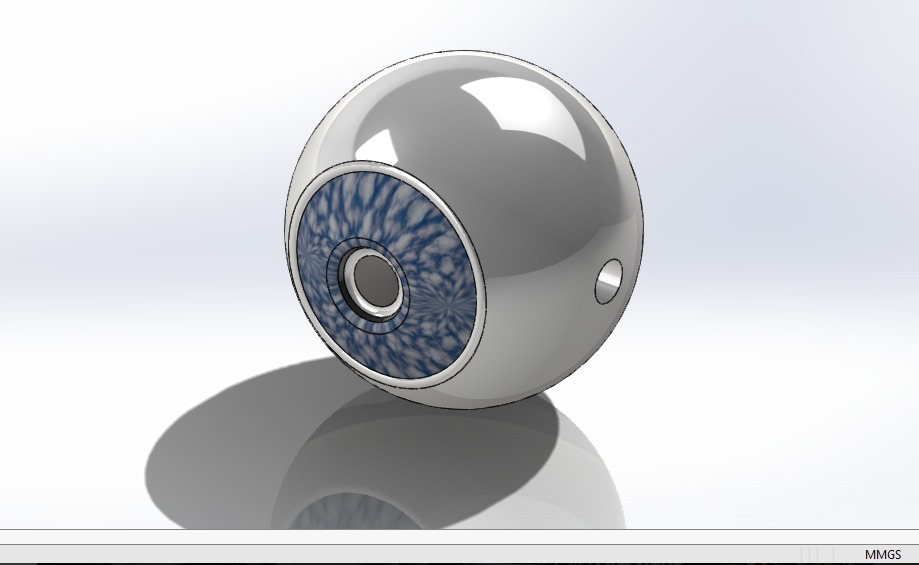

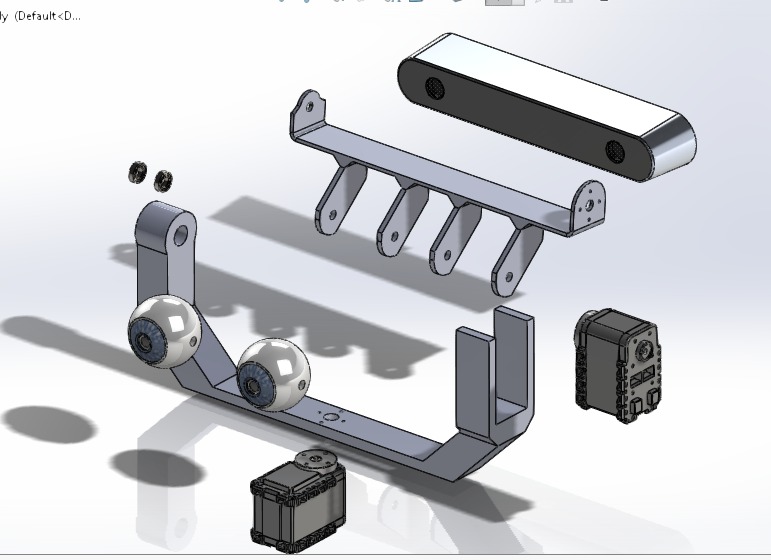

- Resemble human eyes

- Show emotions by moving eyes while thinking(processing)

- Provide audio output

- Audio output should be clean and clear

- Sustain load of all the components

- Have inbuilt gear system so that complexity of the design is reduced

- Have feedback system

- Accurate and precise

- Durable

- Motor size should be small and compact, such that it fits into the space available

- Motors placement should be modular

- DOF should be such that it can resemble human head movement

- Cost-efficient

- Light-weight

- Respond quickly

- Have independent and modular controller

- Design should be stable

- Upgradability feature so that any changes necessary can be made

- Follow robot laws to ensure safety of users and itself

- Cognitive behavior

Engineering Specifications

| Serial Number | Requirement | Nominal value | Ideal value |

|---|---|---|---|

| 1 | Recognize objects and operators(users) | - | - |

| 2 | Calculate relative distance of objects from itself | 2.5m | 3m |

| 3 | Identify orientation of floor to aid in COG balancing and path planning | - | - |

| 4 | Recognize colors of objects and provide audio confirmation of color detected | 390nm-700nm | 390nm-700nm |

| 5 | Track object movement, and change its orientation to fit object into its FOV | ||

| 6 | Produce 3D-model of the environment | - | - |

| 7 | Work in real-time | ||

| 8 | Fit into the head model which resembles head of a six year old child | 14x12x10 | 12x12x12 |

| 9 | Have wide FOV | 166(deg) | 180(deg) |

| 10 | Compact in size | <14x12x10 | 12x12x12 |

| 11 | Have noise cancellation feature so that no wrong commands are interpreted by the sensor | - | - |

| 12 | Detect command to be executed | 80dB | 140dB |

| 13 | Resemble human eyes | - | - |

| 14 | Show emotions by moving eyes while thinking(processing) | - | - |

| 15 | Provide audio output | 144dB | 140dB |

| 16 | Audio output should be clean and clear | - | - |

| 17 | Sustain load of all the components | 3.5kg | 2kg |

| 18 | Have inbuilt gear system so that complexity of the design is reduced | - | - |

| 19 | Have feedback system | - | - |

| 20 | Accurate and precise | - | - |

| 21 | Durable | 8yrs | 10yrs |

| 22 | Motor size should be small and compact, such that it fits into the space available | <14x12x10 | 12x12x12 |

| 23 | Motors placement should be modular | - | - |

| 24 | DOF should be such that it can resemble human head movement | 2 | 6 |

| 25 | Cost-efficient | 25000 | 20000 |

| 26 | Light-weight | 3kg | 2kg |

| 27 | Respond quickly | ||

| 28 | Have independent and modular controller | - | - |

| 29 | Design should be stable | - | - |

| 30 | Upgradability feature so that any changes necessary can be made | - | - |

| 31 | Follow robot laws to ensure safety of users and itself | - | - |

| 32 | Cognitive behavior | - | - |

Phase 3 Functional analysis

-

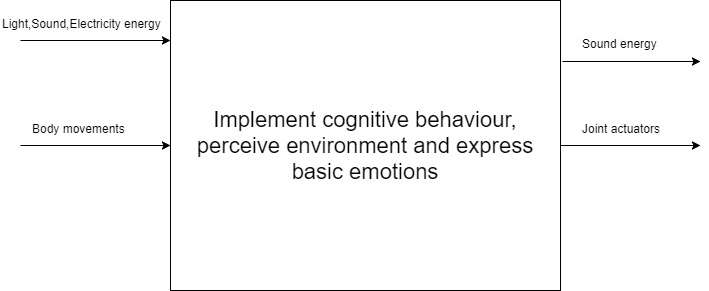

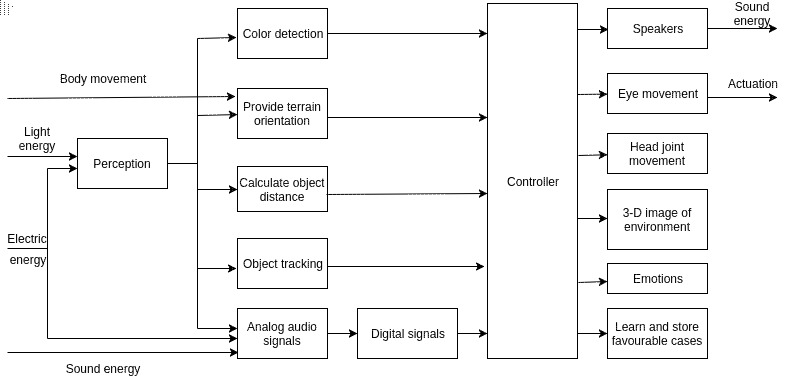

Black Box

-

White Box

-

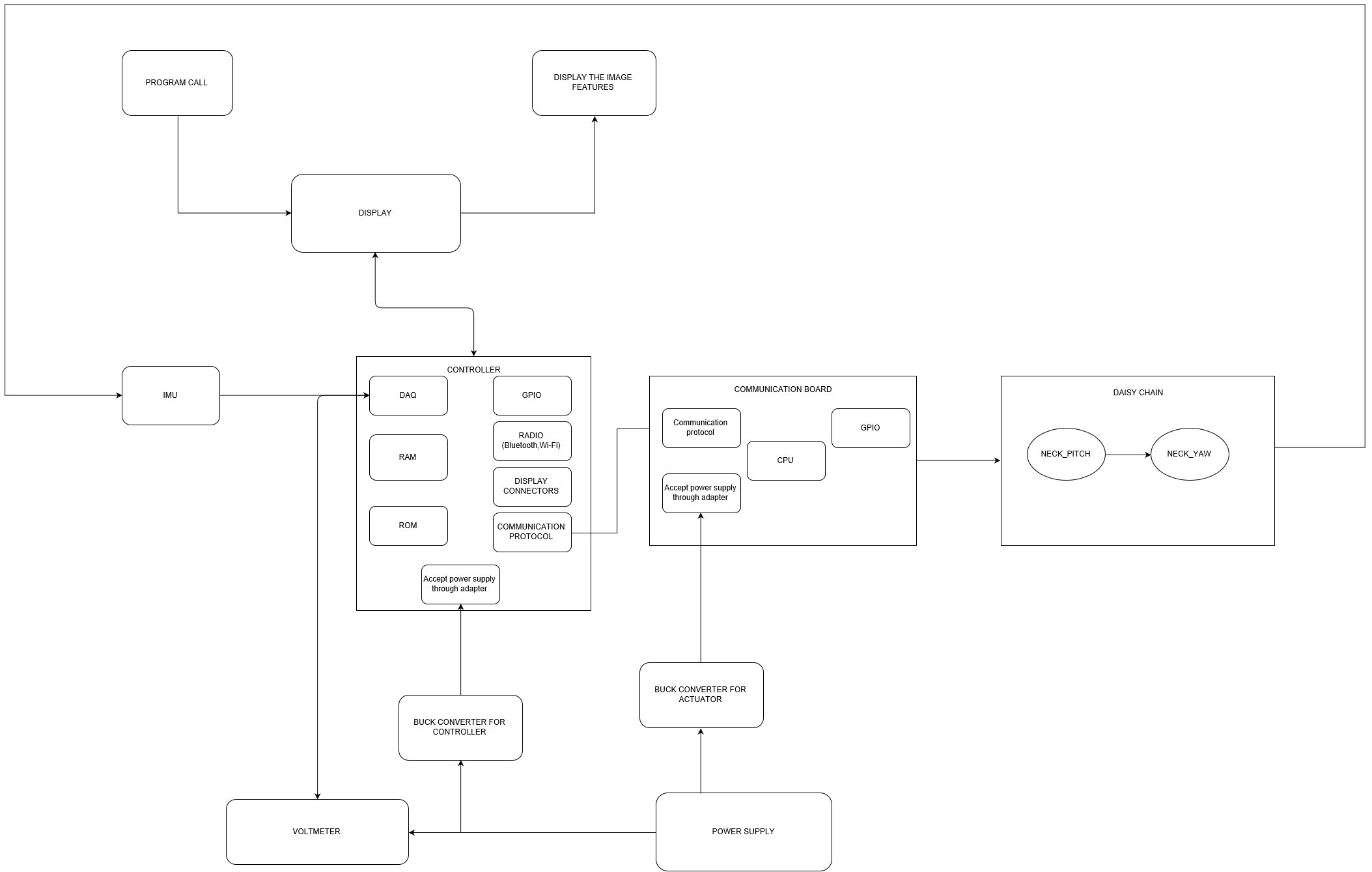

Hardware architecture

-

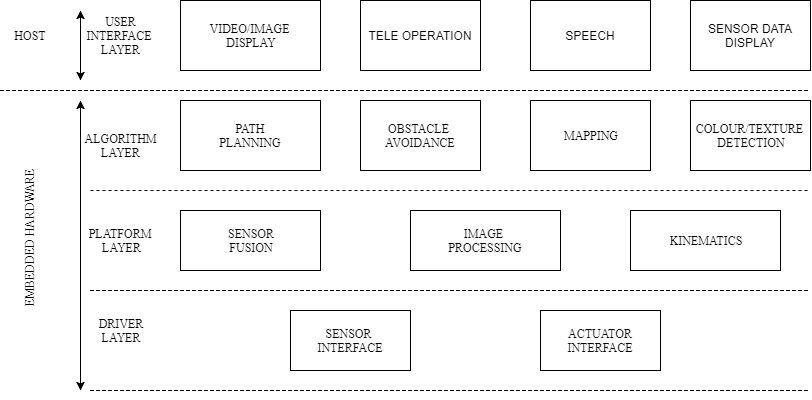

Software architecture

Phase 4 Conceptualization

-

Morphological chart

| Functions | Solution 1 | Solution 2 | Solution 3 | Solution 4 |
|---|---|---|---|---|
| Actuation | Maxon geared DC motor | Cube servo | | |
| Converting electrical signals into sound signals | VEX Speaker | | | |
| Converting sound signals into electrical signals | Lapel microphone | | | |
| Perception | Intel RealSense SR300 | Intel RealSense ZR300 | ZED | |
| Connectivity | Ethernet | Wi-Fi | Bluetooth | USB |
| Power transmission | Gears | Belt | | |
| Controllers | Arduino | Raspberry Pi | BeagleBone | Toradex |
| Motors | Cytron G-15 cube servo | MG996R digital servo | Dynamixel | |

-

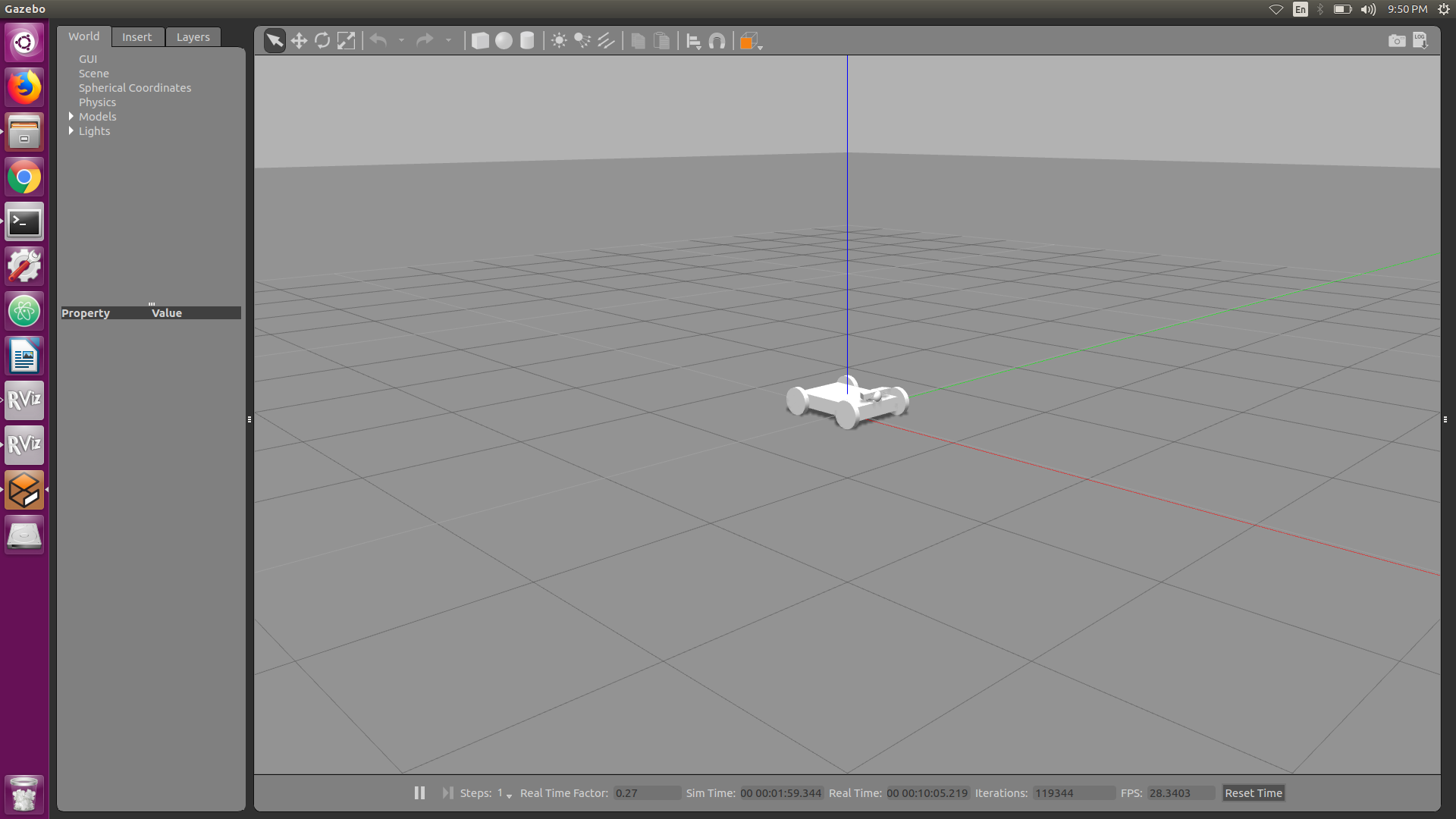

Simulation

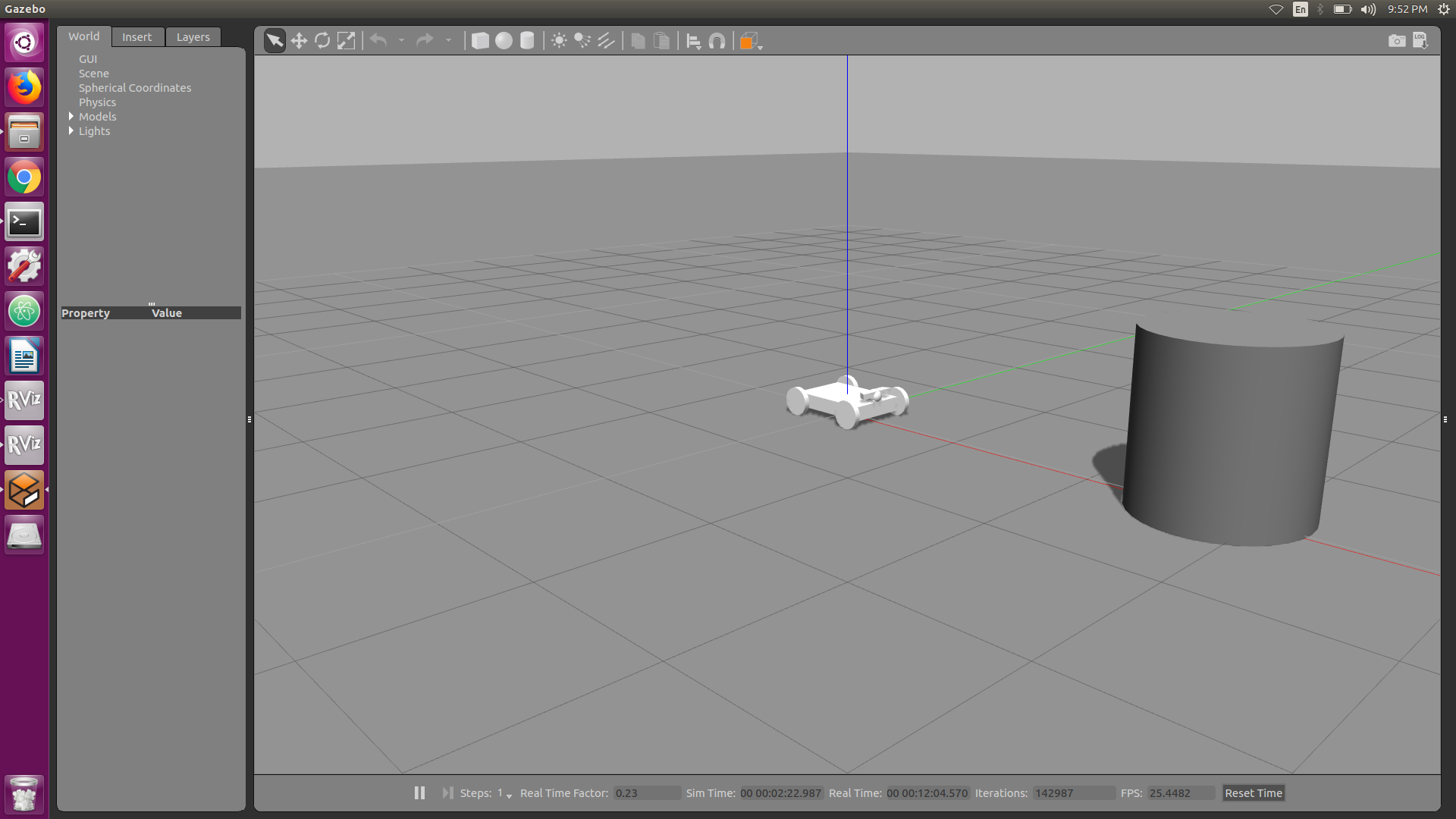

Importing robot to gazebo

Placing obstacle in gazebo

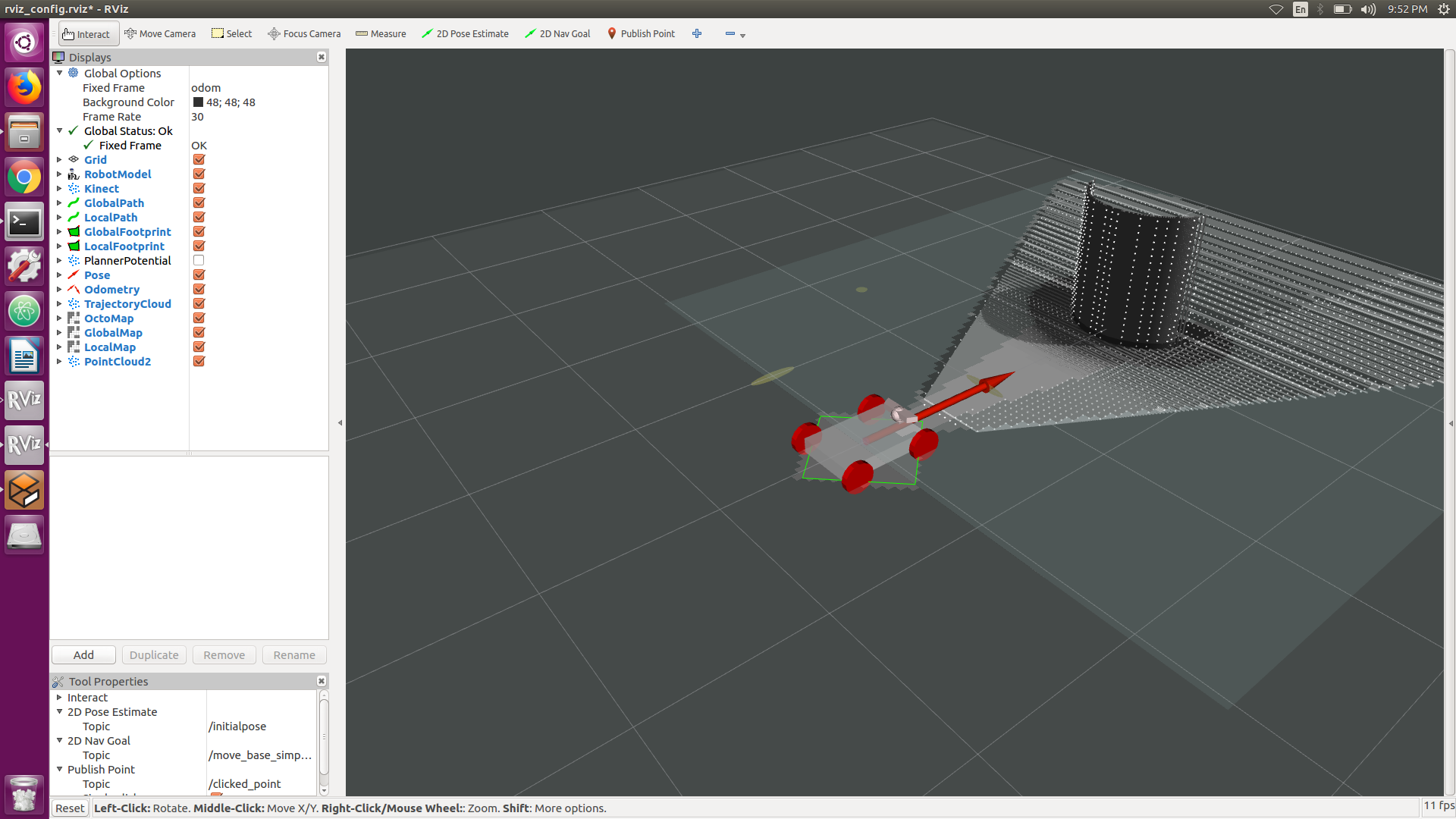

Depth image of obstacle in rviz

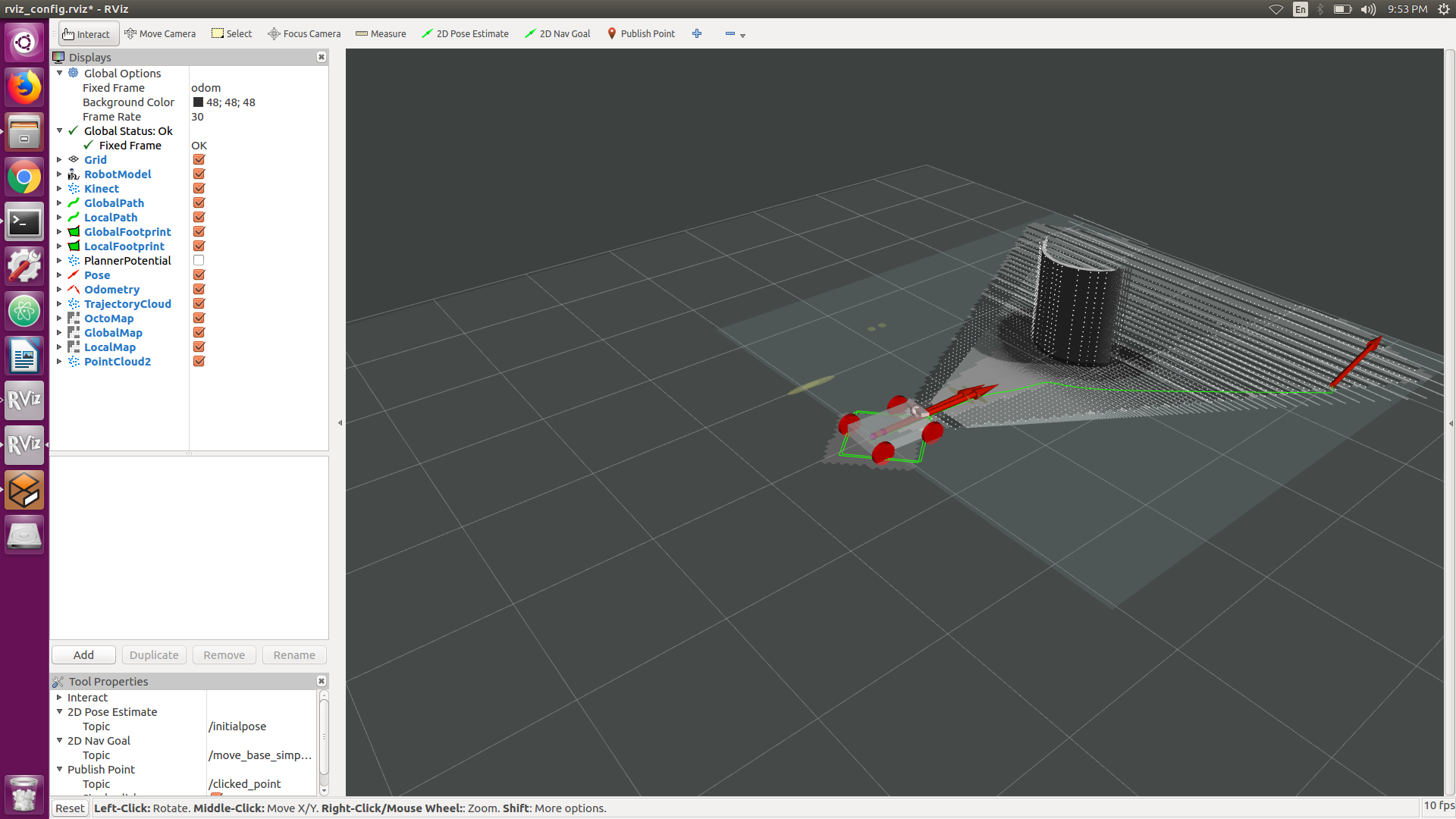

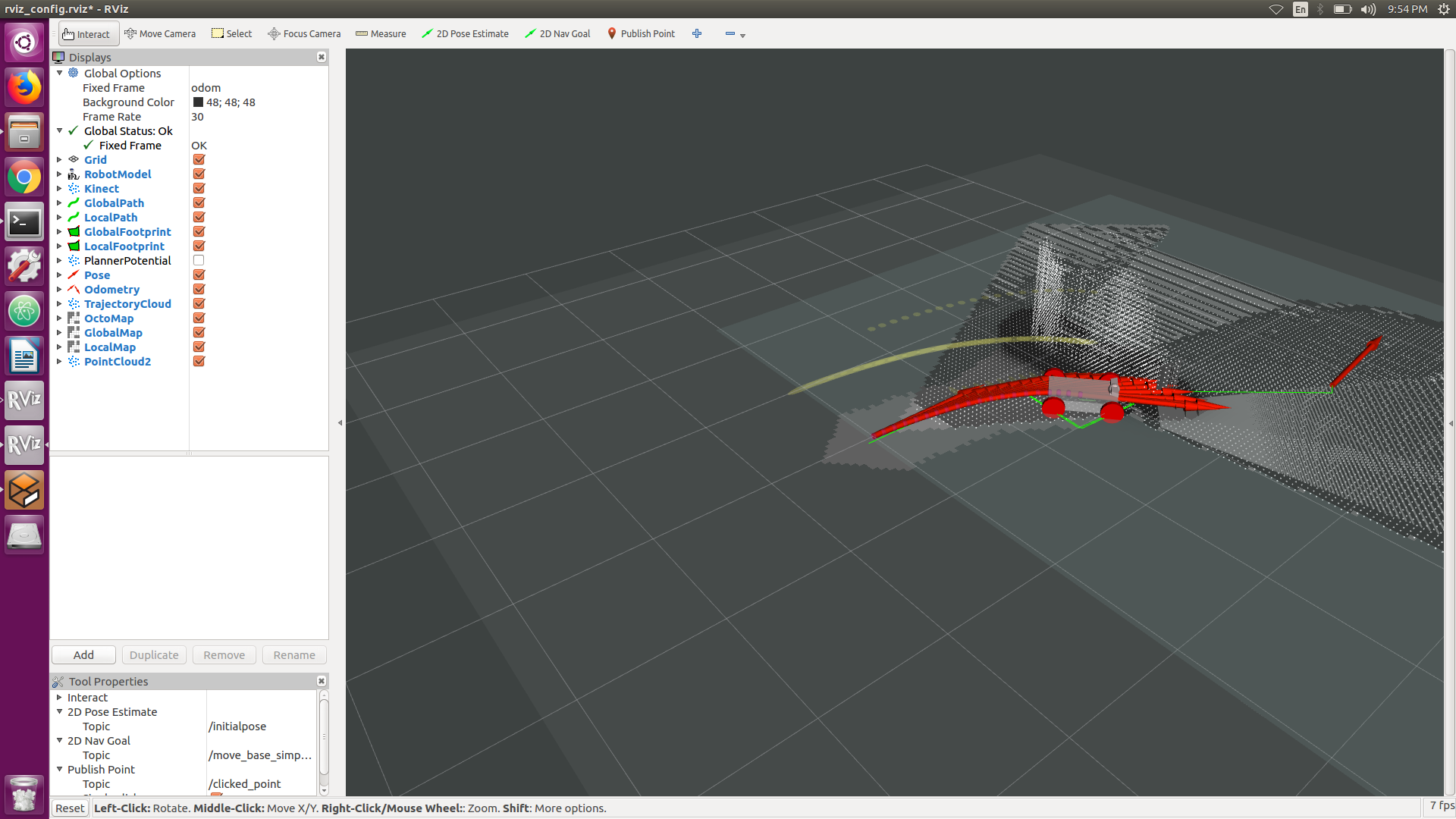

Path generation

Obstacle avoidance

-

Motor Selection

| Parameter | Dynamixel MX28-t | Dynamixel MX28-t | Cube Servo |
|---|---|---|---|
| Operating Voltage | 12 V | 14.8 V | 6.5-17.8 V |
| Torque | 25.5 kg-cm | 31.6 kg-cm | 15 kg-cm |
| No-load Speed | 55 rpm | 67 rpm | 63 rpm |
| Current | 1.4 A | 1.4 A | 1.5 A |
| Weight | 72 g | 72 g | 63 g |
| Operating Angle | 360 deg | 360 deg | 360 deg |
| Protocol | TTL Asynchronous Serial | TTL Asynchronous Serial | Half duplex serial with daisy chain |
| Position Feedback | Yes | Yes | Yes |
| Load voltage Feedback | Yes | Yes | Yes |
| Temperature Feedback | Yes | Yes | Yes |
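The torque figures above can be compared against a load requirement with a short script. This is a rough static check only: the 5 cm lever arm is a hypothetical offset between the joint axis and the centre of mass of the load, the 3.5 kg load is the nominal value from the engineering specifications, and the listed torques are taken at face value.

```python
G = 9.80665  # standard gravity, m/s^2

# Torque ratings from the comparison above, in kg-cm.
MOTOR_TORQUE_KGCM = {
    "Dynamixel MX28-t @ 12 V": 25.5,
    "Dynamixel MX28-t @ 14.8 V": 31.6,
    "Cube Servo": 15.0,
}


def kgcm_to_nm(torque_kgcm):
    """Convert a torque from kg-cm to newton-metres."""
    return torque_kgcm * G / 100.0


def required_torque_kgcm(load_kg, lever_arm_cm, safety_factor=1.0):
    """Static holding torque for a load at a given lever arm."""
    return load_kg * lever_arm_cm * safety_factor


def candidates(load_kg, lever_arm_cm, safety_factor=1.0):
    """Motors whose rated torque covers the required holding torque."""
    need = required_torque_kgcm(load_kg, lever_arm_cm, safety_factor)
    return [name for name, torque in MOTOR_TORQUE_KGCM.items() if torque >= need]


# Assumed case: 3.5 kg load held 5 cm from the joint axis.
suitable = candidates(load_kg=3.5, lever_arm_cm=5.0)
mx28_nm = kgcm_to_nm(25.5)
```

Under these assumptions the required holding torque is 17.5 kg-cm, which both Dynamixel configurations cover and the Cube Servo does not; a dynamic analysis with accelerations would tighten the margin further.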

-

Conceptualization

Phase 5: Prototyping

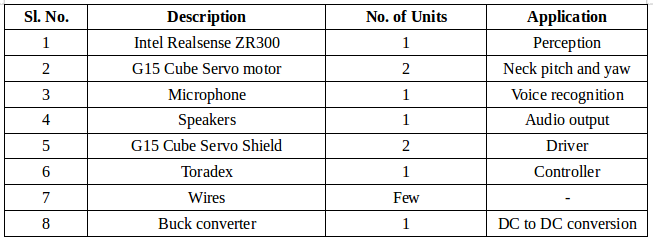

Bill of Materials